THE KINEMATICS OF SOUND-PRODUCING AND NON-TECHNICAL MOVEMENTS

A common trend in the study of musicians' body movements is the delineation of performance gestures into distinct or overlapping movement categories. It has been suggested that these categories comprise three general movement types: sound-producing movements, whose direct consequence is the generation of sound; sound-facilitating movements, which support sound-producing movements but do not themselves generate sound directly; and ancillary movements, referred to by the authors as non-technical or concurrent movements, which are involved in neither sound production nor sound facilitation (Cadoz & Wanderley, 2000; Jensenius, Wanderley, Godøy, & Leman, 2010; Wanderley, 2002).[1] The target article focused on the function of the last category, non-technical movements: specifically, how these movements correlated with the performer's expressive interpretation of the musical work and with their interpretation of its phrasal structure.

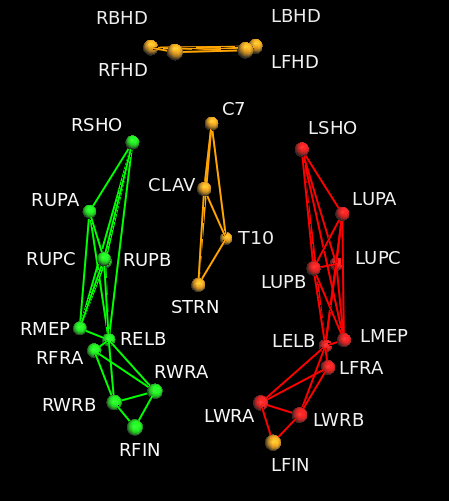

I share the authors' view that non-technical movements are likely to be tied to the expressive and phrasal goals of the performer. However, it is unclear whether the movements reported as non-technical gestures in the target article reflect only movements of this category. Figure 1 illustrates the placement of the markers on musicians' bodies used to collect the 3D data reported in the target article (as prescribed by Cutti, Paolini, Troncossi, Cappello, & Davalli, 2005). In addition to the eight markers located on the head and chest, ten markers were placed on each side of the body, symmetrically across the shoulder, upper arm, elbow, forearm, wrist, and finger. The Cartesian coordinate time series of all 28 markers over the course of a performance were entered into a principal components analysis to produce representative "motion profiles" for each musician and performance (see Appendix B and Figure 5 in the target article). As such, the motion profiles reflect a dimensionality reduction of movements of the performer's head, arms, wrists, and possibly fingers. Because the calculation includes movements of the shoulder, upper arm, forearm, and wrist, the motion profiles may reflect a combination of non-technical and sound-facilitating movements.
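To make this concern concrete, the following minimal Python sketch shows how a PCA-based motion profile can be obtained from marker trajectories. It is a generic PCA computed with NumPy's singular value decomposition, not the target article's procedure (see its Appendix B); the helper name `motion_profile`, the array layout (frames × markers × 3), and the synthetic data are assumptions for illustration. Because every coordinate of every marker enters the same decomposition, arm and wrist markers are weighted alongside head and chest markers in each component.

```python
import numpy as np


def motion_profile(markers: np.ndarray, n_components: int = 1):
    """Generic PCA of marker trajectories (hypothetical helper, not the target article's code).

    markers: array of shape (frames, n_markers, 3) holding x, y, z positions.
    Returns (scores, explained_ratio, components), where components has shape
    (n_components, n_markers, 3) and shows how much each marker axis contributes.
    """
    frames, n_markers, _ = markers.shape
    # One row per frame: every marker's x, y, z become columns (28 x 3 = 84 here).
    X = markers.reshape(frames, -1).astype(float)
    # Centre each column so the components describe movement about the mean posture.
    X -= X.mean(axis=0)
    # Thin SVD: rows of Vt are the principal axes, U * S are the component scores.
    U, S, Vt = np.linalg.svd(X, full_matrices=False)
    scores = U[:, :n_components] * S[:n_components]
    explained_ratio = S[:n_components] ** 2 / np.sum(S ** 2)
    components = Vt[:n_components].reshape(n_components, n_markers, 3)
    return scores, explained_ratio, components


# Synthetic example: 28 markers sharing one slow, hypothetical sway pattern plus noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 500)
sway = np.sin(2 * np.pi * 0.5 * t)
directions = rng.normal(size=(28, 3))  # hypothetical per-marker sway direction
markers = sway[:, None, None] * directions[None] + 0.05 * rng.normal(size=(500, 28, 3))

scores, explained, components = motion_profile(markers)
print(f"PC1 explains {explained[0]:.1%} of the variance")
```

With real data, inspecting `components` for the arm and wrist markers would indicate how much of a profile is driven by limbs that also facilitate sound production.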

Fig. 1. Marker placement on musicians' bodies. Each sphere represents the approximate location of a retroreflective marker used to capture the 3D data reported in the target article. From MacRitchie (2011, p. 98).

In piano performance, sound is generated by depressing the keys through direct contact with the distal phalanges of the performer's fingers (Goebl & Palmer, 2013). At a minimum, then, sound-producing gestures encompass movements of the performer's fingers or fingertips. Sound-facilitating movements, however, involve a much broader network of interconnected skeletal, muscular, tendinous, and nervous systems that enable finger motion. Jensenius and colleagues (2010) suggest that movements of the hand, arm, and upper body should be categorized as sound-facilitating gestures in piano performance, and empirical studies of pianists' muscle and joint activity support this categorization. In an examination of multi-joint arm movements during the keystrokes of expert pianists, Furuya, Altenmüller, Katayose, and Kinoshita (2010) found that movements of the shoulder, elbow, and wrist were all directly related to the control of note dynamics (loudness) and to the type of touch used, pressed or struck (see also Furuya, Aoki, Nakahara, & Kinoshita, 2012). As such, the motion profiles reported in the target article encompass both non-technical and sound-facilitating movements.

The issue, though, is not clear-cut. Note dynamics are a critical feature of emotional expression in music performance and composition (Gabrielsson & Lindström, 2001; Juslin, 2001; Livingstone, Muhlberger, Brown, & Thompson, 2010). It has been suggested that during performance, pianists directly control only two variables: duration and intensity (Seashore, 1938, as cited in Todd, 1985). This notion is supported by recent work by Thompson and Luck (2012), in which pianists performed a work under several conditions: normal, exaggerated (increased expression), deadpan (no expression), and immobile (as little body movement as possible). Movements of the pianists' torso, head, arms, and wrists were recorded. In the immobile condition, pianists' movements and note dynamics were significantly reduced, approximating those of the deadpan performances. The authors also reported that "performers equate playing without expression to playing without nonessential movements" (2012, p. 19). Movements of the shoulder, arm, elbow, and wrist may therefore also be considered forms of non-technical gesture, and need not be treated as mutually exclusive with sound-facilitating movements. This evidence highlights the problem of delineating bottom-up, biomechanical movements in an interconnected system using top-down, abstract categories.

I do not believe that the issues raised here change the interpretation of the data in the target article. If anything, they underscore the fascinating and deeply interconnected nature of movement and emotional expression in music performance. My suggestion is that the movements reported by the authors need not be classified as non-technical gestures alone, but may instead be understood as encompassing a broader range of movements involved in sound production and the control of emotional expression.

INDIVIDUAL DIFFERENCES IN EMBODIED EXPRESSIVE TIMING

A main finding of the target article was that motion differed considerably between performers, and across pieces played by the same performer. These results may reflect the natural individual differences exhibited by expert musical performers. In a detailed investigation of performance timing microstructure, Repp (1992) examined 28 performances of Schumann's Träumerei, the seventh piece of Kinderszenen, by 24 world-famous concert pianists. Repp found that while many of the performers' timings could be predicted by Todd's (1985) model of expressive timing in tonal music, considerable room remained for individual variation. Repp (1992) also found that repeated performances of a work by some pianists were highly correlated (see also Repp, 1998). This suggests that performers maintain a stable mental representation of expressive timing that is associated with the underlying structure of the musical work. Performers may likewise have a stable yet unique spatiotemporal representation of embodied musical expression. Alternatively, gestures may be generated 'on the fly' and be repeated across performances because they are the gestures a specific performer uses to achieve a particular expressive goal. Either way, gestures would vary between performers, who may choose different movements or hold different interpretations of the underlying phrasal structure, but would remain stable across repeated performances by the same performer, as shown by Wanderley (2002). Such repeatability could be examined in the present data by correlating a performer's movements across repeated performances, separately at structurally important and unimportant locations. Importantly, while expressive timing is widely recognized in classical schools as pivotal to expressive performance, performance gesture is not. That is, while timing and dynamics are likely to be shaped by cultural exposure and expert training, a performer's expressive bodily movements may not be constrained by pedagogy or cultural learning. Performers nonetheless operate under the constraints of biological motion, so their movement space remains bounded. These considerations help account for the individual differences in expressive movement found in the target article.
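The repeatability analysis suggested above might be sketched in Python as follows. This is a minimal illustration under stated assumptions: two performances by the same performer that have already been time-aligned to a common timeline (for example, after the warping used in the target article), and a boolean mask marking structurally important frames. The mask, the phrase-boundary positions, the window width, and the helper name `repeatability` are all hypothetical.

```python
import numpy as np


def repeatability(perf_a, perf_b, important):
    """Pearson r between two time-aligned motion profiles, computed separately
    for structurally important and unimportant frames (hypothetical helper)."""
    a = np.asarray(perf_a, dtype=float)
    b = np.asarray(perf_b, dtype=float)
    mask = np.asarray(important, dtype=bool)
    r_important = np.corrcoef(a[mask], b[mask])[0, 1]
    r_other = np.corrcoef(a[~mask], b[~mask])[0, 1]
    return r_important, r_other


# Synthetic example: a gesture the performer repeats at three hypothetical
# phrase boundaries, plus independent noise in each performance.
rng = np.random.default_rng(1)
n = 1000
frames = np.arange(n)
boundaries = [250, 500, 750]
gesture = sum(np.exp(-(((frames - c) / 10.0) ** 2)) for c in boundaries)

important = np.zeros(n, dtype=bool)
for c in boundaries:
    important[c - 25 : c + 26] = True  # +/- 25 frames around each boundary

perf_1 = gesture + 0.1 * rng.normal(size=n)
perf_2 = gesture + 0.1 * rng.normal(size=n)
r_imp, r_other = repeatability(perf_1, perf_2, important)
print(f"important: r = {r_imp:.2f}, elsewhere: r = {r_other:.2f}")
```

Because consecutive motion-capture frames are strongly autocorrelated, such correlations should not be tested for significance as if each frame were an independent observation.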

FREQUENCY-BASED ANALYSIS OF MOTION DATA

The motion profiles reported in the target article appear to be periodic (see Appendix B and Figure 5). This quality opens the data up to a range of analyses that may yield insight into the rhythmic, repeating nature of performers' movements. To begin, a Fourier transform of the motion profile data may identify underlying frequency components in the performers' movements. Such frequency components may reflect the timing structure, 'pulse', or 'pulses' of the performer. Another method that may prove fruitful is empirical mode decomposition (Huang et al., 1998), a more sophisticated technique that is well suited to non-stationary, nonlinear data. It could be used to separate out frequency components and underlying trends in the data, such as the motion peak between phrases 0.65-0.7 in the A major prelude that was observed in several performers (as discussed in MacRitchie, Buck, & Bailey, 2013). It is unclear whether this analysis would be best performed on the warped or the original, unwarped data.
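A Fourier analysis of a single motion profile could begin along the lines of this minimal Python sketch, which uses NumPy's real FFT to list the strongest spectral peaks. The 100 Hz sampling rate, the 60-second synthetic profile, its 0.5 Hz and 2 Hz components, and the helper name `spectral_peaks` are assumptions chosen for illustration, not values from the target article. If the analysis were run on warped data, the frequencies would be in cycles per unit of normalized time rather than in hertz. A Fourier transform also assumes that frequency content is stable across the analysed window, which is one reason empirical mode decomposition is attractive for non-stationary movement data.

```python
import numpy as np


def spectral_peaks(profile, fs, k=2):
    """Return the k strongest local maxima of the magnitude spectrum (hypothetical helper).

    profile: 1-D motion profile; fs: sampling rate in samples per second.
    """
    x = np.asarray(profile, dtype=float)
    x = x - x.mean()                     # remove the constant (mean posture) offset
    x = x * np.hanning(len(x))           # taper the ends to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    # Local maxima, excluding the first (0 Hz) and last bins.
    inner = spectrum[1:-1]
    is_peak = (inner > spectrum[:-2]) & (inner >= spectrum[2:])
    peak_idx = np.nonzero(is_peak)[0] + 1
    strongest = peak_idx[np.argsort(spectrum[peak_idx])[::-1][:k]]
    return freqs[strongest], spectrum[strongest]


# Synthetic profile: a 0.5 Hz sway, a weaker 2 Hz component, and noise.
fs = 100.0
t = np.arange(0, 60 * fs) / fs
rng = np.random.default_rng(2)
profile = (np.sin(2 * np.pi * 0.5 * t)
           + 0.3 * np.sin(2 * np.pi * 2.0 * t)
           + 0.1 * rng.normal(size=t.size))

peak_freqs, peak_mags = spectral_peaks(profile, fs)
print(peak_freqs)
```

Peak-picking on local maxima, rather than simply taking the largest bins, avoids reporting two neighbouring bins of the same windowed peak as separate components.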

The authors' transformation of head motion data from Cartesian to spherical coordinates also allows for additional analysis. Inclination and azimuth are angular data and, as such, could be examined using circular statistics. This analysis may reveal underlying trends in the direction of performers' head movements that were not uncovered by the principal components analysis.
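Circular summaries of head direction could start from something like this minimal Python sketch. It assumes that head motion is available as Cartesian vectors relative to some origin, with z as the vertical axis and inclination measured from +z; the target article's axis conventions may differ, and the helper names `to_spherical` and `circular_summary` are hypothetical. Azimuth wraps around at ±π and suits these statistics directly, whereas inclination is confined to [0, π], so treating it as circular is a modelling choice. The example shows why ordinary averaging fails for angles: four azimuths clustered around ±π have an arithmetic mean of 0, the opposite direction, whereas their circular mean lies at π.

```python
import numpy as np


def to_spherical(xyz):
    """Convert (N, 3) Cartesian vectors to (r, inclination, azimuth) in radians.

    Assumes z is vertical; inclination is measured from +z, azimuth in the x-y plane.
    """
    x, y, z = np.asarray(xyz, dtype=float).T
    r = np.sqrt(x ** 2 + y ** 2 + z ** 2)
    inclination = np.arccos(np.clip(z / r, -1.0, 1.0))  # in [0, pi]
    azimuth = np.arctan2(y, x)                          # in (-pi, pi]
    return r, inclination, azimuth


def circular_summary(angles):
    """Circular mean direction and mean resultant length R of angles in radians.

    R is near 1 when angles are tightly concentrated and near 0 when there is
    no single preferred direction.
    """
    angles = np.asarray(angles, dtype=float)
    c, s = np.cos(angles).mean(), np.sin(angles).mean()
    return np.arctan2(s, c), np.hypot(c, s)


# Azimuths clustered around +/- pi (pointing roughly along the negative x-axis).
azimuths = np.array([3.0, -3.0, 3.1, -3.1])
print(azimuths.mean())                  # arithmetic mean is 0, the opposite direction
mean_dir, R = circular_summary(azimuths)
print(mean_dir, R)                      # circular mean near pi, R close to 1
```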

CONCLUSION

During performance, musicians move their bodies in expressive displays that reflect underlying qualities of the performed work. Buck, MacRitchie, and Bailey's article did a commendable job of drawing together a range of complex techniques from disparate fields to support this central thesis, and clearly demonstrated that musicians' body movements reflect aspects of the underlying musical work. Their article will spur further interest in the complex yet fascinating science of musicians' bodily movements during musical performance.

NOTES

- For a different terminology, see Delalande (1988, as cited in Cadoz & Wanderley, 2000).


REFERENCES

- Cadoz, C., & Wanderley, M.M. (2000). Gesture: Music. In: M.M. Wanderley & M. Battier (Eds.), Trends in Gestural Control of Music. Paris: IRCAM/Centre Pompidou, pp. 71-94.

- Cutti, A.G., Paolini, G., Troncossi, M., Cappello, A., & Davalli, A. (2005). Soft tissue artefact assessment in humeral axial rotation. Gait & Posture, Vol. 21, No. 3, pp. 341-349.

- Delalande, F. (1988). La gestique de Gould: éléments pour une sémiologie du geste musical. In: G. Guertin (Ed.), Glenn Gould, Pluriel. Montreal: Louise Courteau Editrice, pp. 83-111.

- Furuya, S., Altenmüller, E., Katayose, H., & Kinoshita, H. (2010). Control of multi-joint arm movements for the manipulation of touch in keystroke by expert pianists. BMC Neuroscience, Vol. 11, No. 82.

- Furuya, S., Aoki, T., Nakahara, H., & Kinoshita, H. (2012). Individual differences in the biomechanical effect of loudness and tempo on upper-limb movements during repetitive piano keystrokes. Human Movement Science, Vol. 31, No. 1, pp. 26-39.

- Gabrielsson, A., & Lindström, E. (2001). The influence of musical structure on emotional expression. In: P.N. Juslin & J.A. Sloboda (Eds.), Music and Emotion: Theory and Research. Oxford: Oxford University Press, pp. 223-248.

- Goebl, W., & Palmer, C. (2013). Temporal control and hand movement efficiency in skilled music performance. PLoS ONE, Vol. 8, No. 1, e50901. doi: 10.1371/journal.pone.0050901.

- Huang, N.E., Shen, Z., Long, S.R., Wu, M.C., Shih, H.H., Zheng, Q., . . . Liu, H.H. (1998). The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proceedings of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences, Vol. 454, No. 1971, pp. 903-995.

- Jensenius, A.R., Wanderley, M.M., Godøy, R.I., & Leman, M. (2010). Musical gestures: Concepts and methods in research. In: R.I. Godøy & M. Leman (Eds.), Musical Gestures: Sound, Movement, and Meaning. New York: Routledge, pp. 13-35.

- Juslin, P.N. (2001). Communicating emotion in music performance: A review and a theoretical framework. In: P.N. Juslin & J.A. Sloboda (Eds.), Music and Emotion: Theory and Research. Oxford: Oxford University Press, pp. 309-340.

- Livingstone, S.R., Muhlberger, R., Brown, A.R., & Thompson, W.F. (2010). Changing musical emotion: A computational rule system for modifying score and performance. Computer Music Journal, Vol. 34, No. 1, pp. 41-64.

- MacRitchie, J. (2011). Elucidating musical structure through empirical measurement of performance parameters. PhD thesis. University of Glasgow.

- MacRitchie, J., Buck, B., & Bailey, N.J. (2013). Inferring musical structure through bodily gestures. Musicae Scientiae, Vol. 17, No. 1, pp. 86-108.

- Repp, B.H. (1992). Diversity and commonality in music performance: An analysis of timing microstructure in Schumann's "Träumerei". The Journal of the Acoustical Society of America, Vol. 92, No. 5, pp. 2546-2568.

- Repp, B.H. (1998). A microcosm of musical expression. I. Quantitative analysis of pianists' timing in the initial measures of Chopin's Etude in E major. The Journal of the Acoustical Society of America, Vol. 104, No. 2, pp. 1085-1100.

- Seashore, C.E. (1938). Psychology of Music. New York: Dover Publications.

- Thompson, M.R., & Luck, G. (2012). Exploring relationships between pianists' body movements, their expressive intentions, and structural elements of the music. Musicae Scientiae, Vol. 16, No. 1, pp. 19-40.

- Todd, N. (1985). A model of expressive timing in tonal music. Music Perception, Vol. 3, No. 1, pp. 33-57.

- Wanderley, M.M. (2002). Quantitative analysis of non-obvious performer gestures. Gesture and Sign Language in Human-Computer Interaction. Berlin: Springer, pp. 241-253.