INTRODUCTION

TIMING is considered paramount in groove-based music, where in performance, varying magnitudes of microrhythmic irregularity from isochrony can supply degrees of metrical tension ranging from the subtle to the stark for aesthetic purposes (Butterfield, 2006, 2010; Câmara & Danielsen, 2019; Danielsen, 2010, 2018; Danielsen et al., 2015; Iyer, 2002). A few studies have investigated the extent to which various audio features, such as onset timing, attack articulation, and/or dynamic accentuation are involved in the production of so-called microrhythmic "timing styles/feels" in controlled performance contexts, and found that musicians tend to display a variety of timing and sound strategies when intentionally delaying or anticipating events relative to an external timing reference (Câmara, 2021; Câmara et al., 2020a, 2020b; Danielsen et al., 2015; Kilchenmann & Senn, 2011). The ways in which groove-based musicians produce intentionally asynchronous sounds in terms of action/movement strategies, however, remain unexplored. Therefore, in this study, we aim to investigate what kinds of action/movement features are involved in the performance of laid-back and pushed timing feel, and how they correlate with findings from our previous audio investigations of timing-sound strategies.

Recent studies have shown that both temporal and sound-related aspects are important in the production of different microrhythmic feels by expert musicians in performance, including not only manipulations of onset timing, but also changes in the shape of sounds, such as duration, intensity, and timbre (Câmara et al., 2020a, 2020b; Danielsen et al., 2015). For example, both Danielsen and colleagues (2015) and Câmara and colleagues (2020b) found that drummers tend to play intentionally asynchronous laid-back strokes both later in time and with greater dynamic accentuation to distinguish them from on-the-beat (synchronous) performances. The tendency to utilize late and loud sound combinations was explained as potentially facilitating the perception of asynchronous dyads of sound. Câmara and colleagues (2020a) also found that both guitarists and bassists manipulate sound features other than onset to communicate timing feel. Guitarists tend to lengthen their strokes (attack and decay segments) and play with lower brightness (spectral centroid [SC]) in addition to delaying onsets to achieve laid-back performances, and bassists tend to apply greater stroke intensity (SPL) in addition to early timing to achieve a pushed feel. These timing-sound strategies are largely in accord with research into the perceptual centers (P-centers) of musical sounds (Danielsen et al., 2019; Gordon, 1987; Villing, 2010), in the sense that musicians may be exploiting the delaying P-center effects of longer duration, lower frequency and/or lower intensity to convey laid-back timing, and, conversely, may be exploiting the anticipatory P-center effects of shorter duration, higher frequency and greater intensity to convey pushed and on-the-beat timing.

Embodied perspectives on sound and music perception (e.g., Godøy & Leman, 2010) highlight that music and motion are intrinsically related. They posit that human perception is multimodal in nature, referring to how we use multiple senses simultaneously when we explore our environment (e.g., Gibson, 1966). The integration of several modalities has been proposed as an optimal strategy for perception as it can help achieve a better understanding of the world (Ernst & Bülthoff, 2004). Some embodied perspectives emphasize that perception is an active process, something that we do, which is related to sense-making and is based on previous multimodal experiences (e.g., Noë, 2004; Shapiro, 2010; Varela et al., 2016; Van Der Schyff et al., 2018). So-called motor theories of perception further propose that sound perception includes not only processing of auditory input but also an understanding of what we believe causes a sound; that is, the listener takes into account the sound's source and/or the action that produced the sound (e.g., Berthoz, 2000; Cox, 2016; Godøy, 2003, 2010; Jensenius, 2007; Laeng et al., 2021; Liberman & Mattingly, 1985). Within this framework, sound perception is not only a matter of feature extraction based on the sound-signal but also includes knowledge of sound-source and sound-action relationships, based on previous embodied experiences of how sounds are produced.

This idea is also supported by several studies showing that motor-related areas in the brain are activated when people are listening to music (see, for example, Morillon & Baillet, 2017; Wilson & Knoblich, 2005). For example, Haueisen and Knösche (2001) found that motor-related areas of the brain associated with piano playing were also activated when pianists listened to piano music. Interestingly, Haslinger and colleagues (2005) found that the observation of silent piano playing (i.e., meaningful "sound-producing" actions without the sound itself) activated auditory areas in the brains of pianists, implying that this relation works in both directions: sound to motion and motion to sound. This relationship also seems to be influenced by expertise. In a more recent study, Endestad and colleagues (2020) found positive correlations between pupil diameters of a professional pianist during normal playing, silenced playing, listening, and imagining the same piece, which indicates an intimate link between the motor imagery of sound-producing body motions and gestures.

Along these lines, Cox (2016, p. 12) argues for the importance of mimetic behavior—both overt mimetic motor action and covert mimetic motor imagery—in music cognition. His mimetic hypothesis states that "part of how we comprehend music is by imitating, covertly or overtly, the observed sound-producing actions of performers." Godøy (2003, 2006, 2010) expands the motor theories of perception to also include the shape of the sound, suggesting that simulated sound-producing actions can both be directly related to playing an instrument and imitative of sonic shapes that are gesturally rendered. In other words, a sound that is perceived as a sonic shape may include a corresponding simulated action with a similar shape (see also, Jensenius, 2007; Jensenius et al., 2010). According to Godøy, these actions and their corresponding sound shapes usually fall into one of three main categories: impulsive, sustained, or iterative. A sustained sound-producing action with gradual onset and continuous energy transfer will produce a continuously changing, sustained sound (e.g., bowing of a violin), whereas an impulsive sound-producing action characterized by a discrete energy transfer will produce an impulsive sound with a fast sonic attack (e.g., plucking of a harp), and an iterative sound-producing action characterized by series of discontinuous energy transfers will produce a sound with series of rapid successive attacks (e.g., drumroll on a snare; for an illustration of such action-sound shapes, see figure 3.4, p. 26 in Jensenius, 2007). We perceive these action-sound shape relationships as meaningful units due to multimodal perception. Experimental studies support the idea that information from multiple sensory inputs is integrated into our sound perception. For example, visual presentation of striking motions in percussion instruments can affect the perceived duration of notes (Schutz & Lipscomb, 2007).

It has been shown that tempo can affect sound-producing motions in drum performance (Dahl, 2011). Considering the intrinsic relationship between sound and motion, it is likely that the execution of the sound-producing motions would also be affected by microrhythmic timing feel. Interestingly, in two recent studies by Câmara and colleagues (2020a, 2020b), participants reported consciously changing action/movement strategies in order to better achieve different intended timing style instructions–both in terms of sound-producing motions related to instrument (e.g., slower vs. faster and longer vs. shorter stroke movements) as well as non-sound producing/accompanying body movements, such as shifting body posture by leaning more backwards vs. forwards (see also Haugen et al., submitted). While music-related body motion encompasses various types of movement (see Jensenius et al., 2010, for an overview), in this study, we focus on exploring the movement strategies of the guitarists in terms of instrument sound-producing movements.

A typical guitar performance involves the striking of the strings with either fingers or a plectrum, which can be viewed as an "excitation" action, in combination with "modification" actions, such as the fingering hand modifying the pitch by fretting or bending (Schaeffer et al., 2017). As noted by Erdem and colleagues (2020, p. 336), normal guitar performance generally affords mainly impulsive (single strokes) or iterative (repeated multiple strokes) rather than sustained excitation actions (with the exception being the use of "extended playing techniques" or electronic effects processing units). This indeed tends to be the case in groove-based guitar performance traditions, where a typical rhythmic accompaniment style is comprised of repeated patterns spanning one or two measures with clearly articulated events involving either multiple chord strokes or single-line riffs.

In guitar performance, the striking position relative to the guitar fretboard is also often associated with timbral differences; playing at a higher position closer to the "neck" (top) tends to produce a warmer/duller "tone", while playing at a lower position closer to the "bridge" (bottom) tends to produce a sharper/brighter tone. In general, the further away from the center a string is struck, the lower the amplitude of the fundamental harmonics relative to the upper partials and the "thinner" or brighter the resultant sound (Rossing et al., 2002, p. 218). In an electric guitar, the further away from the microphones the strings are struck (i.e., closer to the neck), the greater this effect. The audio feature of spectral centroid [SC] generally accounts for the perceived brightness or sharpness of sounds (Donnadieu, 2007; Schubert & Wolfe, 2006), and in general, the brightness of a produced instrument sound tends to correlate positively with intensity (Beauchamp, 1982; Grey & Gordon, 1978). Erdem and colleagues (2020) also recently showed that in performance of single-note guitar picking, the use of louder dynamics tends to produce strokes with higher SC in general.

In this study, we investigate the relationship between production of microrhythmic timing feels and sound-producing motions in guitar playing. We limit our focus to three movement features: velocity, stroke duration, and fretboard position; and three audio/sound features: attack duration, intensity, and SC, asking the following questions:

- How do guitarists manipulate stroke movement features in order to achieve the different desired microrhythmic "timing feels" of laid-back, on-the-beat, and pushed?

- To what extent do these movement features correlate with systematic changes in the audio features of the guitar strokes?

To answer these questions, we first analyze motion capture data collected in a previously reported audio experiment conducted by Câmara and colleagues (2020a), calculating three movement features for each of the timing feel conditions. We then compare them to audio features analyzed in the previous study. Based on the findings of that study and the abovementioned literature, we expected laid-back strokes to be played with longer, more stretched out movement durations, and on-the-beat/pushed strokes with shorter durations. This is because Câmara and colleagues (2020a) found that guitarists tend to produce laid-back strokes with longer acoustic durations (both attack and decay) and pushed/on-the-beat strokes with shorter durations. Relatedly, we also expected laid-back strokes to be played with lower striking velocity than on-the-beat/pushed strokes and vice versa, since slower striking motions likely correspond with longer acoustic attack durations (in terms of longer intervals between individual notes/strings in a played chord). Finally, in line with the results of Câmara and colleagues (2020a) where strokes in the laid-back conditions were found to be played with less brightness (lower SC), we expected that laid-back strokes may be played at a higher position on the fretboard (closer to the "neck").

METHOD

Experiment Dataset

PARTICIPANTS

Twenty-one electric guitarists (three female) aged 22 to 50 (M = 32.6, SD = 7.2) participated in the experiment. All of them were active part-time or full-time musicians well versed in groove-based musical styles, and they all had between 4 and 30 years of professional performance experience (M = 12.8, SD = 7.0). Two participants were excluded from the data set (see the 'Data Preprocessing' section below), and thus data from 19 participants were analyzed in total.

APPARATUS AND PROCEDURE

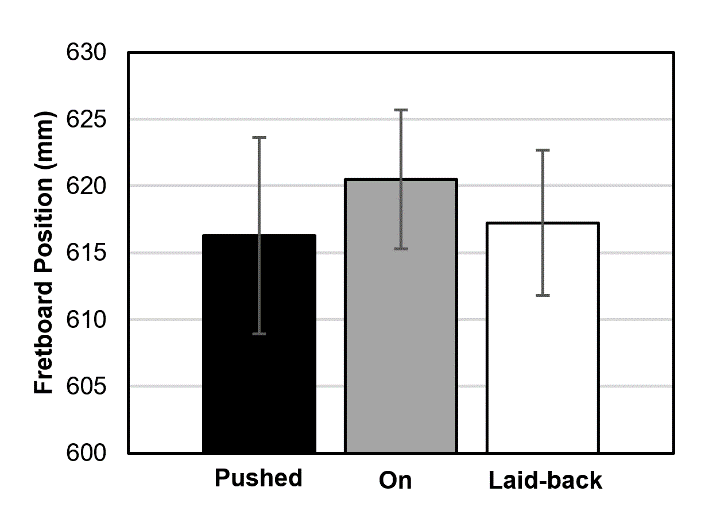

The recordings were carried out in the fourMs motion capture lab at the Department of Musicology at the University of Oslo. Both the audio of the strokes and the performers' body motion were recorded (for details of the audio analysis, see Câmara et al., 2020). The participants' body motions were recorded using an optical motion capture system [9 Oqus 300 cameras, Qualisys AB, Gothenburg, Sweden]. Reflective markers were attached both to the performers' bodies and to the instruments. The system tracked the movements of the markers at a frame rate of 400 Hz. Marker placement on the participants and instruments is presented in Figure 1. Sound was recorded using Reaper Digital Audio Workstation [ver. 5.77, Cockos Inc., San Francisco, CA]. The sound and motion were recorded simultaneously, and the two were synchronized by recording the mocap camera trigger signal on an audio track in Reaper. The performances were also video recorded for reference purposes.

Figure 1. Illustration of the placement of the reflective markers attached to the musicians and the instrument.

Each guitarist was equipped with a motion capture suit with 22 optical markers in total placed on the head (4), upper back, lower back, shoulders (2), elbows (2), wrists (2), hands (2), right hand index finger and thumb, knees (2), heels (2), and toes (2). In addition, four markers were placed to form planes on the guitar itself. After being equipped with the markers, the guitarists sat on a stool and were given time to familiarize themselves with the instrumental setup and reported when they were ready to begin.

The guitarists were instructed to play a simple "back-beat" pattern (chord strokes on beats two and four, see Figure 2), ubiquitous in popular groove-based music, at a medium tempo of 96 bpm with a plectrum of their choice. They performed along with a quarter-note metronome track comprised of percussive 'woodblock' sounds (pitched higher on beat 1, and pitched lower on beats 2, 3, and 4) in three different timing-style conditions, in randomized order:

- in a laid-back manner, or behind-the-beat relative to the metronome (condition: Laid-back)

- in a pushed manner, or ahead-of-the-beat relative to the metronome (condition: Pushed)

- synchronized with, or on-the-beat relative to, the metronome (condition: On-the-beat)

Each task lasted for approximately 67.5 seconds (27 bars) where participants began to play as soon as they had entrained with the metronome, resulting in approximately 54 strokes per trial. After the performances, we conducted short semi-structured interviews to gain insight into movement strategies that had been applied to satisfy the different timing condition tasks, asking them questions such as "what kind of body movements were required to successfully achieve each timing style" in terms of both sound-producing related to instrument playing technique and non-sound producing movements related to body posture more generally (e.g. slower vs faster, longer vs shorter, narrower vs tighter, smooth vs choppy/jerky movements), as well as "what kind of bodily sensations did the timing styles engender" (e.g. tense vs relaxed, more or less concentrated)? For more details regarding the set-up, procedure, and materials used in the experiment, see the previous work by Câmara and colleagues (2020a).

DATA PREPROCESSING

The recordings were pre-processed manually using Qualisys Track Manager. This involved verifying and correcting marker labels, and gap-filling small gaps in the recordings. Larger gaps, or gaps where the motion path was uncertain, were left unfilled. Recordings were then imported to Matlab and processed further using the MoCap Toolbox (Burger & Toiviainen, 2013) and custom scripts.

To account for differences in playing posture, height, and other factors, the raw position data were translated and rotated to a coordinate system defined by three markers on the guitar (markers 23, 24 and 26 in Figure 1), using a custom-made add-on function to the MoCap Toolbox. Essentially, we used the vector between markers 23 and 24, and extracted the perpendicular vector from that vector to marker 26. These then comprised the X and Y directions for our new coordinate system, and their intersection point our new origin.
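The construction described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the custom MoCap Toolbox add-on used in the study: the function names are hypothetical, the X axis is assumed to point from marker 23 toward marker 24, and a third axis is added as the cross product of the first two.

```python
import math

def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _unit(a):
    norm = math.sqrt(_dot(a, a))
    return [x / norm for x in a]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def guitar_frame(m23, m24, m26):
    """Origin and axes of a guitar-fixed frame (hypothetical helper).

    X runs along the line through markers 23 and 24 (direction assumed).
    Y is the perpendicular from that line to marker 26.
    The origin is the point where the two meet.
    """
    x_axis = _unit(_sub(m24, m23))
    # Foot of the perpendicular dropped from marker 26 onto the 23-24 line.
    t = _dot(_sub(m26, m23), x_axis)
    origin = [m23[i] + t * x_axis[i] for i in range(3)]
    y_axis = _unit(_sub(m26, origin))
    z_axis = _cross(x_axis, y_axis)  # assumption: right-handed third axis
    return origin, (x_axis, y_axis, z_axis)

def to_guitar_coords(point, origin, axes):
    """Express a lab-frame point in the guitar-fixed frame."""
    rel = _sub(point, origin)
    return [_dot(rel, axis) for axis in axes]
```

Applying `to_guitar_coords` to every marker position in every frame would yield trajectories that no longer depend on how each participant held the instrument.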

To analyze individual strokes, each mocap recording was separated into shorter segments. Mocap segments were extracted from 250 ms before to 250 ms after each onset. Onsets extracted from the audio features were used as references (see Câmara et al., 2020a). Each segment thus contained the preparatory phase of the stroke, the downstroke itself, and the hand returning upward after the stroke. The first five segments in each recording were removed in order to leave some time for the participant to get into a steady flow. Each segment was subsequently categorized by whether it coincided with beat 2 or 4 of the meter, and by whether the stroke was an upstroke or downstroke (clearly distinguished by the average vertical velocity around the onset point). At this stage, we noticed that most participants used upstrokes occasionally, while one played almost exclusively upstrokes and was thus removed from the dataset. Since the overall data material for upstrokes was too small for a proper analysis, we focus our attention on the downstrokes. Another participant failed to understand the task, and thus was also removed from the analyses.
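A minimal sketch of the segmentation and stroke-direction steps is given below. The 400 Hz frame rate and the ±250 ms window come from the text; the function names, the `span_frames` width used for "around the onset", and the convention that the vertical axis points toward the top strings (so that downstrokes have negative velocity) are assumptions made for illustration.

```python
FRAME_RATE_HZ = 400   # mocap frame rate reported in the text
WINDOW_MS = 250       # half-width of each segment, from the text

def extract_segment(vertical_pos, onset_s,
                    frame_rate=FRAME_RATE_HZ, window_ms=WINDOW_MS):
    """Return the frames from 250 ms before to 250 ms after an audio onset.

    Returns None when the window would run past either end of the recording.
    """
    half = round(window_ms / 1000 * frame_rate)   # 100 frames at 400 Hz
    center = round(onset_s * frame_rate)
    start, stop = center - half, center + half + 1
    if start < 0 or stop > len(vertical_pos):
        return None
    return vertical_pos[start:stop]

def is_downstroke(segment, frame_rate=FRAME_RATE_HZ, span_frames=10):
    """Classify a stroke by its mean vertical velocity around the onset.

    The onset sits at the middle of the segment. The mean velocity over an
    interval equals displacement divided by elapsed time; span_frames is a
    hypothetical choice, since the text does not state the interval used.
    """
    mid = len(segment) // 2
    lo, hi = mid - span_frames, mid + span_frames
    mean_velocity = (segment[hi] - segment[lo]) / ((hi - lo) / frame_rate)
    return mean_velocity < 0
```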

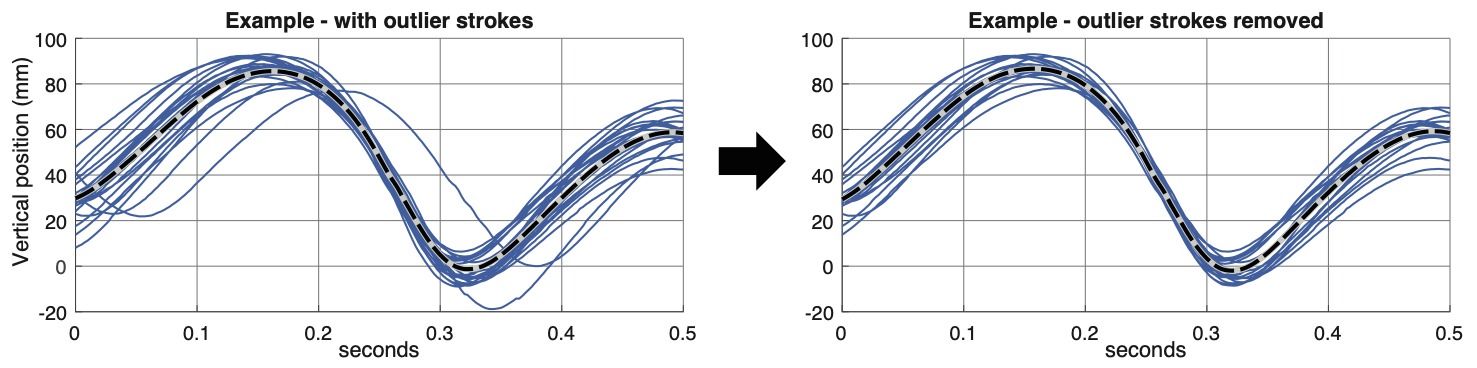

In most recordings, participants' strokes were similar within the same recording. Some recordings, however, had a few strokes in which the movement deviated. To remove such outliers from our dataset all strokes were ranked by their Euclidean distance to the mean across all strokes, and the five least typical strokes were removed from each recording (see Figure 3). After this processing, there were between 16 and 20 strokes in each recording that were used for further analysis.
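The outlier step can be illustrated as follows. This is a sketch that assumes each stroke is represented as an equal-length sequence of samples (for example, the vertical trajectory of one marker); the text does not specify the exact representation, and the function name is hypothetical.

```python
import math

def remove_atypical(strokes, n_remove=5):
    """Drop the n_remove strokes farthest (Euclidean) from the mean stroke.

    The remaining strokes are returned in their original order.
    """
    n_frames = len(strokes[0])
    mean = [sum(s[i] for s in strokes) / len(strokes) for i in range(n_frames)]

    def distance_to_mean(stroke):
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(stroke, mean)))

    # Rank stroke indices from most to least typical.
    ranked = sorted(range(len(strokes)),
                    key=lambda k: distance_to_mean(strokes[k]))
    n_keep = max(0, len(strokes) - n_remove)
    keep = sorted(ranked[:n_keep])
    return [strokes[k] for k in keep]
```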

Motion Features

STROKE VELOCITY

As a metric of stroke velocity, we used the absolute value of the trough (i.e., negative peak) value of vertical velocity of the right hand index finger (marker 14), as the strokes analyzed were all guitar "downstrokes" (struck from top to bottom strings). Values are calculated in mm/s. The higher this value, the faster the movement.
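As an illustration of this metric, the sketch below differentiates a vertical position trace and returns the absolute value of the velocity trough. The central-difference scheme and the function name are assumptions; the text does not state how velocity was derived from position.

```python
def stroke_velocity(vertical_pos_mm, frame_rate=400):
    """Absolute value of the most negative vertical velocity, in mm/s.

    Intended for downstroke segments, where the fastest part of the
    movement is a negative velocity peak (trough).
    """
    velocity = [
        (vertical_pos_mm[i + 1] - vertical_pos_mm[i - 1]) * frame_rate / 2
        for i in range(1, len(vertical_pos_mm) - 1)
    ]
    return abs(min(velocity))
```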

MOVEMENT DURATION

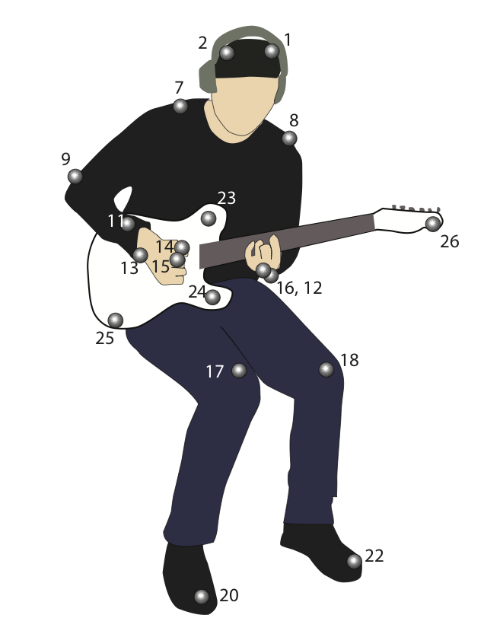

The velocity of a stroke depends both on the size of the trajectory and the duration of the movement. Determining the duration of a stroke requires determining consistent landmarks across participants (Elliott et al., 2018). However, there is great uncertainty in defining the exact beginning and end moments of a guitar stroke on the motion trajectory. Instead, we approached the duration of a stroke as a relative quantity. The movement duration measurement reflects how each participant performed the guitar strokes in the Laid-back and Pushed conditions relative to their own On-the-beat performance. That is, each participant's On-the-beat strokes are treated as baselines relative to which the timing of their Laid-back or Pushed strokes can be measured. To determine the relative movement duration, we employed dynamic time warping (DTW, Sakoe & Chiba, 1978). DTW optimally matches the features of one signal to similar features of another, resulting in a set of associations of the time points between the two time series (see Figure 4). Based on these associations, we define the duration of a stroke relative to a baseline stroke as:

where $\Delta t_{\text{baseline}} = 70$ ms is the duration corresponding to the segment following the audio onset, and $\Delta t_{\text{test}}$ is the duration of the segment associated with $\Delta t_{\text{baseline}}$ through DTW (Figure 4). Accordingly, $d$ is a dimensionless quantity where positive values correspond to strokes of longer duration than the baseline, and negative values to strokes of shorter duration. The higher the absolute value, the greater the difference from the baseline. The window of 70 ms was chosen to be slightly smaller than the average duration between the audio onset and the lowest point of the trajectory in the On-the-beat recordings (77.5 ms).
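As a worked illustration, suppose that the relative duration is the normalized difference between the two segment durations. This form is an assumption: it is one expression that is dimensionless and follows the sign convention just described, and it may not match the exact definition used in the study. With a hypothetical test segment of 100 ms matched to the 70 ms baseline window:

```latex
d = \frac{\Delta t_{\text{test}} - \Delta t_{\text{baseline}}}{\Delta t_{\text{baseline}}}
  = \frac{100\ \text{ms} - 70\ \text{ms}}{70\ \text{ms}}
  \approx 0.43
```

Under the same assumption, a test segment of 60 ms would give $d \approx -0.14$, that is, a stroke shorter than the baseline.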

Before running the DTW algorithm, the time series were z-normalized by dividing by the standard deviation of the time points ±200 ms around the audio onset. Normalization ensures that the DTW associates similar features of the signals regardless of the size of the motion trajectory. It is assumed that at the audio onset the markers should have no distance from the strings. As small displacements of the markers may occur between recordings, for instance, due to a slightly different grip of the guitar pick, the time series were vertically translated so that they coincided at the audio onset. In the DTW algorithm, the Raw-Subsequence shape descriptor of shapeDTW (Zhao & Itti, 2018) was used as an enhancement to the standard DTW algorithm. The Raw-Subsequence descriptor replaces the simple Euclidean distance between single points of two time series with the distance between short subsequences around each point. In this way, it provides a more reliable measure of the local shape similarity and results in better matching of signal features and fewer singularities in the DTW associations (multiple points of one time series associated with a single point of the other). The size of the raw subsequence was chosen based on the duration of the motion features and was set to be 5 frames (12.5 ms). It must be noted that the result of the DTW algorithm is not sensitive to different subsequence sizes.
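The idea of matching short subsequences rather than single points can be sketched as below. This is a simplified, plain-Python illustration and not the shapeDTW implementation used in the study: the function names are hypothetical, the series edges are padded by repeating the end values, the population standard deviation is used for scaling, and the step pattern is the basic three-neighbour one.

```python
import math

def scale_by_std(series):
    """Divide a series by its (population) standard deviation."""
    mean = sum(series) / len(series)
    sd = math.sqrt(sum((x - mean) ** 2 for x in series) / len(series))
    return [x / sd for x in series]

def raw_subsequences(series, width=5):
    """One window of `width` samples centred on each point (edges padded)."""
    half = width // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    return [padded[i:i + width] for i in range(len(series))]

def shape_dtw_path(a, b, width=5):
    """DTW alignment using the distance between raw subsequences.

    Returns a list of (index_in_a, index_in_b) associations.
    """
    desc_a, desc_b = raw_subsequences(a, width), raw_subsequences(b, width)
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(desc_a[i - 1], desc_b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j - 1],
                                     cost[i - 1][j],
                                     cost[i][j - 1])
    # Backtrack from the end of both series to recover the associations.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    path.reverse()
    return path
```

Given such a path, the test-stroke frames associated with the baseline window after the onset could be read off to obtain the matched segment duration.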

As each motion capture recording consists of several strokes, the movement duration d was measured for every stroke of a Laid-back or Pushed recording against all strokes of the respective On-the-beat recording. Averages were then computed: 1) across each entire Laid-back or Pushed recording (used in the ANOVA analysis), and 2) for each stroke within a Laid-back or Pushed recording (used in the correlation study).

Figure 4. Relative motion duration measurement example.

Note. DTW associates the time points of the two strokes based on their similarity (grey lines connecting the two strokes). The duration of 70 ms after the Audio Onset in the Baseline Stroke (solid horizontal line) is mapped to a similar segment of the Test Stroke (dashed horizontal line). The Audio Onset occurs at 0 ms and is marked with a *. The Test Stroke has been vertically translated in the figure for clarity.

FRETBOARD POSITION

Fretboard position was calculated as the Euclidean distance between the index finger and the guitar head marker in mm (markers 14 and 26 in Figure 1), averaged across five data frames around the onset (from 2.5 ms before the onset to 7.5 ms after the onset). The higher this value, the closer the participant played to the guitar bridge (bottom of the fretboard), and the lower this value, the closer the participant played to the guitar neck (top of the fretboard).
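A minimal sketch of this measure follows. At 400 Hz, frames are 2.5 ms apart, so the window from 2.5 ms before to 7.5 ms after the onset spans one frame before and three frames after it, five frames in all. The function name and the data layout (one xyz triple per frame for each marker) are assumptions.

```python
import math

def fretboard_position(finger_xyz, head_xyz, onset_frame, before=1, after=3):
    """Mean finger-to-guitar-head distance (mm) over five frames at the onset.

    finger_xyz and head_xyz are per-frame [x, y, z] positions in mm.
    """
    frames = range(onset_frame - before, onset_frame + after + 1)
    distances = [math.dist(finger_xyz[f], head_xyz[f]) for f in frames]
    return sum(distances) / len(distances)
```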

Audio Features

We chose to correlate the various movement features of the guitar strokes (described above) with the following audio features calculated in a previous study (see Câmara et al., 2020a for details):

- Attack Duration: calculated as the elapsed time interval of the signal from onset to maximum amplitude peak, measured in milliseconds;

- Sound Pressure Level (SPL): calculated as the unweighted root-mean-square (rms) amplitude of the signal from onset to offset, measured in dB, with a 0 dB reference given as the average rms amplitude of all strokes in all timing conditions;

- Spectral Centroid (SC): calculated as the weighted mean of the frequencies present in the signal from onset to offset, determined using a Fourier transform, with their magnitudes as the weights, measured in Hz.
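The three definitions above can be written out compactly as follows. This is an illustrative sketch rather than the analysis code of the earlier study: the function names are hypothetical, the input is assumed to be a stroke already cut from onset to offset, and the spectral centroid function takes frequencies and magnitudes that would come from a Fourier transform computed elsewhere.

```python
import math

def attack_duration_ms(samples, sample_rate):
    """Time from onset (first sample) to the maximum amplitude peak, in ms."""
    peak_index = max(range(len(samples)), key=lambda i: abs(samples[i]))
    return 1000 * peak_index / sample_rate

def rms(samples):
    """Unweighted root-mean-square amplitude."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def spl_db(samples, reference_rms):
    """Level in dB relative to a reference rms amplitude.

    In the text, the reference is the average rms of all strokes in all
    timing conditions.
    """
    return 20 * math.log10(rms(samples) / reference_rms)

def spectral_centroid_hz(frequencies_hz, magnitudes):
    """Magnitude-weighted mean of the frequencies present in the signal."""
    weighted = sum(f * m for f, m in zip(frequencies_hz, magnitudes))
    return weighted / sum(magnitudes)
```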

Statistical Analysis

To gauge the differences in movement features between different timing conditions, we conducted repeated measures analysis of variance (RMANOVA) with the three timing conditions (Laid-back, On-the-beat, and Pushed) as the within-subjects factor. Residuals were manually screened for normality via histograms and Q-Q plots for each dependent variable, and none showed departure from normality. Significant main effects were followed up with post hoc paired samples t-tests, which were Bonferroni-corrected for multiple comparisons. To assess the correlation between movement and audio features of the guitar strokes, Pearson's correlation tests were run. The correlations were calculated using all of the guitar strokes from all the participants per timing condition, for each audio feature. All statistical analyses were performed using SPSS (version 28, IBM, Inc., New York).
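For readers less familiar with these procedures, the sketch below shows the textbook form of Pearson's correlation coefficient and of a Bonferroni adjustment. It is not the SPSS implementation used for the analyses, and the function names are hypothetical. The adjustment is shown in the common form where each p value is multiplied by the number of comparisons and capped at 1.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    k = len(p_values)
    return [min(1.0, p * k) for p in p_values]
```

With three pairwise comparisons, for instance, an uncorrected p value of .5 would be reported as 1.000 after adjustment.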

RESULTS

Movement Features

Below are the results for the statistical tests of difference between timing style conditions and movement features. For an overview of the descriptive statistics and complete results, see Table 1.

| DV | F | df | df(err.) | p | ηp2 | Pushed | On | Laid-back | |||

|---|---|---|---|---|---|---|---|---|---|---|---|

| M | (SE) | M | (SE) | M | (SE) | ||||||

| Velocity (mm/s) | 5.67 | 2.00 | 36.00 | .007 | 0.24 | 1246 | (87) | 1296 | (81) | 1056 | (84) |

| Movement Duration | 6.96 | 1.00 | 18.00 | .017 | 0.28 | 0.135 | (0.075) | - | - | 0.432 | (0.093) |

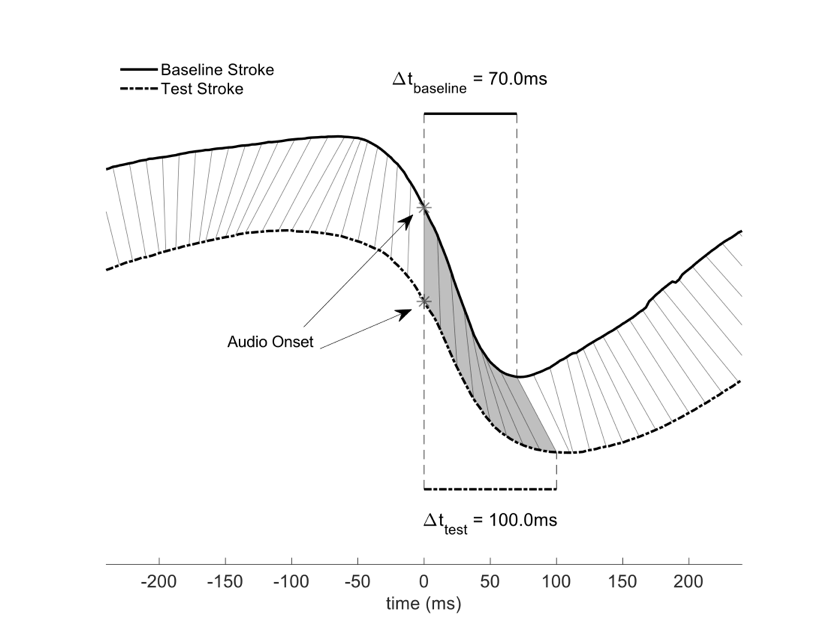

| Fretboard Position (mm) | 0.85 | 2.00 | 36.00 | .435 | 0.05 | 616 | (7) | 620 | (5) | 617 | (5) |

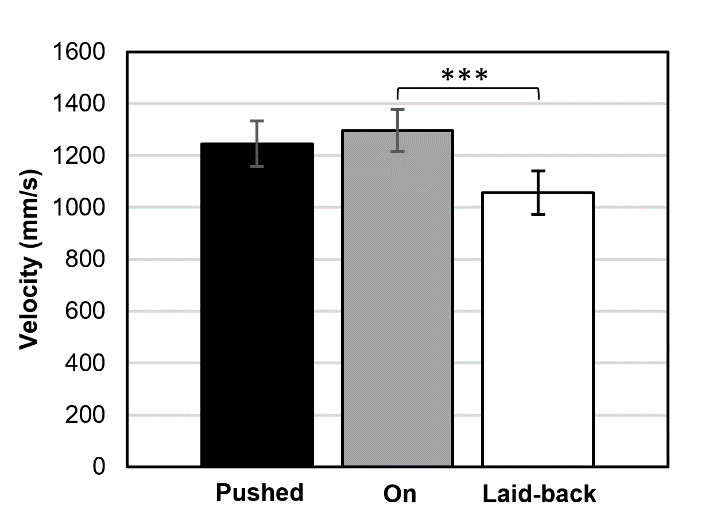

STROKE VELOCITY

A one-way RMANOVA (N=19) was conducted with Timing (Laid-back, On-the-beat, Pushed) as the within-subjects independent variable and stroke velocity as the dependent variable. We found a main effect of Timing, where pairwise comparisons between timing conditions showed that laid-back strokes were played significantly slower than on-the-beat strokes (mean difference = 240 mm/s, p = .021; see Figure 5). The differences between laid-back and pushed strokes (mean difference = 189 mm/s, p = .131) and between pushed and on-the-beat strokes (mean difference = 50 mm/s, p = 1.000) were not significant.

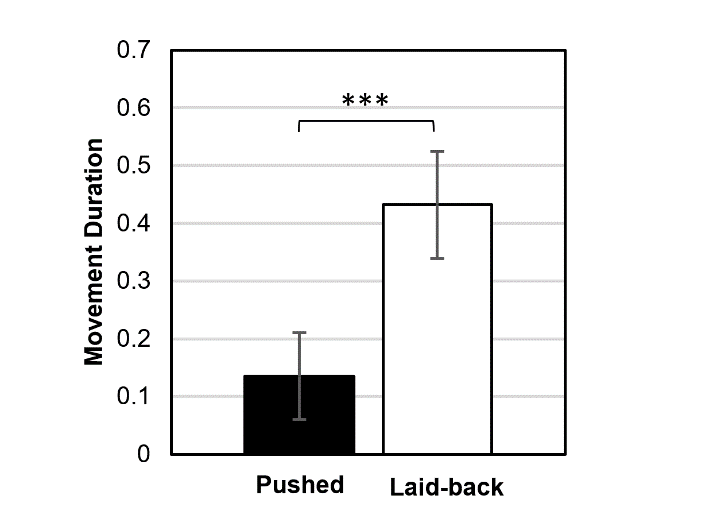

MOVEMENT DURATION

To investigate the relative movement duration of the downstrokes for the different conditions, we conducted a one-way RMANOVA (N=19) with Timing (Laid-back, Pushed) as independent variable and mean duration as the dependent variable. We found a main effect of Timing, where laid-back strokes were significantly longer than pushed (mean difference = .297; see Figure 6).

FRETBOARD POSITION

A one-way RMANOVA (N=19) was conducted with Timing (Laid-back, On-the-beat, Pushed) as within-subjects independent variable and Fretboard Position as the dependent variable. We found no main effect of Timing on Fretboard Position (see Figure 7).

Correlation between Movement and Audio Features

Below are the results for the correlation tests between movement and audio features in different timing style conditions, using values for all guitar strokes and averages across all participants. For a complete overview of these results, see Table 2.

| Movement Feature | Timing | Attack Duration R | Attack Duration p | Attack Duration N | Intensity (SPL) R | Intensity (SPL) p | Intensity (SPL) N | Brightness (SC) R | Brightness (SC) p | Brightness (SC) N |
|---|---|---|---|---|---|---|---|---|---|---|
| Velocity | Laid-back | -.49*** | <.001 | 677 | .31*** | <.001 | 677 | .28*** | <.001 | 677 |
| Velocity | On | -.47*** | <.001 | 646 | .00 | .929 | 646 | .20*** | <.001 | 646 |
| Velocity | Pushed | -.47*** | <.001 | 682 | -.11** | .006 | 682 | .48*** | <.001 | 682 |
| Movement Duration | Laid-back | .50*** | <.001 | 680 | .11** | .003 | 680 | .06 | .10 | 680 |
| Movement Duration | Pushed | .42*** | <.001 | 682 | .16*** | <.001 | 682 | -.06 | .11 | 682 |
| Fretboard Position | Laid-back | -.40*** | <.001 | 676 | .36*** | <.001 | 676 | -.01 | .875 | 676 |
| Fretboard Position | On | .27*** | <.001 | 645 | .14*** | <.001 | 645 | -.12** | .003 | 645 |
| Fretboard Position | Pushed | .28*** | <.001 | 682 | .26*** | <.001 | 682 | -.05 | .215 | 682 |

Note. * p < .05, ** p < .01, *** p < .001

STROKE VELOCITY

For Attack Duration vs. Stroke Velocity, negative, moderate correlations (-0.7 < R ≤ -0.3) were found for all three timing conditions, where higher absolute velocity (faster striking movement) corresponded with shorter attack duration.
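The verbal labels used in this section can be made explicit with a small helper. The 0.3 and 0.7 cut-offs follow the ranges given in the text for weak and moderate correlations; the "strong" label for values of 0.7 and above, and the function name itself, are assumptions added for completeness.

```python
def correlation_strength(r, weak_below=0.3, strong_from=0.7):
    """Label a correlation coefficient by the size of its absolute value."""
    size = abs(r)
    if size < weak_below:
        return "weak"
    if size < strong_from:
        return "moderate"
    return "strong"  # assumed label; no such value occurs in Table 2
```

For example, the velocity and attack duration coefficient of -.49 in the Laid-back condition falls in the moderate band, while the velocity and intensity coefficient of -.11 in the Pushed condition falls in the weak band.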

Regarding Intensity vs. Stroke Velocity, a moderate positive correlation was found for Laid-back, where lower velocity (slower striking movement) corresponded with lesser intensity (lower SPL), and a weak negative correlation (-0.3 < R < 0) was found for Pushed strokes, where higher velocity (faster striking movement) corresponded with lesser intensity (lower SPL).

For Brightness vs. Stroke Velocity, a moderate positive correlation was found in the Pushed condition, and weak positive correlations were found for On-the-beat and Laid-back, where higher velocity (faster striking movement) corresponded with a brighter sound (higher SC).

MOVEMENT DURATION

For Attack Duration vs. Movement Duration, moderate positive correlations were found for both Laid-back and Pushed conditions, where longer movement duration corresponded with longer acoustic attack duration.

Weak positive correlations were found between Movement Duration and Intensity in the Laid-back and Pushed conditions, where longer movement duration corresponded with higher intensity. No significant correlation was found with Brightness.

FRETBOARD POSITION

For Attack Duration vs. Fretboard Position, a moderate negative correlation was found for Laid-back, where a striking position closer towards the neck (lesser distance between striking hand and the top of the guitar) corresponded with longer acoustic attack duration, whereas weak positive correlations were found for On-the-beat and Pushed, where a striking position closer towards the bridge (greater distance between striking hand and the top of the guitar) corresponded with longer acoustic attack duration.

For Intensity vs. Fretboard Position, weak to moderate positive correlations were found for the three timing conditions, where a striking position closer towards the bridge corresponded with greater intensity (higher SPL).

Regarding the correlation between Fretboard Position and Spectral Centroid, neither Pushed nor Laid-back showed any significant correlations–only On-the-beat showed a weak negative correlation where a striking position closer towards the bridge corresponded with a darker sound (lower SC).

DISCUSSION AND CONCLUSION

The results show that guitarists utilized systematic differences in the movement features of stroke velocity and duration to achieve the different microrhythmic timing feels (laid-back, on-the-beat, pushed). Similar to the case of acoustic sound production, where musicians do not just simply shift the temporal onset position of strokes earlier or later in time to achieve different timing feels but also systematically manipulate other acoustic features (such as duration, intensity or brightness), guitarists do not simply utilize the same type of movements played at earlier or later temporal positions, but rather also systematically manipulate other movement features such as stroke velocity and duration to express these feels. These findings expand upon the hypothesis proposed by Danielsen and colleagues (2015) that both timing and acoustic sound features are fundamental to the production and perception of microrhythm in groove-based performance, further showing that movement features also play an important role.

Regarding the movement features, we found that the guitarists displayed a tendency to use both slower (lower velocity) and longer (greater duration) movements when playing impulsive chord strokes in laid-back fashion, and faster (greater velocity) and shorter (lower duration) movements when playing on-the-beat and/or pushed. Previously in Câmara and colleagues (2020a), we found that intentionally laid-back strokes tend to be produced with longer acoustic durations, especially in the attack segments (signal onset to max. amplitude peak), and both on-the-beat and pushed strokes produced with shorter acoustic durations. As such, longer acoustic durations achieved in intentional laid-back playing appear to be achieved in part by playing with both slower and longer striking movements, and shorter acoustic attacks with faster and shorter striking movements. This was supported by the moderate significant correlations found between acoustic attack duration and both striking velocity and movement duration, in all the timing conditions: strokes with longer movement duration and lower striking velocity were both correlated with longer acoustic attack durations. Overall, these findings are the first of their kind to demonstrate the particular action-sound couplings involved in performance of timing feel in groove-based microrhythm. Microrhythm involves not only changes to onset timing, but also action-sound shapes, that is, couplings between acoustic features and how they are produced. These action-sound shapes differ systematically between the timing conditions in accordance with the auditory features: laid-back sounds, which were found to have longer auditory duration, were produced by slower/longer sound-producing motions, and early and on-the-beat sounds, which were found to have shorter auditory duration, were produced by faster/shorter sound-producing motions. 
In short, our results show that playing in a laid-back, on-the-beat, or pushed manner produces systematic changes in action-sound shapes.
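To make the two movement features discussed here more concrete, the sketch below derives a peak speed and a movement duration from a sampled marker trajectory. It is only one possible operationalization: the function name, the speed-threshold definition of movement duration, and the units are assumptions made for illustration and are not the feature definitions used in the study.

```python
import numpy as np

def stroke_features(positions, fs, speed_threshold):
    """Estimate peak speed and movement duration for a single stroke.

    positions: array of shape (n_samples, 3), marker coordinates in metres
    fs: sampling rate in Hz
    speed_threshold: speed (m/s) above which the hand counts as moving (assumed criterion)
    """
    positions = np.asarray(positions, dtype=float)
    # Finite-difference velocity along the time axis, then its magnitude.
    velocity = np.gradient(positions, 1.0 / fs, axis=0)
    speed = np.linalg.norm(velocity, axis=1)
    moving = speed > speed_threshold
    if not moving.any():
        raise ValueError("speed never exceeds the threshold")
    peak = int(np.argmax(speed))
    # Grow the window outwards from the speed peak while the hand is still moving.
    start = peak
    while start > 0 and moving[start - 1]:
        start -= 1
    end = peak
    while end < len(speed) - 1 and moving[end + 1]:
        end += 1
    return {
        "peak_speed_m_s": float(speed[peak]),
        "duration_s": (end - start + 1) / fs,
    }
```

Under these assumptions, a slower and longer stroke yields a lower `peak_speed_m_s` and a larger `duration_s` than a faster and shorter one.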

While Câmara and colleagues (2020a) did not find that guitarists utilized differences in produced stroke loudness to distinguish between timing feels, here we found weak to moderate significant correlations between striking velocity and acoustic intensity. Slower movements led to softer sound, though mostly in the laid-back condition. Such a relationship is to be expected, since the guitar can be considered a system of coupled oscillators (Rossing et al., 2002), where the plucked strings, which radiate only a small amount of sound directly, excite a second sound system in the form of the bridge and top plate in the pick-up microphones. A higher striking velocity can therefore influence the intensity of the produced guitar sound by stretching the strings more: greater mechanical energy means stronger string vibrations, which in turn cause stronger excitation of the secondary system, and thus louder sounds. The velocity differences here, however, were simply not large enough to produce much of a physical difference in acoustic intensity (or, by extension, in perceptual loudness).
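To give a sense of scale, the sketch below converts a ratio of sound pressure amplitudes into a level difference in decibels using the standard relation ΔL = 20·log10(p2/p1). It assumes, purely for illustration, that sound pressure amplitude scales in proportion to striking velocity; this simplification is not taken from the study, and the function name is hypothetical.

```python
import math

def level_change_db(amplitude_ratio):
    """Level difference in dB for a given ratio of sound pressure amplitudes."""
    if amplitude_ratio <= 0:
        raise ValueError("amplitude_ratio must be positive")
    return 20.0 * math.log10(amplitude_ratio)

# Hypothetical amplitude ratios, chosen only to illustrate the scale of the effect.
for ratio in (1.05, 1.10, 2.00):
    print(f"amplitude x{ratio:.2f} -> {level_change_db(ratio):+.2f} dB")
```

Under this assumption, a 10% increase in amplitude corresponds to a level increase of less than 1 dB, whereas a doubling corresponds to roughly 6 dB, which is consistent with modest velocity differences producing only small differences in measured intensity.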

As for striking position on the fretboard, we did not find any evidence that guitarists systematically played either closer to the bridge or closer to the neck in any timing condition. Furthermore, we did not find any significant correlations between fretboard position and acoustic brightness (SC) in the intentionally asynchronous timing conditions (Laid-back and Pushed), but instead only a weak correlation in the On-the-beat condition, where, contrary to expectation, lower SC was produced at positions closer to the guitar bridge (bottom) than at positions closer to the neck (head). As such, at least in the context of our experiment, we found no evidence that differences in brightness between timing conditions are related to the striking of the strings at different vertical fretboard positions. This is somewhat surprising, considering that Câmara and colleagues (2020a) found that laid-back strokes tended to be played with lower SC and pushed/on-the-beat strokes with higher SC, which were purported to increase the perceptual salience of produced asynchronies by further attenuating or enhancing distances between guitar and reference sounds in combination with intentional early, on-beat, or late onset timing. Alternative explanations for the achieved differences in stroke brightness therefore still need to be explored, such as the possibility of greater or lesser emphasis placed upon either the lower-pitched notes/strings (which might lead to a darker sound) or the higher-pitched notes/strings (which might lead to a brighter sound) of a chord during a stroke. Such emphasis might be achieved by selectively aiming the plectrum toward particular strings, or by partially muting the strings with the fretting hand, and would thus need to be investigated via alternative methods that measure contact and/or striking intensity of the plectrum with the strings, data which we were unable to collect here.

Research has shown that sound-producing gestures influence the perception of the sound (see, for example, Davidson, 1993; Wanderley et al., 2005). Accordingly, performers use gestures to communicate musical features, whether consciously or unconsciously. One could thus think that part of the differences in motion between conditions in the present study was due to cross-domain emphasis; that is, a tendency to emphasize the auditory timing profiles through visual movement. Given the design of our study, it is difficult to assess whether the differences are due to biomechanical aspects only, or if the need to communicate timing profiles through gestures also plays a role. Although the tasks were done without any audience, it may be the case that professional musicians' communicative movements are so automatized that they are still performed even when no one is watching. The gestures investigated in the present study, however, are quite small and thus likely not very effective in visually communicating timing feels to an audience. We thus lean towards cross-domain emphasis playing a minor role in this case.

In conclusion, this study demonstrates the importance of sound-producing motions to the expression of microrhythmic timing feels in groove-based performance. When playing with deliberate timing feels either behind, ahead of, or on-the-beat, expert guitarists adapt their striking movements to produce different types of sounds that may facilitate their perceived intentionally synchronous or asynchronous timing characteristics (slower, longer movements to produce laid-back sounds with longer acoustic durations, and faster, shorter movements to produce pushed and on-the-beat sounds with shorter acoustic durations). The results shed new light on how such timing profiles can be produced and provide further support to the previously reported auditory findings.

The results also have pedagogical value as they reveal important facets of the tacit knowledge that skilled, professional musicians accumulate through extended practice. As such, students of groove-based performance may capitalize upon this knowledge to improve, or expand upon, their performance skills in terms of suitable action-sound couplings for different microrhythmic timing feels. For example, guitarists attempting to achieve a successful laid-back feel might try to think not simply in terms of positioning the onsets of their strokes late relative to a timing reference, but also in terms of playing strokes with both slower and longer movements (and vice versa for a pushed feel).

One limitation of the study is that we do not know if the action-sound couplings for microrhythmic feels found here are specific to guitar performance or whether they might extend to other instruments. Another is that, due to the study design, we were unable to distinguish between biomechanical adjustments in playing style, and the aspects of the movements that may aid in communicating the different feels to an audience. In the future, we plan to investigate how timing feel affects acoustic and movement features in other salient groove-based rhythm-section instruments (e.g., drums, bass), as well as the extent to which visual cues affect the perceived timing of instrumental strokes in performance.

ACKNOWLEDGEMENTS

The authors wish to thank all the participating guitarists, and Victor Gonzales Sanchez for his assistance with the experimental setup. This work was partially supported by the Research Council of Norway through its Centers of Excellence scheme, Project No. 262762 and the TIME (Timing and Sound in Musical Microrhythm) project, Grant No. 249817. This article was copyedited by Tanushree Agrawal and layout edited by Jonathan Tang.

NOTES

- Correspondence can be addressed to Dr. Guilherme Schmidt Câmara, RITMO Center for Interdisciplinary Studies in Rhythm, Time, and Motion, University of Oslo, P.O. Box 1133 Blindern, 0318 Oslo, Norway, g.s.camara@imv.uio.no

- The script can be accessed at: https://github.com/krisny/mct-extras/blob/master/mcrotate2vectorandpoint.m

REFERENCES

- Beauchamp, J. W. (1982). Synthesis by spectral amplitude and 'brightness' matching of analyzed musical instrument tones. Journal of the Audio Engineering Society, 30, 396-406.

- Berthoz, A. (2000). The brain's sense of movement. Harvard University Press.

- Burger, B., & Toiviainen, P. (2013). MoCap Toolbox – A Matlab toolbox for computational analysis of movement data. Proceedings of the 10th Sound and Music Computing Conference (SMC), Stockholm, Sweden: KTH Royal Institute of Technology.

- Butterfield, M. W. (2006). The power of anacrusis: Engendered feeling in groove-based musics. Music Theory Online, 12(4), 1-17. https://doi.org/10.30535/mto.12.4.2

- Butterfield, M. W. (2010). Participatory discrepancies and the perception of beats in jazz. Music Perception, 27(3), 157-176. https://doi.org/10.1525/mp.2010.27.3.157

- Câmara, G. S. (2021). Timing Is Everything… Or Is It? Investigating Timing and Sound Interactions in the Performance of Groove-Based Microrhythm. [Doctoral Dissertation, University of Oslo]. University of Oslo Research Archive. http://urn.nb.no/URN:NBN:no-91219

- Câmara, G. S., & Danielsen, A. (2019). Groove. In A. Rehding & S. Rings (Eds.), The Oxford handbook of critical concepts in music theory (pp. 271–294). Oxford, UK: Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190454746.013.17

- Câmara, G. S., Nymoen, K., Lartillot, O., & Danielsen, A. (2020a). Effects of instructed timing on electric guitar and bass sound in groove performance. Journal of the Acoustical Society of America, 147(2), 1028-1041. https://doi.org/10.1121/10.0000724

- Câmara, G. S., Nymoen, K., Lartillot, O., & Danielsen, A. (2020b). Timing Is Everything…Or Is It? Effects of Instructed Timing Style, Reference, and Pattern on Drum Kit Sound in Groove-Based Performance. Music Perception, 38(1), 1-26. https://doi.org/10.1525/mp.2020.38.1.1

- Cox, A. (2016). Music and Embodied Cognition. Listening, Moving, Feeling, and Thinking. Bloomington, Indiana: Indiana University Press. https://doi.org/10.2307/j.ctt200610s

- Dahl, S. (2011). Striking movements: A survey of motion analysis of percussionists. Acoustical Science and Technology, 32(5), 168-173. https://doi.org/10.1250/ast.32.168

- Danielsen, A. (2010). Here, there and everywhere: Three accounts of pulse in D'Angelo's "Left and right." In A. Danielsen (Ed.), Musical rhythm in the age of digital reproduction (pp. 19–34). Ashgate.

- Danielsen, A. (2018). Pulse as dynamic attending: Analysing beat bin metre in neo soul grooves. In C. Scotto, K. M. Smith, & J. Brackett (Eds.), The Routledge companion to popular music analysis: Expanding approaches (pp. 179–189). Routledge. https://doi.org/10.4324/9781315544700-12

- Danielsen, A., Nymoen, K., Anderson, E., Câmara, G. S., Langerød, M. T., Thompson, M. R., & London, J. (2019). Where is the beat in that note? Effects of attack, duration, and frequency on the perceived timing of musical and quasi-musical sounds. Journal of Experimental Psychology: Human Perception and Performance, 45(3). https://doi.org/10.1037/xhp0000611

- Danielsen, A., Waadeland, C. H., Sundt, H. G., & Witek, M. A. G. (2015). Effects of instructed timing and tempo on snare drum sound in drum kit performance. Journal of the Acoustical Society of America, 138(4), 2301-2316. https://doi.org/10.1121/1.4930950

- Davidson, J. W. (1993). Visual perception of performance manner in the movements of solo musicians. Psychology of Music, 21(2), 103-113. https://doi.org/10.1177/030573569302100201

- Donnadieu, S. (2007). Mental representation of the timbre of complex sounds. In J. W. Beauchamp (Ed.), Analysis, Synthesis, and Perception of Musical Sounds (pp. 272–319). Springer New York. https://doi.org/10.1007/978-0-387-32576-7_8

- Endestad, T., Godøy, R. I., Sneve, M. H., Hagen, T., Bochynska, A., & Laeng, B. (2020). Mental effort when playing, listening, and imagining music in one pianist's eyes and brain. Frontiers in Human Neuroscience, 14, 416. https://doi.org/10.3389/fnhum.2020.576888

- Erdem, C., Lan, Q., & Jensenius, A. (2020). Exploring relationships between effort, motion, and sound in new musical instruments. Human Technology, 16, 310–347.

- Ernst, M. O., & Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends in Cognitive Sciences, 8(4), 162-169. https://doi.org/10.1016/j.tics.2004.02.002

- Elliott, M. T., Ward, D., Stables, R., Fraser, D., Jacoby, N., & Wing, A. M. (2018). Analyzing multi-person timing in music and movement: Event based methods. In A. Vatakis, F. Balci, M. Di Luca, & Á. Correa (Eds.), Timing and Time Perception: Procedures, Measures, and Applications (pp. 177-215). Brill. https://doi.org/10.1163/9789004280205_010

- Gibson, J. J. (1966). The senses considered as perceptual systems. Houghton Mifflin.

- Godøy, R. I. (2003). Motor-mimetic music cognition. Leonardo, 36(4), 317-319. https://doi.org/10.1162/002409403322258781

- Godøy, R. I. (2006). Gestural-sonorous objects: Embodied extensions of Schaeffer's conceptual apparatus. Organised Sound, 11(02), 149-157. https://doi.org/10.1017/S1355771806001439

- Godøy, R. I. (2010). Gestural affordances of musical sound. In R. I. Godøy & M. Leman (Eds.), Musical gestures: Sound, movement, and meaning (pp. 103–125). Routledge New York, NY. https://doi.org/10.4324/9780203863411

- Godøy, R. I., & Leman, M. (2010). Musical gestures: sound, movement, and meaning. Routledge. https://doi.org/10.4324/9780203863411

- Gordon, J. W. (1987). The perceptual attack time of musical tones. Journal of the Acoustical Society of America, 82(1), 88-105. https://doi.org/10.1121/1.395441

- Grey, J. M., & Gordon, J. W. (1978). Perceptual effects of spectral modifications on musical timbres. Journal of the Acoustical Society of America, 63, 1493-1500. https://doi.org/10.1121/1.381843

- Haslinger, B., Erhard, P., Altenmüller, E., Schroeder, U., Boecker, H., & Ceballos-Baumann, A. O. (2005). Transmodal sensorimotor networks during action observation in professional pianists. Journal of Cognitive Neuroscience, 17(2), 282-293. https://doi.org/10.1162/0898929053124893

- Haueisen, J., & Knösche, T. R. (2001). Involuntary motor activity in pianists evoked by music perception. Journal of Cognitive Neuroscience, 13(6), 786-792. https://doi.org/10.1162/08989290152541449

- Haugen, M. R., Câmara, G. S., Nymoen, K., & Danielsen, A. (in press). Instructed Timing and Body Posture in Guitar and Bass Playing in Groove Performance. Musicae Scientiae.

- Iyer, V. (2002). Embodied mind, situated cognition, and expressive microtiming in African-American music. Music Perception, 19(3), 387-414. https://doi.org/10.1525/mp.2002.19.3.387

- Jensenius, A. R. (2007). Action - sound: developing methods and tools to study music-related body movement. [Doctoral Dissertation, University of Oslo]. University of Oslo Research Archive. http://urn.nb.no/URN:NBN:no-18922

- Jensenius, A. R., Wanderley, M. M., Godøy, R. I., & Leman, M. (2010). Musical gestures. Concepts and methods in research. In R. I. Godøy & M. Leman (Eds.), Musical gestures: Sound, movement, and meaning (pp. 12-35). Routledge New York.

- Kilchenmann, L., & Senn, O. (2011). "Play in time, but don't play time": Analyzing timing profiles in drum performances. In A. Williamon, D. Edwards, & L. Bartel (Eds.), Proceedings of the International Symposium on Performance Science 2011 (pp. 593-598). European Association of Conservatoires.

- Laeng, B., Kuyateh, S., & Kelkar, T. (2021). Substituting facial movements in singers changes the sounds of musical intervals. Scientific Reports, 11(1), 22442. https://doi.org/10.1038/s41598-021-01797-z

- Liberman, A. M., & Mattingly, I. G. (1985). The motor theory of speech perception revised. Cognition, 21(1), 1-36. https://doi.org/10.1016/0010-0277(85)90021-6

- Morillon, B., & Baillet, S. (2017). Motor origin of temporal predictions in auditory attention. Proceedings of the National Academy of Sciences, 114(42), E8913. https://doi.org/10.1073/pnas.1705373114

- Noë, A. (2004). Action in perception. MIT Press.

- Rossing, T. D., Moore, R. F., & Wheeler, P. A. (2002). The science of sound (3rd ed.). Addison Wesley.

- Sakoe, H., & Chiba, S. (1978). Dynamic programming algorithm optimization for spoken word recognition. IEEE Transactions on Acoustics, Speech and Signal Processing, 26(1), 43–49. https://doi.org/10.1109/TASSP.1978.1163055

- Schaeffer, P., North, C., & Dack, J. (2017). Treatise on musical objects: An essay across disciplines (1st ed.). University of California Press.

- Schubert, E., & Wolfe, J. (2006). Does timbral brightness scale with frequency and spectral centroid? Acta Acustica united with Acustica, 92, 820-825.

- Schutz, M., & Lipscomb, S. (2007). Hearing gestures, seeing music: vision influences perceived tone duration. Perception, 36(6), 888-897. https://doi.org/10.1068/p5635

- Shapiro, L. (2010). Embodied Cognition (2nd ed.). Routledge. https://doi.org/10.4324/9780203850664

- Van Der Schyff, D., Schiavio, A., Walton, A., Velardo, V., & Chemero, A. (2018). Musical Creativity and the Embodied Mind: Exploring the Possibilities of 4E Cognition and Dynamical Systems Theory. Music & Science, 1. https://doi.org/10.1177/2059204318792319

- Varela, F. J., Thompson, E., & Rosch, E. (2016). The embodied mind: cognitive science and human experience (Revised ed.). MIT Press. https://doi.org/10.7551/mitpress/9780262529365.001.0001

- Villing, R. C. (2010). Hearing the moment: Measures and models of the perceptual centre. [Doctoral Dissertation, National University of Ireland]. Maynooth University Research Archive Library. https://mural.maynoothuniversity.ie/2284/

- Wanderley, M. M., Vines, B. W., Middleton, N., McKay, C., & Hatch, W. (2005). The Musical Significance of Clarinetists' Ancillary Gestures: An Exploration of the Field. Journal of New Music Research, 34(1), 97-113. https://doi.org/10.1080/09298210500124208

- Wilson, M., & Knoblich, G. (2005). The case for motor involvement in perceiving conspecifics. Psychological Bulletin, 131(3), 460-473. https://doi.org/10.1037/0033-2909.131.3.460

- Zhao, J., & Itti, L. (2018). shapeDTW: Shape dynamic time warping. Pattern Recognition, 74(c), 171-184. https://doi.org/10.1016/j.patcog.2017.09.020