CONSIDER a sigh. This gesture features an audible vocalization as a result of a decrease in subglottal air pressure and, more generally, is a social signifier of dissatisfaction or exhaustion ('t Hart, Collier & Cohen, 1990). Now imagine the same sound, but with more emotional and physical intensity, perhaps accompanied by tears. The vocalization would likely transcend its status as a "sigh" and be considered "wailing" or a "lament." Even with the shift in intensity, however, one feature remains constant between the two: pitch declination, i.e., a decrease of fundamental frequency.

Lament as a signifier of grief and its association with descending pitch has manifested in various musical and performative contexts across cultures. Commenting on the musical elements of Hungarian laments, composer and ethnomusicologist Zoltán Kodály wrote:

The inspirational aspect results in sections of unequal length ending with pauses, irregular repetition of melodic phrases, and a melodic structure tied to the freely flowing prose and consisting of two descending lines […] (as quoted in Wilce, 2009)

Here, Kodály regards the nature of Hungarian laments as largely improvisatory, but the descending musical line is consistent across performances. Kodály thus formally observes the relationship between sadness (as conveyed by the lyrics) and descending pitch in grief-specific musical performances.

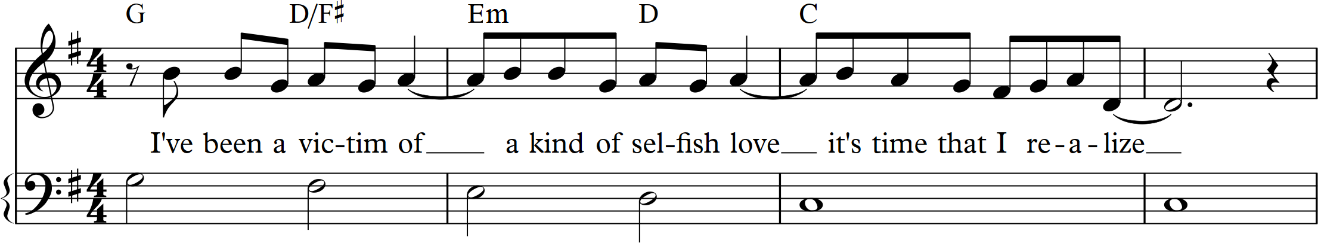

In Western classical music, the lament topic is perhaps most recognizable in opera. Consider the aria "When I am laid in earth," from Henry Purcell's Dido and Aeneas (1680) (Figure 1). In the events leading up to the relevant scene, Dido and Aeneas engage in a fiery argument about Aeneas's duty to the Trojan fleet, unknowingly under the manipulation of a plotting sorceress. The quarrel concludes with an ultimatum from Dido: Aeneas must leave Carthage to fulfill the "gods' decree" of establishing a new city of Troy in Italy, or she will take her own life. This forces Aeneas to depart, leaving Dido alone to contemplate her inevitable death in the following scene. The aria is set in the minor mode, first in C minor then in G minor, features chromatic inflection (i.e., pitch movement by half-step) on particularly macabre words such as "darkness" and "death," and is supported by a similarly chromatic descending line in the bass instruments. Through a captivating harmonic analysis, Schmalfeldt (2001) remarks: "There can be no question that her long, slow, fundamentally stepwise descent metaphorically summarizes her inevitable descent toward the grave over the span of Purcell's three acts" (p. 604). This "descent" is captured quite literally by the lament bass pattern (Figure 1). 2

Caplin (2014) argues the lament is unique amongst musical topics in that it is defined by a schema (a frequently employed contrapuntal-harmonic pattern that underpins a musical passage; Gjerdingen, 2007) that is explicitly associated with the bass voice. Specifically, "[…] whereas most schemata embrace both an upper-voice melody and bass melody, the lament schema is defined essentially by its stepwise descending bass; no one melodic pattern emerges as a conventional counterpoint to this bass line. In short, we can say that the lament topic is defined by the lament schema and the lament schema is defined by its bass" (pp. 415–16). He continues by describing how the "descending tetrachord" (i.e., a stepwise descending bass pattern spanning a perfect fourth) came to be associated with mourning in mid-seventeenth century music. However, Caplin also admits that the schema does not necessarily connote sadness and thus limits his analyses to the minor mode. In this respect, others such as Williams (1997) refer to the schema more neutrally as a "chromatic fourth" or "descending tetrachord."

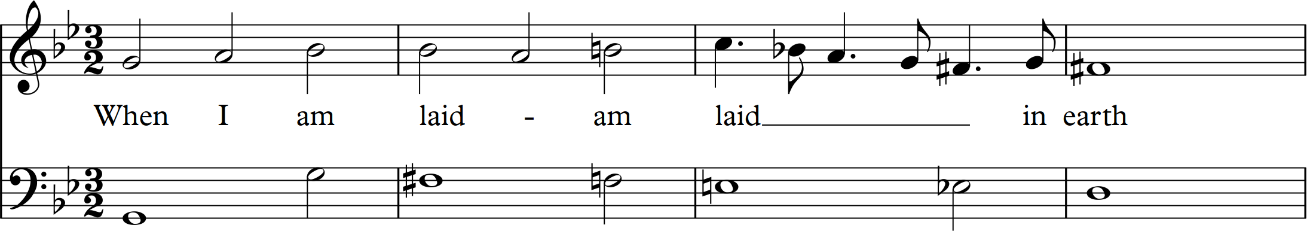

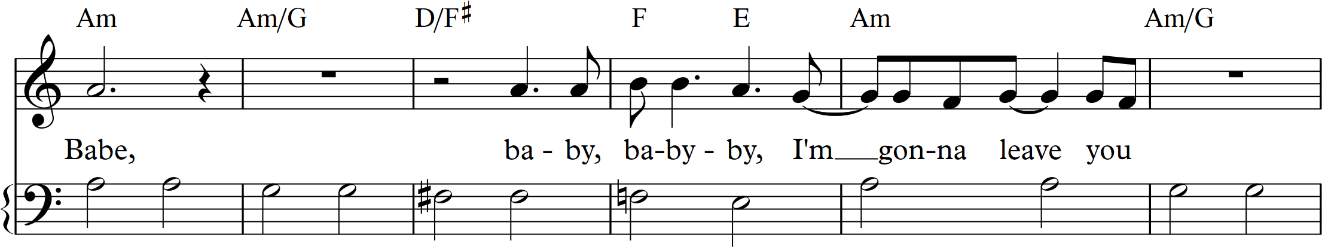

The lament bass topic has yet to be linked explicitly to sadness in Western popular music scholarship, but Christopher Doll (2017) has categorized a number of descending bass schemata in the style. Consider the song "Babe I'm Gonna Leave You" (1969) by the rock band Led Zeppelin in Figure 2. The bass line is in the minor mode and accompanies lyrics describing loss. Note that the descending bass line does not match the exact pattern of "When I am laid in earth," however. Instead of moving by half-step exclusively, the line passes in a whole-half-half-half pattern, i.e., A–G–F♯–F–E. In this situation, the bass line is diatonic (i.e., dictated by the underlying natural minor scale) with chromatic inflection. Doll would categorize this pattern as "the Drop," indicating the larger interval between A and G.

Figure 1. Sad lyrics and chromatic descending bass line in "When I am laid in earth," mm. 5–8, from Dido and Aeneas (1680) by Henry Purcell.

Figure 2. Sad lyrics and minor (mixture) descending bass line in "Babe I'm Gonna Leave You" (1969) by Led Zeppelin.

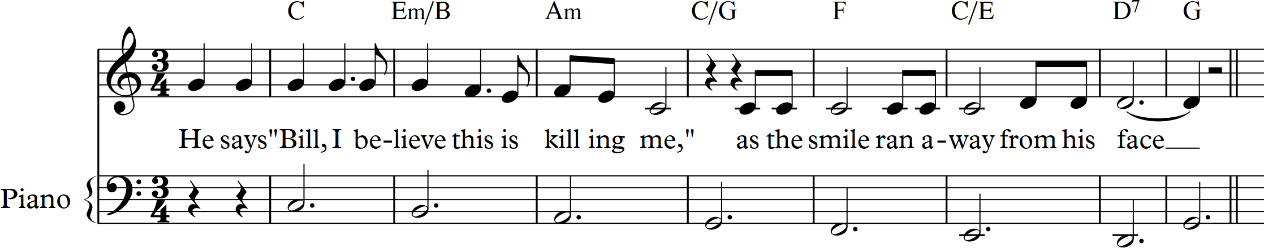

Other descending bass lines in popular music sometimes subvert the observed relationship between the minor mode and sad lyrics. In Billy Joel's "Piano Man" (1973) (Figure 3) the singer describes a bar full of people who are failing to achieve their dreams, with lyrics such as

He says, "Bill, I believe this is killing me," as the smile ran away from his face. "Well I'm sure that I could be a movie star, if I could get out of this place."

Interestingly, the entire harmonic structure of the verse is centered around a diatonically descending bass line in C major, even though the overall emotional affect of the piece is arguably still quite melancholic, if not nostalgic or wistful.

We can observe the same discrepancy in common practice works. The major-mode lament "Piangerò la sorte mia" from Handel's Giulio Cesare (1724) (Figure 4) is one such instance, where Cleopatra, imprisoned by her brother Tolomeo and hearing of the alleged death of Cesare, begins to contemplate her fate. At first glance, descending pitch and sadness are strongly associated. Indeed, all the presented instantiations of sad lyrics feature descending pitch movement in either the accompaniment, melody, or both. However, one can likely list a number of songs where this relationship does not hold. There are also instances in which a descending bass signals sadness, regardless of mode. This study seeks to determine the relationship (if any) between descending bass line and the emotion of sadness using empirical and behavioral methods, in light of these discrepancies.

Figure 3. Sad lyrics and diatonically (major) descending bass line in "Piano Man" (1973) by Billy Joel.

Figure 4. Sad lyrics and diatonically (major) descending bass line in "Piangerò la sorte mia" (1724) by G. F. Handel.

Hypothesis

As implied by the lyrics to "Piano Man," the appearance of a descending bass line may also be associated with other negative affects, such as nostalgia, fear, or anger. This suggests first broadening the hypotheses to include a range of negative emotions, then testing for a sadness-specific correlation with descending bass patterns should the broader case prove significant. With respect to descending musical lines more broadly, it may also be the case that pitch descent is a universal tendency not only of Western music (cf. Heinrich Schenker's theory of the Urlinie, 1935/1979), but also of human speech ('t Hart, Collier, and Cohen, 1990). Huron (1996), for example, argued for a universality of the "melodic arch" in Western folk music, where the overall melodic trajectory of a given folk song typically demonstrates an inverted U-shape. However, Huron's comparison of overall pitch declination between song and everyday speech reveals that music exhibits only a tenth of the declination found in human utterances. Shanahan and Huron (2011) also found that German folk songs conclude with smaller intervals than Chinese folk songs. Thus, it may be the case that Western songs tend to descend in pitch regardless of emotive content.

Due to these exceptions, the following hypothesis serves as an empirical test of the nominal relationship between descending bass lines and negative emotion as conveyed by lyrics:

H1. Compared to songs with non-descending bass lines, songs with descending bass lines are more closely associated with lyrics that convey negative emotions.

Corpus

An ideal collection of songs on which to test this hypothesis would be both stylistically and historically diffuse. In this study, I have chosen two corpora which I believe are, at least in part, representative of both Western common practice song and popular music, and which also afford a computational harmonic and lyric analysis. The first is a collection of Bach cantatas (ca. 1720–1750) encoded at the Center for Computer Assisted Research in the Humanities (CCARH). While Bach is not representative of all classical song, this sample is helpful in that the bass lines are encoded in the continuo part of each work. To bring this research closer to present-day compositional practice, songs from the McGill Billboard Hot 100 database (Burgoyne, 2011) were also included. Originally created as a training database for music information retrieval tasks, the Billboard is currently the most stylistically diverse and historically expansive (ca. 1950–1990) collection of popular songs (de Clercq, 2015). Its primary shortcoming, however, is that harmonic information is encoded via root-name chord symbols, therefore falling short of the notational specificity provided by the Bach cantatas. One might suppose that the lack of complete musical texture for these songs might inhibit an accurate analysis of a bass part; however, a survey of the dataset reveals that more than 90% of chords in the Billboard are in root position, and a handful of songs do feature encoded chord inversions, which allows one to approach the issue with relative confidence. 3

Descending Bass Lines

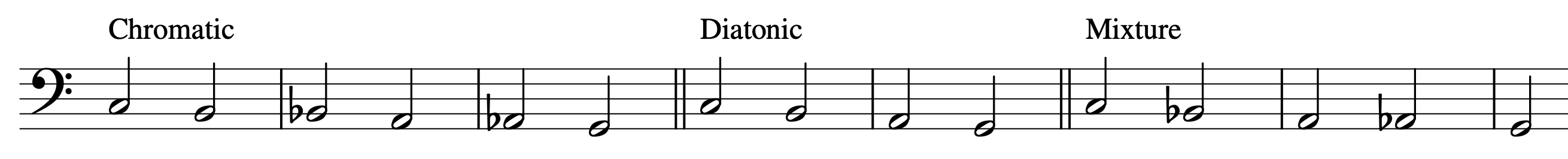

Broadly, a descending bass line is a salient musical progression that descends in pitch. For the purposes of this study, the "bass" is defined as the instrument or part that sounds the lowest pitches in the composition. A bass line is deemed to be descending if it conforms to one of three patterns: diatonic, chromatic, or a combination of the two (diatonic/chromatic). For example, a chromatically descending bass line is one that moves downward through a scale comprised of only half steps. By contrast, a diatonically descending bass line moves downward through the scale at the intervals dictated by the mode (i.e., the fixed combination of whole and half steps). Descending bass lines can also feature a mix of chromatic and diatonic inflections. Figure 5 illustrates these three types, as defined for this study.
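The three-way distinction above can be illustrated with a minimal sketch. It is not the procedure used in this study: the function name `classify_descent`, the ASCII note spellings, and the cutoff of one to three semitones per move are all assumptions made for illustration. A line is labeled chromatic if every move is a half step, diatonic if every note belongs to the supplied scale, and mixed otherwise.

```python
# Pitch classes (semitones above C); sharps and flats are spelled in ASCII.
PITCH_CLASS = {
    "C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4, "F": 5,
    "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9, "A#": 10, "Bb": 10,
    "B": 11,
}

# Scales expressed as semitones above the tonic.
MAJOR = frozenset({0, 2, 4, 5, 7, 9, 11})
NATURAL_MINOR = frozenset({0, 2, 3, 5, 7, 8, 10})


def classify_descent(notes, tonic, scale):
    """Label a descending bass line as 'chromatic', 'diatonic', or 'mixed'.

    Returns None when any move is not a descent of 1-3 semitones
    (an illustrative cutoff, not a criterion stated in the study).
    """
    pcs = [PITCH_CLASS[n] for n in notes]
    # Size of each downward move in semitones, folded into one octave.
    steps = [(high - low) % 12 for high, low in zip(pcs, pcs[1:])]
    if any(step not in (1, 2, 3) for step in steps):
        return None
    if all(step == 1 for step in steps):
        return "chromatic"
    tonic_pc = PITCH_CLASS[tonic]
    if all((pc - tonic_pc) % 12 in scale for pc in pcs):
        return "diatonic"
    return "mixed"


# The A-G-F#-F-E bass line of "Babe I'm Gonna Leave You", read in A minor.
print(classify_descent(["A", "G", "F#", "F", "E"], "A", NATURAL_MINOR))
```

Because F♯ lies outside A natural minor, the Led Zeppelin line falls into the mixed category, which agrees with its description earlier as diatonic with chromatic inflection.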

Descending bass lines typically move from ^1 to ^5 and the cycle is repeated with a leap back to ^1 (Williams, 1997; Caplin, 2014). However, not all descending bass lines are confined to this paradigm. For example, some descending bass lines in popular music examples cover larger spans (e.g., ^1 down to ^2, as in Figure 3) or may not begin the cycle on ^1. Only descending bass patterns that start on ^1 and contain at least four, but no more than six, notes are tracked in this study. Table 1 demonstrates the possible scale degree combinations between ^1 and ^5 in the key of C major/minor.

Finally, it is important to note that descending bass lines can occur at different levels of the metric hierarchy. For the purposes of this study, descending bass lines were tracked at three pulses: at the counting pulse (the beat division of the measure as dictated by the top number of the time signature), the half bar (e.g., every half note in a measure of 4/4), and at the downbeat pulse (on the first beat of every measure) (Cohn, 2016).

| S.D. (note) | ^1 (C) | ♮^7 (B♮) | ♭^7 (B♭) | ♮^6 (A♮) | ♭^6 (A♭) | ^5 (G) | Scale type |
|---|---|---|---|---|---|---|---|
| 6-note | 1 | ♮7 | ♭7 | ♮6 | ♭6 | 5 | Chromatic |
| 5-note | 1 | ♮7 | ♭7 | ♮6 | | 5 | |
| | 1 | ♮7 | ♭7 | | ♭6 | 5 | |
| | 1 | ♮7 | | ♮6 | ♭6 | 5 | |
| | 1 | | ♭7 | ♮6 | ♭6 | 5 | |
| | 1 | ♮7 | ♭7 | ♮6 | ♭6 | | |
| 4-note | 1 | ♮7 | | ♮6 | | 5 | Diatonic major |
| | 1 | | ♭7 | | ♭6 | 5 | Diatonic minor |
| | 1 | ♮7 | | | ♭6 | 5 | Harmonic minor |
| | 1 | ♮7 | ♭7 | | ♭6 | | |
| | 1 | ♮7 | | ♮6 | ♭6 | | |
| | 1 | | ♭7 | ♮6 | ♭6 | | |

As long as the descending bass schema manifests at one of these levels, then it is considered to fulfill the operational criteria of a descending bass line. In summary, the current study tracks descending bass lines that start on ^1 and contain at least four notes, regardless of the mode and metric level at which the schema manifests.

EXPERIMENT 1

Method

J. S. BACH CANTATA CORPUS

Western classical music excerpts featuring descending bass lines were extracted from the cantatas (n = 842 movements) by J. S. Bach included in the Humdrum Toolkit (Huron, 1996). These cantatas were selected because all the instrumental and vocal parts have been encoded. As songs, cantatas feature lyrics, as opposed to other instrumental genres such as symphonies or sonatas. Extracting a descending bass pattern was accomplished by first converting each pitch in the continuo part into scale degrees, which removed its associated chromatic inflections. For example, a bass line (in the key of C minor) comprised of C–B–C–Ab–G would be translated by Humdrum into ^1–^7–^1–^6–^5. All continuo parts of the cantatas were then parsed for the patterns outlined in Table 1. Because this study tracks descending bass lines at three levels of the metric hierarchy, schemata in which every other member of the pattern features the necessary scale degree were also tracked (e.g., ^1–x–^7–x–^6–x–^5, where "x" can be any scale degree). I decided a priori that only cantata movements with eight or more instantiations of a repeated bass pattern would be included in the analysis.
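The search logic described above can be sketched in a few lines. This is an illustration only, not the Humdrum commands used in the study: the function names, the list-of-integers input, and the use of a note-count stride to stand in for metric level are assumptions. A stride of 1 matches adjacent notes, while a stride of 2 matches the interpolated form ^1–x–^7–x–^6–x–^5.

```python
def count_pattern(degrees, pattern=(1, 7, 6, 5), stride=1):
    """Count (possibly overlapping) occurrences of `pattern` in `degrees`.

    With stride > 1, only every stride-th note must match, so the notes
    in between (the "x" positions) can be any scale degree.
    """
    span = (len(pattern) - 1) * stride
    count = 0
    for start in range(len(degrees) - span):
        window = degrees[start:start + span + 1:stride]
        if tuple(window) == tuple(pattern):
            count += 1
    return count


def qualifies(degrees, pattern=(1, 7, 6, 5), strides=(1, 2), min_count=8):
    """True if the pattern occurs at least `min_count` times at any stride."""
    return any(
        count_pattern(degrees, pattern, stride) >= min_count
        for stride in strides
    )


# The C-B-C-Ab-G example from the text: no ^1-^7-^6-^5 at stride 1.
print(count_pattern([1, 7, 1, 6, 5]))
# An interpolated instance found at stride 2.
print(count_pattern([1, 3, 7, 2, 6, 4, 5], stride=2))
```

A fuller implementation would align the stride with beats, half bars, and downbeats rather than with note counts, which requires the rhythmic information in the encoding.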

McGILL BILLBOARD CORPUS

Popular music excerpts featuring descending bass lines were extracted from the 760 songs featured in the McGill Billboard Corpus (Burgoyne, 2011) in a similar manner to the previous corpus. However, because the data in the Billboard corpus were encoded in a different format than the Humdrum cantatas, a new parser had to be implemented. The parser is a modified version of that used in Gauvin (2015), which re-encodes the chord symbols associated with each song in a format consistent with scale degrees. As before, the parsing procedure included patterns in which interpolated pitches were ignored at different levels of metric hierarchy. This resulted in 95 songs that feature descending bass lines.
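To give a sense of what re-encoding chord symbols as scale degrees involves, the sketch below maps a chord root to a chromatic scale-degree label relative to a tonic. It is not the Gauvin (2015) parser or its modification. The `root:quality` symbol shape (optionally followed by `/inversion`), the function name, and the degree labels are assumptions for illustration, and inversions are deliberately ignored so that the chord root stands in for the bass note.

```python
# Pitch classes (semitones above C); sharps and flats are spelled in ASCII.
PITCH_CLASS = {
    "C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4, "F": 5,
    "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9, "A#": 10, "Bb": 10,
    "B": 11,
}

# Chromatic scale-degree labels indexed by semitones above the tonic.
DEGREE_LABELS = ["1", "b2", "2", "b3", "3", "4", "#4", "5", "b6", "6", "b7", "7"]


def root_degree(chord_symbol, tonic):
    """Return the scale-degree label of a chord's root relative to `tonic`.

    Assumes symbols shaped like 'A:min' or 'C:maj/3'; any inversion
    suffix is discarded, so the root is treated as the bass note.
    """
    root = chord_symbol.split(":")[0].split("/")[0]
    return DEGREE_LABELS[(PITCH_CLASS[root] - PITCH_CLASS[tonic]) % 12]


# A hypothetical progression in A whose roots descend A-G-F-E.
progression = ["A:min", "G:maj", "F:maj", "E:maj"]
print([root_degree(chord, "A") for chord in progression])
```

The resulting labels can then be searched for the Table 1 patterns in the same way as the cantata continuo parts.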

LYRIC ANALYSIS

I retrieved lyrics associated with each song from The Bach Cantatas website (https://webdocs.cs.ualberta.ca/~wfb/cantatas/bwv.html) and Google Play, respectively. Non-matches (i.e., incongruities between the titles of the encoded cantatas and those found on the website) were discarded from the following analysis. I then analyzed lyrics for their emotive content using the Linguistic Inquiry and Word Count database (LIWC) (Pennebaker et al., 2015a, 2015b). LIWC (pronounced "Luke") is a psychometric text analysis tool that categorizes words according to content and function, through comparison to the LIWC dictionary, which was "carefully developed using established, standard psychometric approaches such as validation on external psychological data, as well as techniques that ensure high internal reliability from a statistical perspective" (Boyd, 2017, p. 165). To describe LIWC concisely, a user uploads a text document and the words within are compared to a total of 80 word-type categories in the LIWC dictionary. This process also accounts for frequency and clustering (i.e., the tendency for people to group similar types of words together). LIWC output details word count, words per sentence, and continuous variables for content categories. To test the standing hypothesis, this study focuses on the "emotional tone" or "tone" variable, where "a high number is associated with a more positive, upbeat style; a low number reveals greater anxiety, sadness, or hostility. A number around 50 suggests either a lack of emotionality or different levels of ambivalence" (Pennebaker, Booth, Boyd & Francis, 2015b, p. 22).

Word count is a critical criterion for using LIWC effectively, since the dictionary cannot provide an accurate measure of emotional affect with only a few words to compare to its database. A preliminary word count of the Bach cantatas, which were expected to have low word count due to segmentation into movements, revealed a median of 39 and a mean of 48 words per movement. Compared to the Billboard songs (mean = 251, median = 234), this is quite low. Thus, it was resolved a priori to limit the analysis of the cantatas to only movements that met a minimum criterion of 39 words (the median) or more, effectively removing half of the Bach cantata corpus (n = 356 available movements). Finally, it should be noted that for songs in languages other than English (i.e., the cantatas), Google Translate was used to convert the text to English before submission to LIWC. One might suppose that machine translation would distort the affective content ratings; however, since LIWC is lexically oriented (i.e., cued by emotional words) and pays little attention to grammatical structure, machine translation provides an appropriate input (Boyd, 2017) to LIWC.
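The median-based cutoff can be expressed compactly. The sketch below is illustrative only: the function name, the dictionary input, the whitespace-based word count, and the placeholder texts are assumptions and do not reproduce how words were counted in the study.

```python
from statistics import median


def filter_by_median_word_count(lyrics_by_id):
    """Keep only texts whose word count is at or above the corpus median.

    Words are counted by splitting on whitespace (a simplification).
    Returns the threshold and the retained texts.
    """
    counts = {key: len(text.split()) for key, text in lyrics_by_id.items()}
    threshold = median(counts.values())
    kept = {
        key: text
        for key, text in lyrics_by_id.items()
        if counts[key] >= threshold
    }
    return threshold, kept


# Placeholder texts of 10, 39, and 80 words (not real cantata lyrics).
toy_corpus = {
    "mvt_a": "word " * 10,
    "mvt_b": "word " * 39,
    "mvt_c": "word " * 80,
}
threshold, kept = filter_by_median_word_count(toy_corpus)
print(threshold, sorted(kept))
```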

Results

The results are summarized in Table 2, which shows the mean LIWC "tone" values and their standard deviations for each of four conditions. In the case of the Bach cantatas, an independent-samples t-test revealed no significant difference between the negative emotion values of the descending bass line and non-descending movements, t(354) = .55, p = .58. Similarly, no significant difference in the negative emotion values between these groups was evident from the McGill Billboard songs, t(726) = -1.11, p = .27. These results were not consistent with Hypothesis 1.
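For readers who wish to check an independent-samples t-test against summary statistics, the following sketch recomputes a pooled-variance t statistic from the group means, standard deviations, and sizes reported for the Bach cantatas in Table 2. It assumes the equal-variance (Student) form of the test, which the reported degrees of freedom suggest, and it approximates the two-sided p-value with the normal distribution, a reasonable simplification only because the degrees of freedom are large. The function names are hypothetical.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / std_error, df


def two_sided_p_normal_approx(t):
    """Two-sided p-value using the normal approximation to the t distribution."""
    return math.erfc(abs(t) / math.sqrt(2))


# Bach cantatas: descending (n = 31) vs. non-descending (n = 325), Table 2.
t, df = pooled_t_from_summary(66.90, 39.84, 31, 62.78, 39.64, 325)
print(f"t({df}) = {t:.2f}, p = {two_sided_p_normal_approx(t):.2f}")
```

Because the inputs are rounded to two decimals, the result can differ slightly from a test run on the raw LIWC scores.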

Discussion

In Table 2, note that the descending bass groups demonstrated a greater average tone variable than the comparison groups in both corpora. This is somewhat puzzling. My first intuition is that I perhaps painted with too broad of a brush: Caplin (2014) and Williams (1997) are both reticent about associating the lament topic with any bass lines that are not set in the minor mode, and even then they admit they are uneasy admitting the association stands for all cases. Addressing this issue is ultimately a matter of sampling. As it stands, 49.2% of the gross cantata movements are in minor (n = 415). Selecting only cantatas that contain the required median of 39 words (n = 356) from this collection leaves 182 songs. And only 13 of these 182 feature a descending bass pattern. Thus, we would be faced with a truly unremarkable sample size.

Reconciling modality for the Billboard songs presents a slew of complications; namely because defining popular song as "major" or "minor" is, at best, a tenuous endeavor. One approach is to analyze harmonies by framing them against a global tonic, which may or may not be fully actualized throughout the course of the work. This most closely follows the Schenkerian methodology of Walter Everett (2009), which frequently gives priority to a major-mode (i.e., Ionian) centered interpretation of chord relationships. I argue this type of generalization is not ideal in light of some of the pervasive (and more importantly, salient) harmonic characteristics of popular music, including modal mixture and chromatic alteration of roots. 4 A more detailed method to define modality is to segment a song into sections—that is, each formal zone (e.g., verse, chorus) is treated as having its own tonal center by which the chords within are contextualized. 5 This approach follows how the Billboard is encoded: each formal section is categorized under a "local key" heading. However, even by narrowing the temporal range in which the harmonies are analyzed, the functional relationship between chords is still not always clear, nor is the tonal center. Biamonte (2010) addresses this tendency by arguing that the harmonic content of many rock songs can be best understood as a collection of modal and pentatonic patterns and are thus not governed by traditional tertian harmony. Indeed, this concern has impacted harmony-oriented popular music corpus studies; de Clercq and Temperley (2011) have excluded non-triadic songs from their statistical analyses, presumably because the song's harmonic structure complicates defining a tonic.

| Group | Mean "tone" | Standard dev. |
|---|---|---|
| Bach cantatas (wc ≥ 39) | 64.30 | 41.06 |
| descending (n = 31) | 66.90 | 39.84 |
| non-descending (n = 325) | 62.78 | 39.64 |
| McGill Billboard songs | 60.42 | 35.42 |
| descending (n = 95) | 64.19 | 35.82 |
| non-descending (n = 633) | 59.85 | 32.75 |

Even if one considers all possible modes in their analysis and then sorts them into a major/minor dichotomy, not all modes stand on equal ground in terms of their perceived affect. A study by Temperley and Tan (2013) shows that Dorian was consistently rated as "happier" than Aeolian, even though both are technically minor (in that they both contain lowered-third scale degrees). In a follow-up study, Tan and Temperley (2017) suggest that a higher degree of "sharpness" (i.e., modes that contain more raised scale degrees), combined with a listener's familiarity with the tonal system, may be the primary factors of perceived happiness or sadness. These studies suggest there is a gradient relationship between emotional valence and a work's underlying harmonic system. Given the diffuse harmonic makeup of popular songs, the subjective nature of defining modality in harmonically ambiguous settings, and the spectrum of emotional affect as it corresponds to modality, claims of association between conveyed emotion and a tonal center in popular music will remain tenuous until our understanding of pop-rock tonality is further developed.

Overall, the case made above suggests that a clearly conveyed major/minor modality may not be a systematic structural feature of popular music. This, I believe, diminishes any claims about songwriters choosing to contextualize a descending bass pattern within a major or minor key. Because popular music research is currently not in a position to make a convincing case for a generalized theory of modality, 6 I attempted to resolve a song's general key affect (i.e., major or minor) by reconciling traditional analysis with an analytically agnostic, computationally driven approach. For each of the 95 songs with descending bass lines in the McGill Billboard Corpus, the modality reported by the Spotify API was compared with my a priori interpretation, which resulted in an agreement of 72%. By considering only minor-mode songs from this cross-validated collection, I was left with 30 songs with descending bass lines. Again, this is an unfortunately small sample. Another method would be to let the descending bass pattern "speak for itself"; that is, I could choose only the descending bass patterns that fit the scale degrees of the diatonic minor mode (e.g., ^1–^b7–^b6–^5). Again, however, this approach is problematic, as it ignores how these scale degrees are harmonized and instead assumes that a listener could hear this pattern as salient in reference to a global tonic. It also yields a similarly poor sample of just 28 songs.

The results of my first experiment, framed by the preceding complications, would suggest there is no guarantee that a composer has intentionally matched a descending bass line with negatively valenced lyrical content in the context of a mode. Listener responses may instead provide a better measure of emotional valence in songs that employ the descending bass compositional pattern. General acoustic properties of a recording may also provide more insight into affect than harmonic setting. In response, a second study was conducted that examines the relationship between songs with descending bass lines and five additional acoustical-musical cues that have been demonstrated to be associated with sadness (Huron, Anderson, & Shanahan, 2014).

EXPERIMENT 2

Sad-sounding musical passages have been reported to exhibit the following features: quieter dynamic levels, slower tempi, smaller pitch movements, relatively low overall pitch, "mumble-like" articulations, and darker timbres (Juslin & Laukka, 2003; Huron, Anderson, & Shanahan, 2014). Experiment 1 did not and could not address these pertinent features, for two reasons. First, complete notations for popular music excerpts do not necessarily exist. Indeed, the harmonic content of the McGill Billboard corpus consists exclusively of chord symbols as reductions of musical texture. Second, even if these songs were fully scored, notated music does not capture all musical properties that contribute to the general affect of a sound, such as timbre and articulation. However, such traits may be judged by listening, which serves as the basis for the following hypothesis:

H2. Compared to songs with non-descending bass lines, musical excerpts featuring descending bass lines are judged by listeners to better exhibit acoustic features already established to be associated with sadness.

Specifically, one would expect an association between descending bass lines and the five features (quieter, slower, etc.) identified earlier. To avoid potential experimenter bias, independent listeners unfamiliar with the purpose of this study were asked to make such judgements.

Method

Twenty-three musician participants were recruited from among undergraduate and graduate music students and faculty at The Ohio State University. None of the participants were familiar with the purpose of the study. Participants were asked to sit at a computer terminal in a soundproofed booth and listen to 40 fifteen-second musical excerpts through headphones.

Musical stimuli consisted of fifteen-second extracts from sound recordings corresponding to the works listed in the Billboard corpus. Half of the excerpts played for participants were randomly selected from songs that were reported to feature a descending bass line from the results of the above corpus study (n = 20), while the other half were randomly selected from the non-descending songs (the target and control songs are listed in the appendix). Upon listening, participants were prompted to respond to the questions stated below, on a 7-point scale.

PREDICTIONS

It was determined a priori that dynamics (i.e., loudness) and articulation would not be effective predictors of songs with descending bass patterns. In popular music, dynamic levels are often restricted or normalized using dynamic compression technology. Uploading almost any popular song to the sound editor program Audacity, for example, reveals a "loaf-shaped" audio visualization. Similarly, in contrast to Western art music, which commonly exhibits a range of articulations between staccato and legato textures, my anecdotal experience with popular music leads me to hear it as mostly smooth (i.e., notes are connected with legato articulation). This leaves slowness or pace, interval size, and timbre as anticipated effective predictors. To summarize, it is expected that excerpts with descending bass lines will be judged to be slower, demonstrate audibly smaller interval sizes, and have a darker timbre, which would indicate a generally sadder affect.

INSTRUCTIONS

The instructions for participants are given below:

In this survey we will ask a series of questions related to the musical and acoustic features of musical excerpts. For each excerpt, rate how well the music you hear conforms to the characteristic being described by the question. Note that when a question asks you to focus on the accompaniment, be sure to listen closely to the instruments and not the vocal part (if any). The content of the lyrics or the quality of the singer's voice should have no bearing on your judgement of the accompaniment's musical or acoustic characteristics.

| Very quiet | | | | | | Not quiet at all |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Very slow | | | | | | Not slow at all |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Mostly small | | | | | | Mostly large |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Mostly staccato | | | | | | Mostly legato |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| Very dark | | | | | | Not dark at all |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

In addition, the survey asked participants to indicate whether they were familiar or unfamiliar with the excerpt. Following completion of the survey, participants were debriefed in order to alert the experimenters of the possibility of unwanted demand characteristics, or possible confusion in completing the survey. Most respondents reported taking between 30 and 45 minutes to complete the survey; no respondents reported taking more than 75 minutes.

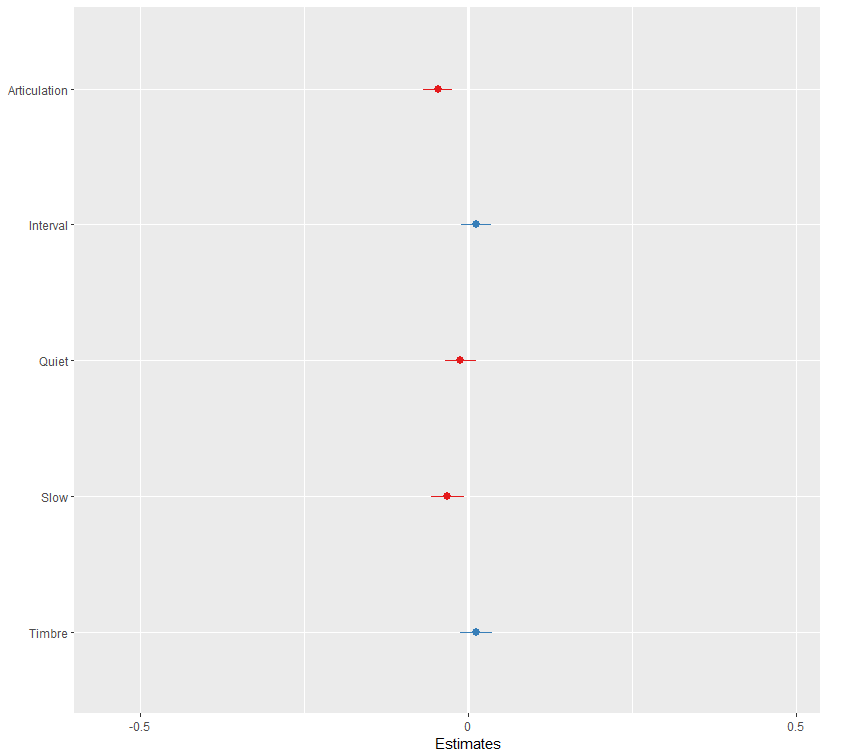

Results

In order to understand the results of the survey, a binomial multivariate logistic regression analysis was conducted, where the predicted variable is whether the excerpt exhibits a descending or non-descending bass line, F(5, 919) = 2.65, p < .01. Each of the five variables in the survey was used as a predictor variable. The results suggest that two variables were significant predictors, namely slowness/pace (p = .01) and articulation (p < .01). Timbre (p = .30), dynamics/loudness (p = .33), and interval size (p = .28) were non-significant (Table 3). Therefore, of the three features anticipated to be effective predictors (slowness/pace, interval size, and timbre), only slowness/pace was predictive as anticipated; articulation proved significant even though it was not expected to be.
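The p-values in Table 3 can be related to the reported z-values with a short calculation. The sketch below assumes that the Pr(>|z|) column reports a two-sided Wald test against the standard normal distribution, which is the usual convention for logistic regression output but is not stated in the text. The function name is hypothetical, and the z-values are copied from Table 3 as rounded there.

```python
import math


def wald_p_value(z):
    """Two-sided p-value for a z statistic under the standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


# z-values as reported (rounded) in Table 3.
z_values = {
    "Articulation": -4.00,
    "Interval": 1.08,
    "Quiet": -0.98,
    "Slow": -2.44,
    "Timbre": 1.04,
}

for predictor, z in z_values.items():
    print(f"{predictor}: z = {z:+.2f}, p = {wald_p_value(z):.4f}")
```

Small differences from the tabled values are expected, since the table rounds both the z-values and the p-values.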

Note that the sample size for participant responses is small (n = 23). This is reflected in the log-odds ratios reported in Figure 6. For example, when considering interval size as a predictor of a descending bass line, participants did so at odds better than chance, but only by a slim margin. That is, if the odds of successfully predicting that a song features a descending bass pattern are 1 to 1, then listeners did so by evaluations of interval size at a rate of 1.01 to 1.

Discussion

An ideal outcome from this study is that participants would (1) hear the descending bass, if present, as salient and (2) report the song as evoking small interval sizes, in addition to the other features that track with known characteristics of sad speech and music. A close look at the data for "Man in the Mirror" (1988), one of the target descending bass songs (notated in Figure 7), represents the results well. Listeners rated the song as more legato than average (mean = 5.57, where 7 is most legato) against all songs (mean = 3.93), but also as slower than average (mean = 2.43, where 1 is the slowest) against all songs (mean = 4.16). This tracks with what we know about laments such as "When I am laid in earth" and "Piangerò la sorte mia." Both are ostensibly slow and are performed with legato articulation, as is the case for "Man in the Mirror." The musical topic that theoretically ties these three songs together, the entire crux of this empirical investigation, however, appears to be insignificant to the 23 listeners. The data show that, for "Man in the Mirror," the average interval size (mean = 3.82) was reported as just that: average (mean = 3.12 across all songs).

| Predictor | Estimate | Std. Error | z-value | Pr(>\|z\|) |
|---|---|---|---|---|
| Intercept | 1.11 | 0.42 | 2.65 | .01** |
| Articulation | -0.19 | 0.05 | -4.00 | 6.22e-05*** |
| Interval | 0.05 | 0.05 | 1.08 | .28 |
| Quiet | -0.05 | 0.05 | -0.98 | .33 |
| Slow | -0.13 | 0.05 | -2.44 | .01* |
| Timbre | 0.05 | 0.05 | 1.04 | .30 |

Significance codes: *** p < .001, ** p < .01, * p < .05

| Articulation | Interval | Quiet | Slow | Timbre |
|---|---|---|---|---|
| 0.95 | 1.01 | 0.99 | 0.97 | 1.01 |

Figure 6. Log-odds ratios for participant ratings of acoustic features of sad sounds.

Why might this be the case? One could speculate that, because "Man in the Mirror" features a diatonic major descending bass line from ^1 to ^4, the total number of whole steps (3 between ^7–^6–^5–^4) may have inflated the rating. However, I am not convinced this reflects the listeners' interpretation. Instead, I would argue that the airy synthesizer (articulation), finger snaps on beats 2 and 4 (slowness/pace), and the multi-track vocals (even though we asked participants to ignore the voice) are much more salient than any descending root motion in the bass, even though participants wore headphones. 7 It is true that participants may simply have been presented with an unfortunately selected excerpt from the random sample of descending bass songs, but their evaluations of articulation and slowness are still convincing, which suggests they were indeed capable of making the holistic acoustic judgments asked of them. My conclusion, however, is that harmonic transitions, as reflected by the descending bass pattern in the context of a tonal center (in this case, Ionian), may be secondary to other musical characteristics in conveying emotional affect. That is to say, the hegemony of harmony appears to take a back seat to pace (e.g., tempo and meter) and articulation (e.g., attack, but also perhaps rhythm and phrasing).

CONCLUSION

The lament topic was a signal to listeners that the narrative had taken a turn for the worse. Lovers would depart, a heroine would be imprisoned, and/or someone was surely soon to be at Death's door. For 17th-century listeners, the descending bass pattern, especially in the context of the minor mode, shared a close association with such dramatic developments. But when Handel began the introduction to "Piangerò la sorte mia," it seems unlikely that keen listeners would have been confused about the context of the aria, namely because the descending bass was still slow, legato, and set in triple meter. Plus, they would have been cued in to the dramatic developments leading up to Cleopatra's imprisonment. Thus, the general message of the topic would still be maintained, even if one of the parameters had been altered.

A primary shortcoming of this study is that too few encoded songs from the 16th century to the present are available for computational analysis. If there were more, I would expect the results of the first study to be even more diffuse as time progresses. Even from the start, a descending bass line on its own is not necessarily a topic, but a convenient compositional device to get from tonic to dominant harmony (or in the case of popular music, something else entirely). Context makes the pattern a topic. Tracking the use of the descending bass line over time, across styles and geographic regions, would likely yield intriguing observations about this common contrapuntal device. A larger sample would also provide more specificity to hypothesis-driven studies, including this one. One could limit analysis to only songs in the minor mode, set in a slow tempo, and in triple meter, thus sidestepping the issue of sample size. Moreover, there would perhaps be fewer limitations on word count, thus granting LIWC more power to make a convincing emotional analysis. A corpus of opera arias would be the ideal testing ground for a lament-related hypothesis.

The second half of this study also encourages expansion. Emotional valence is more nuanced than the positive-negative dichotomy assumed here; instead, it might be informative to have participants respond to the excerpts by arousal and affect to arrive at more specific emotional categories like grief (high arousal, negative affect), melancholy (low arousal, negative affect), contentedness (low arousal, positive affect), or joy (high arousal, positive affect). Lyrics could also be considered. For example, I would categorize "Man in the Mirror" as conveying a generally neutral affect because the lyrics both remark on injustices and express a desire to "make a change." Meanwhile, the music sits at a relatively low state of arousal compared to the other songs sampled. By this measure, the song could be characterized as "contemplative" or "aspirational," which tracks with the expected associated affect of a diatonic major bass line.
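The two-dimensional scheme proposed above can be sketched as a simple quadrant lookup. This is only an illustration: the function name `emotion_category`, the 7-point rating scale, and the midpoint threshold of 4 are all assumptions, not part of the present study's design.

```python
def emotion_category(arousal: float, affect: float, midpoint: float = 4.0) -> str:
    """Map an (arousal, affect) rating pair to one of four emotion labels.

    Ratings strictly above `midpoint` count as high arousal / positive affect;
    ratings at or below it count as low arousal / negative affect.
    The scale and threshold are hypothetical choices for illustration.
    """
    high_arousal = arousal > midpoint
    positive_affect = affect > midpoint

    if high_arousal and not positive_affect:
        return "grief"
    if not high_arousal and not positive_affect:
        return "melancholy"
    if not high_arousal and positive_affect:
        return "contentedness"
    return "joy"


# Example: a low-arousal excerpt with mildly positive affect.
print(emotion_category(arousal=2.5, affect=5.0))
```

A hard threshold leaves no room for the "neutral" reading suggested for "Man in the Mirror"; a fuller design would likely need a neutral band around the midpoint.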

ACKNOWLEDGMENTS

This article was copyedited by Tanushree Agrawal and Niels Chr. Hansen and layout edited by Diana Kayser.

NOTES

1. Correspondence can be addressed to: Nicholas Shea, Ph.D., Assistant Professor of Music Theory, Arizona State University, Music Building, 50 E Gammage Pkwy, Tempe, AZ 85281

2. Figures 1–4 are transcriptions made by the author and do not reference any specific score.

3. de Clercq and Temperley (2011) similarly report that 94.1% of the chords in the Rolling Stone 200 corpus are in root position. This appears to be a stylistic feature of popular music more broadly.

4. Schenker treated chromatic (i.e., non-major) scale degrees as non-structural alterations to the underlying diatonic scale.

5. For a summary of these two approaches, see Capuzzo (2009).

6. My personal view is that modality (i.e., major vs. minor) in popular music is sectional, but also that chords on strong beats should be given more priority as perceived tonics. Moreover, I do believe that formal sections belong to "collections" of scale degrees, from which listeners experience major and minor harmonies in a syntactic manner.

7. A study by Pollard-Gott (1983) suggests that trained listeners were only able to hear structural features such as harmony, themes, and cadences after multiple hearings.

REFERENCES

- Biamonte, N. (2010). Triadic modal and pentatonic patterns in rock music. Music Theory Spectrum, 32(2), 95–110. https://doi.org/10.1525/mts.2010.32.2.95

- Boyd, R. L. (2017). Psychological text analysis in the digital humanities. In S. Hai-Jew (Ed.), Data analytics in the digital humanities (pp. 161–189). Cham, Switzerland: Springer Nature. https://doi.org/10.1007/978-3-319-54499-1_7

- Burgoyne, A. (2011). Stochastic processes and database-driven musicology. Doctoral dissertation, McGill University, Montreal, Canada.

- Caplin, W. (2014). Topics and formal function: the case of the lament. In D. Mirka (Ed.), The Oxford handbook of topic theory (pp. 415–452). New York, NY: Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199841578.013.0016

- Capuzzo, G. (2009). Sectional tonality and sectional centricity in rock music. Music Theory Spectrum, 31(1), 157–174. https://doi.org/10.1525/mts.2009.31.1.157

- Cohn, R. (2016). Analytical theory of musical meter, part 1. Materials from the Society of Music Theory Graduate Student Workshop.

- de Clercq, T. (2015). Corpus studies of harmony in popular music: a response to Léveillé Gauvin. Empirical Musicology Review, 10(3), 239–244. https://doi.org/10.18061/emr.v10i3.4842

- de Clercq, T. & Temperley, D. (2011). A corpus analysis of rock harmony. Popular Music, 30(1), 47–70. https://doi.org/10.1017/S026114301000067X

- Doll, C. (2017). Hearing harmony: toward a tonal theory for the rock era. Ann Arbor, MI: University of Michigan Press. https://doi.org/10.3998/mpub.3079295

- Everett, W. (2009). The foundations of rock. New York, NY: Oxford University Press.

- Gjerdingen, R. (2007). Music in the Galant style. Oxford, UK: Oxford University Press.

- Gauvin, H. L. (2015). "The times they were a-changin'": a database-driven approach to the evolution of musical syntax in popular music from the 1960s. Empirical Musicology Review, 10(3), 215–238. https://doi.org/10.18061/emr.v10i3.4467

- Huron, D. (1996). The melodic arch in Western folksongs. Computing in Musicology, 10, 3–23.

- Huron, D., Anderson, J., & Shanahan, D. (2014). You can't play a sad song on the banjo: acoustic factors in the judgement of instrument capacity to convey sadness. Empirical Musicology Review, 9(1), 29–41. https://doi.org/10.18061/emr.v9i1.4085

- 't Hart, J., Collier, R., & Cohen, A. (1990). A perceptual study of intonation: an experimental-phonetic approach to speech melody. Cambridge, UK: Cambridge University Press. https://doi.org/10.1017/CBO9780511627743

- Juslin, P. N. & Laukka, P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychological Bulletin, 129(5), 770–814. https://doi.org/10.1037/0033-2909.129.5.770

- Lieberman, P. & Michaels, S. B. (1962). Some aspects of fundamental frequency and envelope amplitude as related to the emotional content of speech. Journal of the Acoustical Society of America, 34, 922–927. https://doi.org/10.1121/1.1918222

- Pennebaker, J. W., Boyd, R. L., Jordan, K., & Blackburn, K. (2015a). The development and psychometric properties of LIWC2015. Austin, TX: University of Texas.

- Pennebaker, J. W., Booth, R. J., Boyd, R. L., & Francis, M. E. (2015b). Linguistic inquiry and word count: LIWC2015, Operator's Manual. Austin, TX: Pennebaker Conglomerates.

- Pollard-Gott, L. (1983). Emergence of thematic concepts in repeated listening to music. Cognitive Psychology, 15(1), 66–94. https://doi.org/10.1016/0010-0285(83)90004-X

- Schenker, H. (1935/1979). Free composition (E. Oster, Trans.). New York, NY: Longman. (Original work published 1935).

- Schmalfeldt, J. (2001). In search of Dido. Journal of Musicology, 18(4), 584–615. https://doi.org/10.1525/jm.2001.18.4.584

- Shanahan, D. & Huron, D. (2011). Interval size and phrase position: a comparison between German and Chinese folksongs. Empirical Musicology Review, 6(4), 187–197. https://doi.org/10.18061/1811/52948

- Tan, D. & Temperley, D. (2017). Perception and familiarity of diatonic modes. Music Perception, 34(3), 352–365. https://doi.org/10.1525/mp.2017.34.3.352

- Temperley, D. & Tan, D. (2013). Emotional connotations of diatonic modes. Music Perception, 30(3), 237–257. https://doi.org/10.1525/mp.2012.30.3.237

- Wilce, J. (2009). Language and emotions. New York, NY: Cambridge University Press.

- Williams, P. (1997). The chromatic fourth during four centuries of music. Oxford, UK: Clarendon Press.

APPENDIX

| Song name, Artist | Control | Quiet | Slow | Inter. | Articu. | Timbre | Familiar |

|---|---|---|---|---|---|---|---|

| Man in the Mirror, Michael Jackson | 0 | 3.74 | 2.43 | 3.83 | 5.57 | 5.39 | 3.04 |

| Hooked on a Feeling, BJ Thomas | 0 | 3.65 | 2.74 | 2.09 | 5.35 | 4.09 | 2.00 |

| Tonight Tonight Tonight, Genesis | 0 | 4.74 | 3.52 | 2.96 | 5.30 | 3.87 | 1.48 |

| Maybe I'm Amazed, Paul McCartney | 0 | 4.09 | 3.22 | 3.22 | 5.17 | 3.43 | 2.30 |

| On the Wings of a Nightingale, Everly Brothers | 0 | 4.83 | 4.04 | 3.43 | 4.70 | 4.13 | 1.30 |

| Sail on Sailor, The Beach Boys | 0 | 4.52 | 3.30 | 2.65 | 4.43 | 3.96 | 1.43 |

| Bluebirds Over the Mountains, The Beach Boys | 0 | 4.39 | 4.35 | 3.00 | 4.35 | 4.26 | 1.48 |

| Standing in the Shadows of Love, Four Tops | 0 | 5.22 | 4.57 | 3.43 | 4.26 | 3.65 | 1.70 |

| Its Rainin Men, The Weather Girls | 0 | 3.83 | 4.74 | 2.39 | 4.17 | 3.74 | 3.00 |

| Sanctify Yourself, Simple Minds | 0 | 3.91 | 4.04 | 3.13 | 4.00 | 4.57 | 1.30 |

| Town Without Pity, Gene Pitney | 0 | 3.52 | 3.17 | 2.65 | 4.00 | 3.52 | 1.30 |

| Maneater, Hall and Oates | 0 | 4.35 | 4.39 | 2.65 | 3.83 | 3.78 | 1.83 |

| I Want You Back, Jackson 5 | 0 | 6.00 | 4.83 | 2.91 | 3.83 | 5.00 | 4.04 |

| Lady, The Commodores | 0 | 5.22 | 4.39 | 3.35 | 3.83 | 4.22 | 1.74 |

| One Bad Apple, The Osmonds | 0 | 4.39 | 5.22 | 3.17 | 3.48 | 5.30 | 2.22 |

| Looking for a Love, J Giels Band | 0 | 5.30 | 5.87 | 3.30 | 3.43 | 4.78 | 1.39 |

| I Want You to Want Me, Cheap Trick | 0 | 4.78 | 5.26 | 2.00 | 3.26 | 3.74 | 3.17 |

| 25 or 6 to 4, Chicago | 0 | 5.00 | 5.39 | 3.22 | 3.26 | 3.61 | 2.26 |

| Silver Threads and Golden Moons, The Cowsills | 0 | 4.87 | 4.52 | 3.61 | 2.96 | 3.48 | 1.22 |

| Black Cars, Gino Vannelli | 0 | 5.74 | 4.57 | 4.43 | 2.91 | 5.83 | 1.52 |

| My Hometown, Bruce Springsteen | 1 | 2.26 | 2.26 | 2.17 | 5.43 | 3.57 | 1.30 |

| Must of Got Lost, J Giels Band | 1 | 4.22 | 3.13 | 3.87 | 4.65 | 3.83 | 1.39 |

| Invisible Touch, Genesis | 1 | 5.00 | 4.48 | 3.39 | 4.48 | 4.13 | 1.87 |

| Heart Full of Soul, Yardbirds | 1 | 4.87 | 4.52 | 3.39 | 4.48 | 3.78 | 1.30 |

| Sunday Morning Sunshine, Harry Chapin | 1 | 3.17 | 3.09 | 3.43 | 4.39 | 4.13 | 1.39 |

| Make a Little Magic, Nitty Gritty Dirt Band | 1 | 4.78 | 3.35 | 3.17 | 4.39 | 4.13 | 1.22 |

| Stoned Love, Supremes | 1 | 4.83 | 4.00 | 3.30 | 4.04 | 4.78 | 1.17 |

| Little Old Lady from Pasadena, Jan and Dean | 1 | 4.30 | 4.96 | 3.35 | 4.00 | 5.39 | 2.09 |

| A Dream Goes on Forever, Todd Rundgren | 1 | 4.39 | 3.26 | 3.48 | 4.00 | 4.00 | 1.13 |

| If You Need Me, Solomon Burke | 1 | 3.74 | 2.39 | 3.30 | 3.91 | 4.22 | 1.74 |

| Give it to me Baby, Rick James | 1 | 5.65 | 4.70 | 3.43 | 3.83 | 4.57 | 1.91 |

| Think of Me, Buck Owens | 1 | 4.43 | 4.26 | 3.13 | 3.52 | 5.22 | 1.13 |

| Wanna Be Startin' Something, Michael Jackson | 1 | 5.09 | 4.91 | 2.52 | 3.52 | 4.30 | 3.39 |

| In the Midnight Hour, Wilson Pickett | 1 | 4.39 | 3.39 | 3.78 | 3.43 | 3.96 | 1.61 |

| Living in the Past, Jethro Tull | 1 | 4.78 | 5.17 | 3.13 | 3.30 | 4.43 | 1.26 |

| Wake Me Up Before You Go Go, WHAM | 1 | 4.13 | 5.00 | 2.91 | 3.17 | 5.43 | 3.39 |

| Beat It, Michael Jackson | 1 | 4.74 | 4.43 | 2.48 | 2.96 | 3.17 | 4.30 |

| All This Time, Sting | 1 | 4.30 | 5.04 | 2.52 | 2.96 | 4.39 | 1.43 |

| Trampled Underfoot, Led Zeppelin | 1 | 5.70 | 4.48 | 3.65 | 2.43 | 3.26 | 1.78 |

| Getaway, Earth Wind and Fire | 1 | 5.65 | 5.22 | 3.04 | 2.39 | 5.13 | 1.87 |

| Averages | | 4.56 | 4.17 | 3.12 | 3.93 | 4.25 | 1.91 |
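The comparison made in the Discussion between "Man in the Mirror" and the full set of excerpts can be reproduced from the table above. The sketch below simply subtracts the all-song averages from that song's mean ratings; the variable names are illustrative, and the values are copied from this table, which differs from the in-text figures by 0.01 in two places, presumably through rounding.

```python
# Mean ratings copied from the Appendix table (all on 7-point scales).
features = ["Quiet", "Slow", "Interval", "Articulation", "Timbre"]
man_in_the_mirror = [3.74, 2.43, 3.83, 5.57, 5.39]
all_songs_average = [4.56, 4.17, 3.12, 3.93, 4.25]

# Positive values mean the song was rated above the all-song average.
differences = {
    name: round(song - average, 2)
    for name, song, average in zip(features, man_in_the_mirror, all_songs_average)
}

for name, difference in differences.items():
    print(f"{name:<12} {difference:+.2f}")
```

The largest departures from the average are in the slowness and articulation ratings, the two features emphasized in the Discussion.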