INTRODUCTION

FADO is a performance practice that was listed as UNESCO Intangible Cultural Heritage in 2011 4. In the context of a PhD dissertation, we have encoded and edited a symbolic music corpus derived from 100 musical transcriptions, identified as fado in the written sources. The constitution of this corpus was an important step in order to lay the foundations of a digital archive of songs associated with the fado practice. This archive is relevant in order to gather, in a single reference, essential information about repertoires, as well as make it easily available to the scientific community and to the public at large. We will share our research experience and further detail the symbolic corpus and the methods and techniques we have used to assemble, process, and test it. These methods are reproducible and suitable for implementation in future research. Therefore, we invite other researchers to extrapolate and make use of these methods in other musical corpora. Having the encoded information, we were then able to experiment and perform analytical and systematic techniques and obtain preliminary results (to be detailed in another paper). It also allowed us to build a proof-of-concept generative model for these songs and automatically create new instrumental music based on the ones in the corpus (Videira, Pennycook, & Rosa, 2017). We hope the description of our endeavors helps us gather support and funding to proceed and scale this work, namely by adding more songs and metadata to the archive, and linking the symbolic data to available audio data. This would allow us on the one hand to refine our analysis and our generative models, hopefully leading in the future to the creation of better songs and, on the other hand, to combine systematic knowledge with ethnographic and historical knowledge leading to a better understanding of the practice.

ON FADO

Fado is a performance practice in which a narrative is embodied and communicated to an audience by the means of several expressive cues and gestural conventions. The fadista styles a melody, highly constrained by the lyrical (prosodic and semantic) content, on top of a harmonic accompaniment usually based on stock figurations and repetitive ostinati. Currently, the accompaniment is often played on a viola (the emic name for a single-course 6-string guitar) and occasionally aided by a bass. The voice and the harmonic layer are complemented with counter-melodic figurations played on a guitarra (a pear-shaped 12-steel-stringed double-course guitar unique to Portugal). Although this ensemble was the most common one during most of the twentieth century, older sources indicate the use of a piano or a single guitarra playing arpeggiated chords. In more sophisticated contexts like the musical theater an orchestra was often used (Castelo-Branco, 1994; Côrte-Real, 2000; Gray, 2013; Nery, 2004, 2010a). Several contemporary examples of live fado performance in the context of tertúlia [informal gathering] can be found in the "Tertúlia" YouTube channel 5.

THE MUSICAL SCORES

The study of past musical examples is a fundamental source to fully characterize and model the musics and sounds of fado. Given that fado performance pre-dates the era of sound recordings, the study of symbolic notation is required to access the music of those periods. There are several transcriptions of fado on musical notation spanning from the second half of the nineteenth century to current days. However, up to date, they were never systematically encoded nor made easily available to the community. Our project is a first contribution to solve that issue.

These transcriptions have different origins. Some of them (Neves & Campos, 1893; Ribas, 1852) were assembled by collectors based on the popular repertoires already in circulation in the streets and popular venues. As such, they represent songs of anonymous origins, probably derived from improvisatory practices that were being orally transmitted. Hence, they are difficult to date with precision, and their reification carries bias from the decisions their collectors made: their values, motivations, social and cultural background (Negus, 1997, p. 69). However, these transcriptions are the only ones available for certain parts of the repertoire that otherwise would be lost. On the other hand, there are other transcriptions representing a parallel practice to the one happening in the streets and popular venues: the practice of stylized songs associated with fado practice by the upper classes in their own contexts, that evolved and coexisted with the oral tradition. There are numerous examples of songs titled or identified as fado composed in musical notation, following processes of western art music tradition. Some notated fados emerged from schooled composers expressing their individual creativity (namely Reinaldo Varela, Armandinho, Joaquim Campos, Alain Oulman), while others were the result of a flourishing urban entertainment industry combining the printed music business and the musical theater. Established composers linked with the music industry (namely Rio de Carvalho, Freitas Gazul, Tomás del Negro, Luís Filgueiras, Carlos Calderón, Alvez Coelho or Filipe Duarte) were commissioned to compose songs from scratch based on what was perceived to be the popular taste. These songs were then performed by renowned artists on the theater shows and the most successful ones were edited and sold to the masses, expanding their audiences. Many songs composed and edited in this manner were labeled as fados and recognized by the community as such, being gradually incorporated into the canonical repertoire later on (Losa, Silva, & Cid, 2010; Oliveira, Silva, & Losa, 2008).

Both the transcriptions derived from oral tradition and the newly composed songs in sheet music format, were meant to be played by upper class women in their homes. The distinctive accepted social model at the time was the one imported from Paris and thus it was of the utmost importance for the women to learn French and to play piano at a basic level (Nery, 2010b, p. 55). According to Leitão da Silva, the notion that the musical scores were meant for the domestic entertainment market led collectors to present them according to the conventions of commercial sheet music editions at the time, namely by adapting their harmonies and instrumentation, softening their lyrics, and dedicating each piece to an aristocratic lady (Leitão da Silva, 2011, pp. 257–258). As such, most songs are presented for solo piano or voice and piano, with the melodic material in the upper staff and the harmonic material in the bottom one, often with the lyrics in between. There are usually tempo indications, but few articulations or dynamics. Our priority was to encode already digitized sources, including the oldest ones available. At a later stage we expect to proceed with others. The Cancioneiro de Músicas Populares, compiled by César das Neves and Gualdim Campos (Neves & Campos, 1893), contains several traditional and popular songs transcribed during the second half of the nineteenth century, 48 of which categorised as fados by the collectors, either in the title or in the footnotes. This set was complemented with a transcription of fado do Marinheiro, provided and claimed by Pimentel to be the oldest fado available (Pimentel, 1904, p. 35). We also had access to the photographs of the collection of musical scores from the Theater Museum in Lisbon. This collection comprises more than 1,000 individual popular works, gathered from several sources, that were previously digitized by researchers from the Institute of Ethnomusicology at the Universidade NOVA de Lisboa (INET-MD). Since they are sorted arbitrarily, we just encoded all the scores categorized as fados along the way until we reached the number of 51, thus rounding up our initial corpus to a total of 100 encoded transcriptions. This number is arbitrary and it was just a convenient sample size given our limited time and budget. There are at least 200 more scores categorized as fados in this collection and we intend to encode them as well in the future. The corpus is now available as an open digital archive and, given time and support, continuous updates and improvements are to be expected [3]. The archive consists of the musical scores (in PDF and MIDI format), and metadata in the format of relevant information (sources, designations, date(s), authorship(s)) for each fado, as well as analytical, formal and philological commentaries. The creation of this new digital object is relevant for archival and cultural heritage purposes.

THE ENCODING PROCESS

Initially, two versions of the corpus were made: an Urtext version representing the faithful encoding of the transcriptions with minimal editorial intervention (which is currently online), and a first attempt at a (critically) edited version that is being progressively improved with the expectation of replacing the current one.

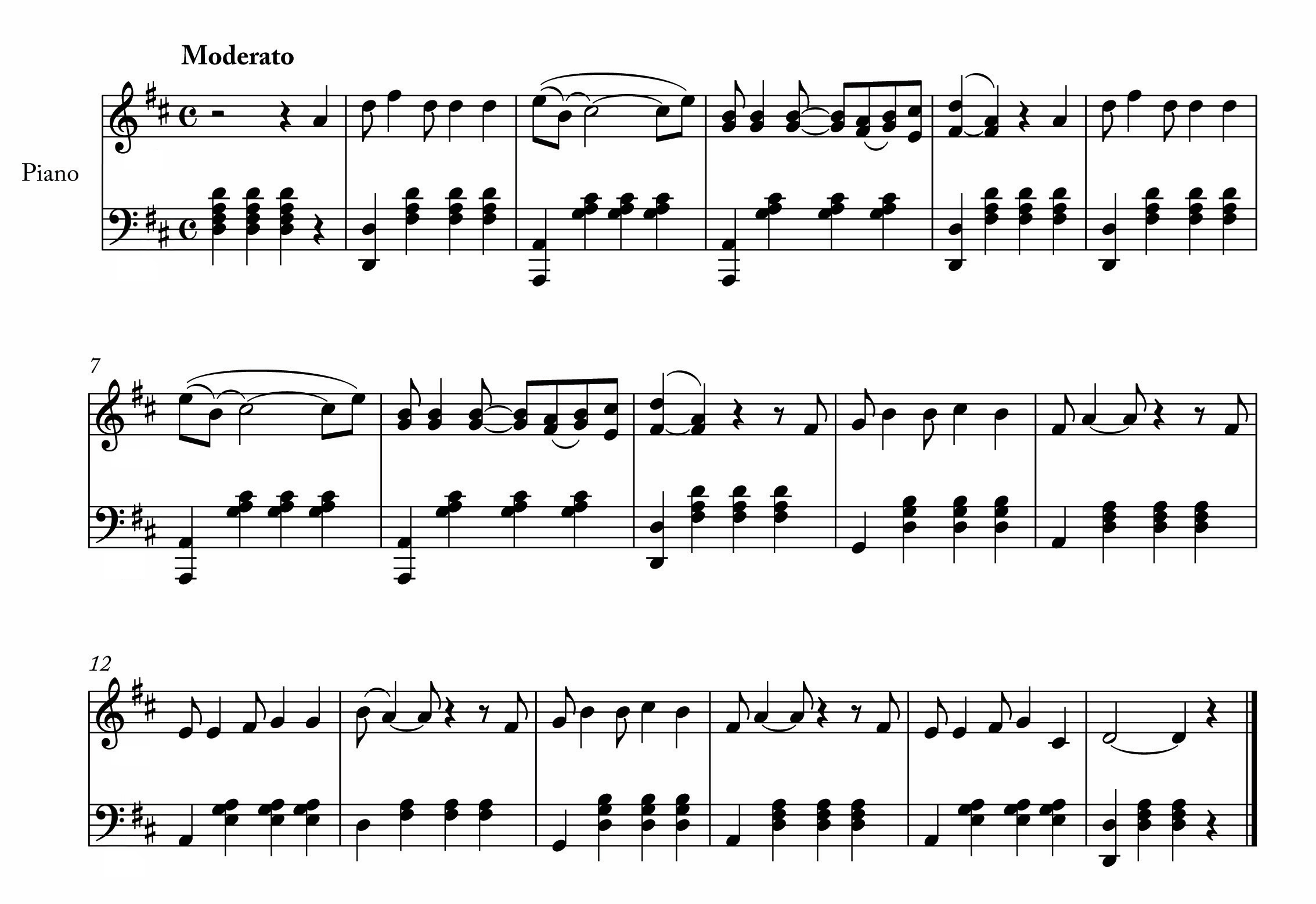

The encoding technique consisted of giving a unique identifier number to each fado occurrence found in the sources mentioned and then to re-transcribing each one into a Music Notation program. In this case we have used Finale (MakeMusic Software). Then, we cleared the document of minor errors, incongruences and mistakes, using philological techniques, following a principle of minimal editorial intervention (Bardin, 2009; Cook, 1994; Feder, 2011; Grier, 1996). This originated an Urtext version of the corpus. A sample is presented in Figure 1.

Fig. 1: Urtext transcription of A menina dos olhos negros [The girl with black eyes], originally found in (Neves & Campos, 1893, p. 84)

The following step of the method consisted in a critical edition based on standardization processes. These processes can be described formally using techniques coming from musical analysis, namely semiotic and syntagmatic analysis (Cook, 1994, p. 151). Our goal was to make the materials uniform and easily comparable among themselves. The content of the database refers to musical scores as early as the nineteenth century. As such, the first written definition of fado as a musical genre, presented by Ernesto Vieira, in his musical dictionary, was used as a model:

a section of eight measures in binary tempo, divided into two equal and symmetric parts, with two melodic contours each; preferably in the minor mode, although many times it modulates into the major, carrying the same melody or another; the harmony being built on an arpeggio in sixteenth-notes alternating tonic and dominant chords, each lasting two measures (Vieira, 1890, pp. 238–239).

All transcriptions were standardized into the same time signature and beat unit as Ernesto Vieira's model. In this case, binary tempo with a quarter note as a unit. Phrases lasting four or eight measures were expected, so when we saw a phrase nine measures long, among others lasting eight, we assumed that this was a textual error. Several fados were notated in either quaternary or binary meter but with doubled note durations, originating sections sixteen measures long. In those cases, the note durations were halved. The melody was used as a main guide to perform segmentation and identify repetitions and patterns of recurrence. Once the formal sections were identified, double measure lines were added so that the transitions were easily identifiable.

The corpus at this stage was called the Edition corpus, since it was critically edited and it is the most complete and standardized corpus possible, while still retaining all the original information from the sources. We hope to refine this edition with more time by carefully proof-reading each score, and professionally engraving them in order to obtain a better graphical display and include some of the metadata currently missing. Since many original sources lack of articulatory or dynamic instructions they seem somewhat distant from the actual practice and their sonic output is rather mechanical and dull. In an ideal scenario all scores should be played in a MIDI keyboard by the same human performer following the conventions of the time. However, this was neither efficient nor feasible in our context. Hence, after some trial and error, we decided to encode the MIDI files using the humanizer preset "marching band" in order to simulate a consistent human performance of all scores (current and future), no matter how large the sample size. Our expectations being that the MIDI files obtained containing articulatory and gestural fluctuations better reflect a human performance of these songs, thus better representing the practice. A sample from this corpus is presented in Figure 2.

THE INTERNAL CONSISTENCY OF A CORPUS

The constitution of our symbolic music corpus raised some issues that we inferred were similar to those anyone working in music style imitation in particular, or on systematic musicology projects in general, might face. How can we guarantee that any symbolic corpus is consistent and able to provide reliable source material for a subsequent process? How does one know that a corpus is effectively a solid representation of a given genre or style and not a mere amalgamation of very different tunes?

A corpus is internally consistent when it is perceived as a cohesive, congruent, standalone entity in relation to other corpora; when most of its elements share enough common features among themselves. We realized that many genres can be successfully identified by very specific traits or a combination of features. Therefore, if a given musical corpus is internally consistent, and can indeed represent a genre or a style of its own, this means there are more affinities among its elements than with the elements of other corpora. Consequently, the elements will form a cluster. On the other hand, if a given corpus is not consistent, this means the data will either be dispersed or the elements will cluster with elements of other corpora.

There are several studies concerning automatic feature extraction and classification of symbolic corpora (Conklin, 2013; Hillewaere, Manderick, & Conklin, 2009; Knopke, 2008; Mayer et al, 2010; Pérez-Sancho, De León, & Inesta, 2006). In our research we have found particularly relevant both a study conducted on Cretan Songs, using music information retrieval systems (Conklin & Anagnostopoulou, 2011), and very recent attempts at automatic music classification of fado based on sound recordings (Antunes et al, 2014). We learned that it is possible for a system to automatically extract information from encoded music files, and then to automatically perform a supervised classification, since such files will present distinctive features (derived from the encoded musical information in symbolic data format). These features will allow them to be closer to certain clusters of files previously identified as a given genre or style than others. These previous works inspired us to create our methodology, which we will now describe.

Our methodology consists of defining part of the corpus to be tested as a new class and training the computer with that information. Then, we provide the computer with corpora of MIDI files representing (some of) the other genres and styles from the universe (what one could call a set of classes representing "the world's musics"). The computer is also trained with that information. At this point, one asks the computer to automatically classify the remainder of the corpus to be tested. If the corpus is not consistent that will mean its MIDI files will be very different from each other, not sharing enough common features (the data will not cluster). As such, the probability that the computer will successfully classify them as exemplars of the class previously defined and trained will be low. Odds are that the computer will be confused and will either classify them as exemplars from the classes representing "the world's musics" or will be unable to classify them at all, not having enough positive features in common with any class present. On the other hand, if the corpus is indeed consistent, then this means that most MIDI files from the corpus will share many common features, forming a cluster. Hence, it is expected that the computer will be able to successfully classify most MIDI files as belonging to the class previously defined and trained.

We explored some automatic music information retrieval tools available. These were the MIDI Toolbox (Eerola & Toiviainen, 2004), available to install in Mathlab, or the Music21 6, a toolkit for computer aided musicology that is fairly accessible to use and program in Python (Cuthbert & Ariza, 2010). We started programming with Music21. However, after an initial period of experimentation, we were not satisfied with the steepness of the learning curve versus the efficiency of the results, and instead discovered the set of tools developed by Cory McKay, Bodhidharma and JMIR (McKay, 2004, 2010). Bodhidharma was of particular interest and ended up being our choice based on its effectiveness when compared to the competition, usability, ease of the learning curve, flexibility, feature selection, completeness and diversity of the corpora already present, and ability to import data in MIDI format and export results instantly into spreadsheets. Looking at the results obtained in a symbolic genre classification competition held, the 2005 MIREX Contest 7, Bodhidharma was also the best option taking into account the available systems. Having all this in mind, we decided to invest our time in this particular program. All tests were performed and features retrieved using this software and its pre-assembled corpora.

Cory McKayʼs software Bodhidharma is able to extract up to 111 different features from MIDI files. These features are divided into seven different categories: instrumentation, musical texture, rhythm, dynamics, pitch statistics, melody and chords. A list of all the features implemented and the ones used in our tests is presented in Table 1.

| Code | Feature | D | U | Code | Feature | D | U | Code | Feature | D | U |

|---|---|---|---|---|---|---|---|---|---|---|---|

| I-1 | Pitched Instruments Present | MD | R-4 | Strength of Strongest Rhythmic Pulse | OD | v | P-7 | Number of Common Pitches | OD | x | |

| I-2 | Unpitched Instruments Present | MD | R-5 | Strength of Second Strongest Rhythmic Pulse | OD | v | P-8 | Pitch Variety | OD | x | |

| I-3 | Note Prevalence of Pitched Instruments | MD | R-6 | Strength Ratio of Two Strongest Rhythmic Pulses | OD | v | P-9 | Pitch Class Variety | OD | x | |

| I-4 | Note Prevalence of Unpitched Instruments | MD | R-7 | Combined Strength of Two Strongest Rhythmic Pulses | OD | v | P-10 | Range | OD | x | |

| I-5 | Time Prevalence of Pitched Instruments | MD | R-8 | Number of Strong Pulses | OD | v | P-11 | Most Common Pitch | OD | x | |

| I-6 | Variability of Note Prevalence of Pitched Instruments | OD | R-9 | Number of Moderate Pulses | OD | v | P-12 | Primary Register | OD | x | |

| I-7 | Variability of Note Prevalence of Unpitched Instruments | OD | R-10 | Number of Relatively Strong Pulses | OD | v | P-13 | Importance of Bass Register | OD | x | |

| I-8 | Number of Pitched Instruments | OD | R-11 | Rhythmic Looseness | OD | v | P-14 | Importance of Middle Register | OD | x | |

| I-9 | Number of Unpitched Instruments | OD | R-12 | Polyrhythms | OD | v | P-15 | Importance of High Register | OD | x | |

| I-10 | Percussion Prevalence | OD | R-13 | Rhythmic Variability | OD | v | P-16 | Most Common Pitch Class | OD | x | |

| I-11 | String Keyboard Fraction | OD | R-14 | Beat Histogram | MD | P-17 | Dominant Spread | OD | x | ||

| I-12 | Acoustic Guitar Fraction | OD | R-15 | Note Density | OD | x | P-18 | Strong Tonal Centres | OD | x | |

| I-13 | Electric Guitar Fraction | OD | R-17 | Average Note Duration | OD | x | P-19 | Basic Pitch Histogram | MD | x | |

| I-14 | Violin Fraction | OD | R-18 | Variability of Note Duration | OD | x | P-20 | Pitch Class Distribution | MD | x | |

| I-15 | Saxophone Fraction | OD | R-19 | Maximum Note Duration | OD | x | P-21 | Fifths Pitch Histogram | MD | x | |

| I-16 | Brass Fraction | OD | R-20 | Minimum Note Duration | OD | x | P-22 | Quality | OD | ||

| I-17 | Woodwinds Fraction | OD | R-21 | Staccato Incidence | OD | x | P-23 | Glissando Prevalence | OD | ||

| I-18 | Orchestral Strings Fraction | OD | R-22 | Average Time Between Attacks | OD | x | P-24 | Average Range of Glissandos | OD | ||

| I-19 | String Ensemble Fraction | OD | R-23 | Variability of Time Between Attacks | OD | x | P-25 | Vibrato Prevalence | OD | ||

| I-20 | Electric Instrument Fraction | OD | R-24 | Average Time Between Attacks For Each Voice | OD | x | M-1 | Melodic Interval Histogram | MD | x | |

| T-1 | Maximum Number of Independent Voices | OD | R-25 | Average Variability of Time Between Attacks For Each Voice | OD | x | M-2 | Average Melodic Interval | OD | x | |

| T-2 | Average Number of Independent Voices | OD | R-30 | Initial Tempo | OD | v | M-3 | Most Common Melodic Interval | OD | x | |

| T-3 | Variability of Number of Independent Voices | OD | R-31 | Initial Time Signature | MD | M-4 | Distance Between Most Common Melodic Intervals | OD | x | ||

| T-4 | Voice Equality - Number of Notes | OD | R-32 | Compound Or Simple Meter | OD | x | M-5 | Most Common Melodic Interval Prevalence | OD | x | |

| T-5 | Voice Equality - Note Duration | OD | R-33 | Triple Meter | OD | x | M-6 | Relative Strength of Most Common Intervals | OD | x | |

| T-6 | Voice Equality - Dynamics | OD | R-34 | Quintuple Meter | OD | x | M-7 | Number of Common Melodic Intervals | OD | x | |

| T-7 | Voice Equality - Melodic Leaps | OD | R-35 | Changes of Meter | OD | x | M-8 | Amount of Arpeggiation | OD | x | |

| T-8 | Voice Equality - Range | OD | D-1 | Overall Dynamic Range | OD | v | M-9 | Repeated Notes | OD | x | |

| T-9 | Importance of Loudest Voice | OD | D-2 | Variation of Dynamics | OD | v | M-10 | Chromatic Motion | OD | x | |

| T-10 | Relative Range of Loudest Voice | OD | D-3 | Variation of Dynamics In Each Voice | OD | v | M-11 | Stepwise Motion | OD | x | |

| T-12 | Range of Highest Line | OD | D-4 | Average Note To Note Dynamics Change | OD | v | M-12 | Melodic Thirds | OD | x | |

| T-13 | Relative Note Density of Highest Line | OD | P-1 | Most Common Pitch Prevalence | OD | v | M-13 | Melodic Fifths | OD | x | |

| T-15 | Melodic Intervals in Lowest Line | OD | P-2 | Most Common Pitch Class Prevalence | OD | v | M-14 | Melodic Tritones | OD | x | |

| T-20 | Voice Separation | OD | P-3 | Relative Strength of Top Pitches | OD | v | M-15 | Melodic Octaves | OD | x | |

| R-1 | Strongest Rhythmic Pulse | OD | v | P-4 | Relative Strength of Top Pitch Classes | OD | v | M-17 | Direction of Motion | OD | x |

| R-2 | Second Strongest Rhythmic Pulse | OD | v | P-5 | Interval Between Strongest Pitches | OD | x | M-18 | Duration of Melodic Arcs | OD | x |

| R-3 | Harmonicity of Two Strongest Rhythmic Pulses | OD | v | P-6 | Interval Between Strongest Pitch Classes | OD | x | M-19 | Size of Melodic Arcs | OD | x |

We refer to chapter 5 of Cory McKay's dissertation for a more complete discussion of all these features (McKay, 2004, pp. 55–68). Notice that McKay uses the term "recordings" in reference to MIDI files (MIDI recordings), even if that term is usually employed to refer solely to sound/audio files.

Bodhidharma uses a hybrid classification system "that makes use of hierarchical, flat and round robin classification. Both k-nearest neighbor [kNN] and neural network-based [NN] classifiers are used, and feature selection and weighting are performed using genetic algorithms" (McKay, 2004, p. 3).

One leaf classifier ensemble is created that has all leaf categories as candidate categories. In addition, one round-robin classifier ensemble is created for every possible pair of leaf categories. Each round-robin ensemble has only its corresponding pair of categories as candidate categories. Finally, one parent classifier ensemble is created for each category that has child categories in the provided taxonomy. Each parent ensemble has only its direct children as candidate categories. Each ensemble is trained using only the recordings belonging to at least one of its candidate categories. Each ensemble consists of one kNN classifier for use with all one-dimensional features and one NN for each multi-dimensional feature. The scores output by the kNN and NN classifiers are averaged in order to arrive at the final scores output by the ensemble. Feature selection is performed on the one-dimensional features during training, followed by feature weighting on the survivors of the selection. Classifier selection and weighting is also performed on the scores output by the kNN and NN classifiers, so that each classifier has a weight controlling the impact of its category scores on the final score for each category output by the ensemble. All selection and weighting is done using genetic algorithms. Actual classification is performed by descending down the hierarchical taxonomy one level at a time. A weighted average of the results of the flat, round-robin and parent classifier ensembles is found for each child of the parent category under consideration, and only those child categories whose scores for a given recording are over a certain threshold are considered for further expansion down the taxonomy tree. The leaf category or categories, if any, that pass the threshold are considered to be the final classification(s) of the recording. (McKay, 2004, pp. 94–95)

As a result, for each music file fed for classification the output consists of all classes whose scores are either over 0.5 or at least 0.25 and are within 20% of the highest score.

Cory McKayʼs software included two different classification taxonomies and corpora, representing the world's musics, which were used in our tests. First, a reduced one for faster evaluation and comparative purposes with other similar studies that were also based on rather small classification taxonomies. Afterward, an expanded one, more suitable for real-life purposes. The reduced taxonomy originally comprised three root categories: jazz, popular and western classical. Each of these was subdivided into three leaf categories. In total the reduced world's musics set consisted of 9 classes with 25 MIDI files each, for a total of 225 MIDI files. We added the fado class (and respective corpus of MIDI files) inside the "popular" root category resulting in a total of 10 classes for classification, which is presented in Table 2. While we concede that this particular corpus is a small one, it is useful for pilot testing and fine tuning, obtaining faster results.

| Jazz | Popular | Western Classical |

|---|---|---|

| Bebop | Rap | Baroque |

| Jazz Soul | Punk | Modern Classical |

| Swing | Country | Romantic |

| Fado |

The expanded classification taxonomy comprises nine root categories, eight intermediary and several child categories for a total of 38 unique classes, to which we have added the fado one. This set of world's musics has the same 25 MIDI files for each class leading to a total of 950 MIDI files, plus the fado MIDI files we have added. This classification taxonomy is presented in Table 3.

| Country | Rap | Western Classical |

|---|---|---|

| Bluegrass | Hardcore Rap | Baroque |

| Contemporary | Pop Rap | Classical |

| Traditional Country | Early Music | |

| Renaissance | ||

| Medieval | ||

| Modern Classical | ||

| Romantic |

| Jazz | Rhythm and Blues | Western Folk |

|---|---|---|

| Bop | Blues | Bluegrass |

| Bebop | Chicago Blues | Celtic |

| Cool | Blues Rock | Country Blues |

| Fusion | Soul Blues | Flamenco |

| Bossa Nova | Country Blues | Fado |

| Jazz Soul | Funk | |

| Smooth Jazz | Jazz Soul | |

| Ragtime | Rock and Roll | |

| Swing | Soul |

| Modern Pop | Rock | World Beat |

|---|---|---|

| Adult Contemporary | Classic Rock | Latin |

| Dance | Blues Rock | Tango |

| Dance Pop | Hard Rock | Salsa |

| Pop Rap | Psychedelic | Bossa Nova |

| Techno | Modern Rock | Reggae |

| Smooth Jazz | Alternative Rock | |

| Hard Rock | ||

| Punk | ||

| Metal |

According to Cory McKay:

the expanded taxonomy developed here (…) made use of an amalgamation of information found in scholarly writings on popular music, popular music magazines, music critic reviews, taxonomies used by music vendors, schedules of radio and video specialty shows, fan web sites and the personal knowledge of the author. Particular use was made of the All Music Guide, an excellent on-line resource, and of the Amazon.com on-line store. These sites are widely used by people with many different musical backgrounds, so their systems are perhaps the best representations available of the types of genres that people actually use. These two sites are also complimentary, in a sense. The All Music Guide is quite informative and well researched, but does not establish clear relationships between genres. Amazon.com, in contrast, has clearly structured genre categories, but no informative explanations of what they mean (McKay, 2004, pp. 81–82).

The discussion of musical categories vastly transcends the scope of this paper, so we are using these taxonomies as they have been presented, and using them as a starting point to demonstrate the potentials of the methodology. For a more in-depth discussion of musical categories we refer to the work of Fabian Holt (Holt, 2007).

TEST IMPLEMENTATION

Intuitively, the fado corpus seems rather dispersed, given that 48 fados come from the same homogeneous source (César das NevesʼCancioneiro (Neves & Campos, 1893)) and all others are individual folios transcribed by several different people, spanning a rather large period. So, in order to obtain a consistent training set of these two different universes, our training sample consisted of the first quartile of the corpus (roughly half of the fados from the Cancioneiro) and the third quartile of the same corpus (half of the fados from the individual folios). Following this criterion we believe we have partitioned the data in a consistent way. We conducted several tests and the results are all summarized in Table 4. However, we will detail and narrate them in chronological order so our thoughts and decision processes are made clear and other researchers can learn from our doubts and flaws, as well, hopefully avoiding them in their endeavors.

In Test 1 we have trained 50 MIDI files from the Edition Corpus using the implemented, one-dimensional, 71 features signaled either with "v" or "x" in Table 1. We decided to discard the features related to timbre, instrumentation and pitch bend messages. Since the fado corpus consists of piano reductions, these features are irrelevant because of this encoding and could create bias. We believe that many other symbolic corpora may suffer from similar encoding limitations, in contrast with sound recordings, therefore one should always be cautious when deciding which features are relevant for comparative purposes. The trial was executed using the ten classes of the reduced taxonomy presented in Table 2. The resulting classification of the remaining fados succeeded with a precision of 100%. All fados from the second and fourth quartile of the corpus were successfully classified as fados achieving high scores (min 0.54, max 0.98, avg 0.83). These results led us to think that they were actually too good to be true and we suspected that some bias coming from encoding might have led to this outcome: namely from the fact that all trained fados in the Edition Corpus were encoded using the Finale "marching band" humanizer style, most of them using the same 2/4 time signature, an average base tempo of 78 beats per minute with little fluctuation, and very few dynamics when compared to a probably much wider range of options coming from the rest of the MIDI files of the world's musics set. If, on the other hand, we interpret critically the results of a previous study on the automatic classification of fados performed on sound files (Antunes et al., 2014), there is a precedent for fado to be easily recognizable by a very distinctive set of specific features, namely instrumentation and range. While these characteristics might seem to make the task easier to perform, in fact, in the end they do not help that much. This is because one does not extract from these features valuable information regarding the possibility of fado having unique melodic, rhythmic or harmonic traces. In order to overcome this problem, our solution was to try to eliminate the strong bias these features brought.

In Test 2, we decided to use the Urtext corpus instead, with all the small errors, incongruencies, and different time signatures it contains. This corpus was encoded without any humanizer preset. We decided to train the database using only 49 features – the one-dimensional ones directly linked to rhythm, melody and harmony, signaled with "v" in Table 1 – deliberately discarding all features related to timbre, instrumentation, pulse, dynamics and even time signature. Our main concern, at this point, was to understand if the fado corpus could be recognized and classified only based on rhythm, melodic and harmonic features. We used the 10 classes of the reduced taxonomy, presented in Table 2, and its 225 MIDI files representing the world's musics. The result was still surprising: 48 fados were successfully classified as fados (a precision of 96%), the classifications achieving high scores (min 0.54, max 0.97, avg 0.81) which indeed indicates the strong consistency of the corpus. The two MIDI files not classified as fados were outliers. Number 076 is one of the fados originally in 3/4 and, as it resembles a light waltz configuration, was classified either as baroque with a score of 0.31 or romantic with 0.23. These low values, none of them achieving even near the threshold of 0.5, just show how the system was confused by this particular piece, which mixes traces from several genres. When one looks at this particular score, in its Urtext version, one can easily understand why the system was led into classifying it under the classical root category. The second outlier was work number 091, which was classified as bebop with 0.42. The low score indicates also a great degree of uncertainty about this particular work. When one looks at the score, one easily understands the ambiguity: it is a fado with very peculiar and complex contrasting rhythm figurations, chromatic passages, ornamental figurations, and it uses at least four different voices. The sophistication of this particular arrangement (which is also one of the most recent ones) when compared to the rest of the corpus clearly singles it out. Still, we have to add that both these works must have something of fado about them, since they were not clearly classified as any other genre, remaining ambiguous.

In Test 3 we opted for refining the process and used the same Urtext corpus with the same 49 features based only on rhythmic, melodic and harmonic traces. However, we used the 39 classes from the expanded taxonomy presented in Table 3, and the total world's musics set of 950 MIDI files, plus 50 fados as a training set. We wanted to know whether increasing the complexity and array of possibilities would confuse the system and whether the peculiarities of the database would start to be singled out more often. The results show that 45 fados were still successfully classified as fados (a precision of 90%) with very relevant scores (min 0.46, max 0.97, avg 0.76). Although, as expected, the precision rate was lower than in the previous tests, this shows how consistent the corpus is; contradicting our initial intuitions after all. Two of the fados, the MIDI files 044 and 050, were wrongly classified as renaissance with high scores (0.65 and 0.75). Our best guess for this fact is the relevant percentage of ornamental figurations and embellishments appearing in these scores. MIDI files number 091 and 097 were classified as unknown. This means that no class was able to gather any score above 0.25 and just reveals the ambiguity of these works – number 091 being recurrent in the previous test too. Number 088 also got an ambiguous classification – the software chose to classify it as ragtime with a score of 0.51 but also as fado with 0.86. This apparent contradiction comes from the fact that fado is a leaf category from the root category folk, while ragtime is a leaf category of the jazz root category. When the system assumes a first classification under the jazz root, then is has difficulties in finding a suitable child class. On the other hand, if the system opts to classify it as a folk root as a second choice, then it clearly nests it on the fado child class. The same ambiguities seem to arise with MIDI file 079, as it is classified not only as fado with 0.87, but also as ragtime with a strong score of 0.63. A closer look at the scores of these works reveal how, in fact, many idiosyncrasies typical of ragtime are present. We point out how work number 041 also raised doubts: although it was classified successfully as fado, it only achieved a score of 0.51, displaying a second choice of country blues at 0.38.

In all of these tests one can notice how the fado training set is actually larger than the other classes. Since the original database was provided with 25 samples for each class we also wondered what would happen if we trained the fado class with 25 samples as well, in order to avoid a bias in this respect. Therefore, in Test 4 we kept the same conditions of Test 3. However, we trained the classifier with 25 random fados from the set and then asked the software to classify the remaining 75. We were expecting that this could add some confusion since now there was much less initial information available to create a fado profile. We felt this step had to be taken in order to eliminate any bias created by the over-representation of fado in the training stage. The results of this trial somewhat confirmed our expectations: the system was more confused and less effective in recognizing as fado the 75 remaining samples. Nevertheless, the classifications did not spread randomly among all other classes; instead, they were highly concentrated around very precise ones. The system successfully classified solely as fado 37 MIDI files (a partial precision of 49.3%). Additionally, it made double classifications on 14 MIDI files classifying them either as fado or as country blues (18.7% of the total). This double classification happened because none of the classes got a score above 0.5, with two or more of them being very similar. MIDI file 022, for instance, got a score of 0.44 as country blues and 0.45 as fado, while MIDI file 033 got scores of 0.41 in both those same classes. If one adds up the 14 MIDI files that were classified as possible fados to the other 37, one gets a total of 51 MIDI files successfully classified (68% precision). Then, one has 20 other MIDI files (wrongly) classified solely as country blues, representing 26.7% of the total. Adding up the 14 cases where the system could not decide between fado and country blues, one gets a total of 45.3% of the MIDI files being possible country blues songs. Considering both clusters, we realize that both classes encompass 57 possible cases (76% of the total), which indeed shows that there is some consistency among the corpus, even if it is not as expected. In 11 MIDI files the system returned unknown (14.7% of the total MIDI files), meaning that no class led to a relevant score. This shows that, although these MIDI files might not have been recognized as fados, they were not recognized as any of the other possible classes either. This leads us to believe that our partitioning of the training set influenced and impaired the results. It seems that the training sample of 25 MIDI files was too small to contain some unique relevant traces and the MIDI files containing them were needed as trainers. As seen in the previous tests, with more MIDI files, this was not a relevant problem. Five MIDI files were classified as ragtime (6.7%) which is also a trait present in previous classifications and not a surprise. Finally, MIDI file 088 was classified as romantic (score of 0.4) and 054 as either bluegrass (0.33) or country blues (0.33). It might be added that this last trial is still encouraging. While we thought most of the MIDI files would be very ambiguous, presenting many traces from several different styles, the tests have shown the software having little doubts about the true classification of the works present in the corpus. This was the case, even when using a non-formatted and non-homogeneous version of the corpus, and only relying on harmonic, melodic and rhythmic features. We consider the lack of precision of the last trial rather homogenous, instead of dispersed, since the data clustered heavily around only two classes. The confusion between fado and country blues can be expected given that only rhythmic, melodic and harmonic features were analyzed. fado and country blues share common traits since they pertain to the umbrella of folksongs, what one could call a "daily life singing stories" tradition. We speculate that if one had classes for ballads, romances, modinhas or mornas the confusion might add up. Also, the confusion between ragtime and fado was also to be expected, since all the fado MIDI files are written as piano solo folios following many of the same conventions of the ragtime piano solo folios of the period.

In order to control bias, we decided to search the Internet for recorded MIDI files based on piano reductions, so they could be "somewhat comparable" to the fado piano reductions and act as a control group. We have found the site of Doug McKenzie 8 from which a random sample of 25 MIDI files was downloaded, all supposedly from "jazz related" genres. Therefore, we decided to replicate two of the tests already conducted, but this time refining them with control groups. In Test 5, the process of using the reduced taxonomy with 10 classes, presented in Table 2, was once again repeated. The same 225 MIDI files representing the world's musics used in Tests 1 and 2, along with 50 MIDI files from the Urtext corpus, using 49 one-dimensional features, were used in training. This time, however, we asked the computer to classify not only the 50 remainder fados from the Urtext corpus, but also the 25 jazz-related MIDI files from Doug McKenzie site (as a control group), and 25 automatically generated piano fados using a generative system, as a second control group. Furthermore, we decided to create a third control group with 25 MIDI files of original songs composed by us and encoded using the same software in which we made the transcriptions of the original corpus. This "Author Control" group was created in order to test the bias created by our own idiosyncrasies. It consists of manually composed songs of different genres, styles and flavors, some fados included. Results revealed that 49 fados were successfully classified as fados (a precision of 98%), reinforcing the strong consistency of the corpus with high scores (min 0.46, max 0.97, avg 0.77). The MIDI file 091 was again classified as bebop, but the MIDI file 076 this time was recognized as fado with a score of 0.68. Among the first control group, the jazz-related MIDI files, 12 were classified as bebop, 8 as romantic, 4 as swing and 1 as unknown, which can make sense given the lack of jazz classes available in the reduced taxonomy used. None was classified as fado, which was the main issue at stake. Among the automatically generated piano fado MIDI files, 17 were indeed classified as fado, 4 as romantic, and 4 as modern classical (therefore only 68% precision). This does not represent anything particularly serious or inconsistent at this stage. Within the Author control group, 6 MIDI files were identified as fado, 5 as baroque, 4 as bebop, 4 as traditional country, 4 as romantic, 1 as Jazz Soul, and 1 as unknown. The eclecticism of these choices mirrors well the chaotic assorted character of the group, given the available classes. It also demonstrates that the mere fact of manually transcribing and encoding the pieces using the same software, and human idiosyncrasies, in the end does not correlate with the system identifying the pieces as some particular cluster.

Finally, in Test 6, we decided to improve Test 5 by using the expanded taxonomy with 39 classes, presented in Table 3, and the total database of 950 MIDI files, plus 50 fados from the Urtext corpus, as a training set, and asking the computer to classify the remainder 50 fados, plus the control group of 25 jazz-related MIDI files, the 25 automatically generated piano fados, and the 25 pieces composed by us. In the end, 44 fados were indeed classified as fados (a precision of 88%, with scores min 0.46, max 0.94, avg 0.77), 3 as unknown, 1 as a renaissance, 1 as Celtic and 1 as ragtime. As predicted, there was a little more confusion, but comparable to when we first ran the test. Among the jazz-related control group, 11 MIDI files were classified as cool, 7 as bebop, 6 as ragtime and 1 as smooth jazz. This classification actually seems more consistent than the one obtained with only 10 classes, which was also expected since this expanded taxonomy has many more classes related to jazz. Among the automatically generated piano fado MIDI files, 21 were classified as fado, 3 as Celtic and 1 as bluegrass (therefore, 84% precision), which is also a far better result than the one obtained with only 10 classes. Finally, within the Author Control group, 8 were classified as unknown, 5 as fado, 3 as cool, 3 as bluegrass, 2 as ragtime, 1 as bebop, 1 as modern classical, 1 as baroque and 1 as traditional country. These results reinforce the idea that when a chaotic assortment is combined, one cannot expect to obtain a consistent corpus. In a way, the "Author Control group" acts as evidence that if a corpus is not consistent the data does not cluster and indeed becomes disperse. The results from the Author control group also show how little influence our encoding bias seems to have in the end. Table 4 summarizes the results of all the tests.

| Test Number | 1 | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|---|

| Number of classes | 10 | 10 | 39 | 39 | 10 | 39 |

| Number of features used | 71 | 49 | 49 | 49 | 49 | 49 |

| fado Sample size used for training | 50 | 50 | 50 | 25 | 50 | 50 |

| Jazz-related Control size | - | - | - | - | 25 | 25 |

| Automatically generated fados Control size | - | - | - | - | 25 | 25 |

| Author Control size | - | - | - | - | 25 | 25 |

| fado Sample size supplied for Classification | 50 | 50 | 50 | 75 | 50 | 50 |

| Precision (%) | 100 | 96 | 90 | 68 | 98 | 88 |

CONCLUSIONS

We have transcribed and encoded 100 songs associated with the fado practice and laid the foundations for a relevant digital archive concerning memory and cultural heritage, as well as source material for systematic musicological research and creative artistic purposes. We hope the communication of our efforts and experimentations aid other researchers and help us gather support to proceed with the enlargement and improvement of the archive, the professional engraving and fine tuning of the critical edition of the scores, the refinement of the metadata, and the association of the scores with available sound recordings.

Many musicologists have a background in the humanities or social sciences, are merely computer users, and are neither programmers nor skilled mathematicians. It was in thinking specially about this community that we tried to devise a methodology to test the internal consistency of a corpus that was based on software combining ease of use, friendly graphic user interface, efficiency of resources and data handling and intelligibility of the output.

The results have shown that Bodhidharma was able to correctly classify every fado MIDI file when using all the 71 one-dimensional features available (100% precision). Moreover, even when using only 49 one-dimensional features, in the remaining tests, Bodhidharma was able to identify correctly fado MIDI files with huge margins of precision, above 90% of the time. Even in very precarious conditions, with a very small training sample, and few features, the success rate was still rather reasonable. This leads us to believe that in ideal conditions (namely using all features, including the multidimensional ones) the precision rate will be near perfect. Furthermore, when necessary to skip features or work with reduced corpora sizes, either to optimize resources or to cope with situations where less material is available, it seems the results can still be expected to be very high. The results with control groups have also shown that Bodhidharma is minimally affected by the encoding conditions of the MIDI files. It was also demonstrated that Bodhidharma is relatively immune to MIDI files being manually composed or transcribed, performed live, quantized or not quantized, or automatically generated. As such, the software has shown to be a useful empirical evaluation tool, for musicologists in general, as well.

The proposed methods, although experimental, seem promising as a way of knowing more about the internal consistency of any given corpus, its "families" and look-alike taxonomies. When the world's musics set is large enough, possessing a relevant number of classes, and when any given MIDI file is very different from the ones included in the trained classes, then such a MIDI file will either be classified in the wrong class or it will be classified as unknown.

Our experiments revealed that the software can be very time-consuming and demanding of high computational resources when one decides to use multidimensional features, to employ round robin techniques and larger sets of data. In these cases, calculation times can grow exponentially. However, our experiments hinted that smaller sets, the absence of round robin techniques, and even just partial subsets of specific features might be more than enough to run most tests without severely impairing the results. We speculate that the use of some common sense and prior experience with most symbolic corpora can greatly enhance the results relative to time spent. As shown, in our case, if one is dealing with corpora of piano reductions, then it might be wise to only use melodic, harmonic and rhythmic features, since they best represent what is in fact encoded. At the end of the experimentations we are not sure anymore about our decision to use a humanizer to encode the MIDI files. On the one hand it can be argued that it might contaminate the data and include unnecessary bias (not only because of altering the data per se, but also because the chosen preset might be a poor simulation of the conventions of the time), on the other, the MIDI files without it sound dull and don't seem to reflect a human practice. Furthermore, the automatic performance at least is consistent for all scores and at all times. The impossibility of having a consistent human player playing all the scores on a MIDI keyboard following the conventions of the time presents a difficult challenge and leaves us with no optimum solution.

We feel that adding more songs to the archive will be a crucial step. Though we were not completely satisfied with the idea of a constant numeric over-representation of fado MIDI files when compared to the other classes, the results have also shown that when only 25 MIDI files were used as a training set this was indeed too small a number to conveniently represent the genre as we know it. It was demonstrated that this fact did impair the results. As such, in an ideal scenario, a much larger number of MIDI files and classes should be present in order to have both a proportional and representative set of the worldʼs musics. We were left wondering how the system would behave with a taxonomy of 100 classes, each class being represented by 100 MIDI files, for instance.

The existence of software more advanced than Bodhidharma, namely JMIR, gives us hope that these same methods and techniques can also be employed using sound files and not merely symbolic corpora based in MIDI format. As users we would be very happy if such software would become available in user-friendly versions, namely by having an intuitive GUI. The opportunity to apply these methods using actual sound recordings would greatly enhance the possibilities for further research. Currently we believe the segmentation and processing of complex signals as well as the necessary computational resources necessary to extract meaningful features and to train large sets of data, in sound formats, might still be an issue. Therefore, the use of symbolic corpora can still be advantageous in terms of time and computational resources, specially for the individual researcher.

ACKNOWLEDGEMENTS

We wish to thank the valuable insights of Bruce Pennycook, António Tilly, Isabel Pires and Cory McKay in the preparation of this paper. We also wish to acknowledge the valuable contribution of Fábio Serranito, who proofread the text and made suggestions for its improvement. This article was copyedited by Tanushree Agrawal and layout edited by Kelly Jakubowski.

FUNDING

This work was funded by FCT – Fundação para a Ciência e Tecnologia in the framework of UT Austin-Portugal Digital Media program, grant [SFRH / BD / 46136 / 2008].

NOTES

-

INET-MD and CESEM; FCSH/NOVA; Av. Berna, 26-C 1069-061 Lisboa; (+351) 217908300; http://tiagovideira.com

Return to Text -

CIC.Digital; FCSH/NOVA; Av. Berna, 26-C 1069-061 Lisboa; (+351) 217908300; dedalus.jmmr@gmail.com

Return to Text -

http://fado.fcsh.unl.pt

Return to Text -

http://www.unesco.org/culture/ich/en/RL/fado-urban-popular-song-of-portugal-00563, accessed 30 May 2017.

Return to Text -

https://www.youtube.com/channel/UCJlUZ6RIRgbL7iHfbNdfJhA, accessed 30 May 2017.

Return to Text -

http://web.mit.edu/music21/, accessed 30 May 2017.

Return to Text -

http://www.music-ir.org/evaluation/mirex-results/sym-genre/index.html, accessed 30 May 2017.

Return to Text -

http://bushgrafts.com/midi/, accessed 30 May 2017.

Return to Text

REFERENCES

- Antunes, P. G., de Matos, D. M., Ribeiro, R., & Trancoso, I. (2014). Automatic Fado Music Classification. ArXiv Preprint ArXiv:1406.4447. Retrieved from http://arxiv.org/abs/1406.4447

- Bardin, L. (2009). Análise de Conteúdo. Lisbon: Edições 70.

- Castelo-Branco, S. E.-S. (1994). Vozes e guitarras na prática interpretativa do fado. In Fado: vozes e sombras (pp. 125–141). Lisbon: Electa.

- Conklin, D. (2013). Multiple Viewpoint Systems for Music Classification. Journal of New Music Research, 42(1), 19–26. https://doi.org/10.1080/09298215.2013.776611

- Conklin, D., & Anagnostopoulou, C. (2011). Comparative Pattern Analysis of Cretan Folk Songs. Journal of New Music Research, 40(2), 119–125. https://doi.org/10.1080/09298215.2011.573562

- Cook, N. (1994). A guide to musical analysis. Oxford: Oxford University Press.

- Côrte-Real, M. de S. J. (2000). Cultural Policy and Musical Expression in Lisbon in the Transition from Dictatorship to Democracy (1960s-1980s) (PhD Dissertation). Columbia University, USA.

- Cuthbert, M. S., & Ariza, C. T. (2010). Music21: A Toolkit for Computer-Aided Musicology and Symbolic Music Data. In ResearchGate (pp. 637–642). Retrieved from https://www.researchgate.net/publication/220723822_Music21_A_Toolkit_for_Computer-Aided_Musicology_and_Symbolic_Music_Data

- Eerola, T., & Toiviainen, P. (2004). MIR In Matlab: The MIDI Toolbox. In ISMIR. Citeseer.

- Feder, G. (2011). Music Philology: An Introduction to Musical Textual Criticism, Hermeneutics, and Editorial Technique. Hillsdale: NY: Pendragon Press.

- Gray, L. E. (2013). Fado Resounding: Affective Politics and Urban Life. Durham, NC: Duke University Press. https://doi.org/10.1215/9780822378853

- Grier, J. (1996). The Critical Editing of Music: History, Method, and Practice. Cambridge: Cambridge University Press.

- Hillewaere, R., Manderick, B., & Conklin, D. (2009). Global Feature Versus Event Models for Folk Song Classification. In ISMIR (Vol. 2009, p. 10th). Citeseer. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.158.1512&rep=rep1&type=pdf

- Holt, F. (2007). Genre in Popular Music. Chicago and London: University of Chicago Press. https://doi.org/10.7208/chicago/9780226350400.001.0001

- Knopke, I. (2008). The PerlHumdrum and PerlLilypond Toolkits for Symbolic Music Information Retrieval. In J. P. Bello, E. Chew, & D. Turnbull (Eds.), ISMIR 2008, 9th International Conference on Music Information Retrieval, Drexel University, Philadelphia, PA, USA, September 14-18, 2008 (pp. 147 152). Retrieved from http://ismir2008.ismir.net/papers/ISMIR2008_253.pdf

- Leitão da Silva, J. (2011, September). Music, theatre and the nation: The entertainment market in Lisbon (1865–1908) (PhD Dissertation). Newcastle University, Newcastle, UK. Retrieved from https://theses.ncl.ac.uk/dspace/bitstream/10443/1408/1/Silva,%20J.%2012.pdf

- Losa, L., Silva, J. L. da, & Cid, M. S. (2010). Edição de Música. In Enciclopédia da Música em Portugal no Século XX (Castelo Branco, Salwa El-Shawan, Vol. 2, pp. 391–395). Lisbon: Temas e Dabates/Círculo de Leitores.

- Mayer, R., Rauber, A., Ponce de León, P. J., Pérez-Sancho, C., & Iñesta, J. M. (2010). Feature Selection in a Cartesian Ensemble of Feature Subspace Classifiers for Music Categorisation. In Proceedings of 3rd International Workshop on Machine Learning and Music (pp. 53–56). New York, NY, USA: ACM. https://doi.org/10.1145/1878003.1878021

- McKay, C. (2004). Automatic Genre Classification of MIDI Recordings (MA Thesis). McGill University, Montréal, Canada. Retrieved from http://jmir.sourceforge.net/publications/MA_Thesis_2004_Bodhidharma.pdf

- McKay, C. (2010). Automatic music classification with jMIR. McGill University, Montréal, Canada. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.165.2642&rep=rep1&type=pdf

- Negus, K. (1997). Popular Music in Theory: An Introduction. Wesleyan University Press.

- Nery, R. V. (2004). Para uma história do Fado. Lisbon: Público/Corda Seca.

- Nery, R. V. (2010a). Fado. In Enciclopédia da Música em Portugal no Século XX (Salwa Castelo Branco, pp. 433–453). Temas e Debates.

- Nery, R. V. (2010b). Pensar Amália. Lisbon: Tugaland.

- Neves, C. das, & Campos, G. (1893). Cancioneiro de musicas populares: contendo letra e musica de canções, serenatas, chulas, danças, descantes … e cançonetas estrangeiras vulgarisadas em Portugal (Vols. 1–3). Porto: Typographia Occidental. Retrieved from http://purl.pt/742

- Oliveira, G. A. de, Silva, J. L. da, & Losa, L. (2008). A edição de música impressa e a mediatização do fado: O caso do Fado do 31. Etno-Folk: Revista Galega de Etnomusioloxia, 12, 55–68.

- Pérez-Sancho, C., De León, P. J. P., & Inesta, J. M. (2006). A comparison of statistical approaches to symbolic genre recognition. In Proc. of the Intl. Computer Music Conf.(ICMC) (pp. 545–550). Retrieved from https://grfia.dlsi.ua.es/repositori/grfia/pubs/178/icmc2006.pdf

- Pimentel, A. (1904). A triste canção do sul (subsidios para a historia do fado). Lisboa: Gomes de Carvalho. Retrieved from http://www.archive.org/details/tristecanodo00pimeuoft

- Ribas, J. A. (1852). Album de musicas nacionaes portuguezas constando de cantigas e tocatas usadas nos differentes districtos e comarcas das provincias da Beira, Traz-os-Montes e Minho estudadas minuciosamente e transcriptas nas respectivas localidades. Porto: C. A. Villa Nova.

- Videira, T. G., Pennycook, B., & Rosa, J. M. (2017). Formalizing fado – A contribution to automatic song making. Journal of Creative Music Systems, 1(2). https://doi.org/10.5920/JCMS.2017.03

- Vieira, E. (1890). Diccionario musical contendo todos os termos technicos, com a etymologia da maior parte d'elles, grande copia de vocabulos e locuções italianas, francezas, allemãs, latinas e gregas relativas à Arte Musical; noticas technicas e historicas sobre o cantochão e sobre a Arte antiga; nomenclatura de todos os instrumentos antigos e modernos, com a descripção desenvolvida dos mais notaveis e em especial d'aquelles que são actualmente empregados pela arte europea; referencias frequentes, criticas e historicas, ao emprego do vocabulo musical da lingua portugueza; ornado com gravuras e exemplos de musica por Ernesto Vieira. Gazeta Musical de Lisboa.