People tend to move their hands when they talk. The gestural origins hypothesis posits that humans used physical gestures to communicate before they evolved the capacity for speech (Sterelny, 2012). The evidence for this is that the ability to walk on two feet (bipedalism), which freed the hands from their role in movement, preceded the expansion of the brain and skull that changed the shape of the vocal tract, facilitating speech in human evolution (Marschark & Spencer, 2011; Tattersall, 1999). It may be that the origins of syntax in language are to be found in the distinction between the hand as an agent (noun) and its movement as an action (verb) (Stokoe, 2000). In spite of the development of verbal language, humans still move their hands during speech as this enhances the quality of their communication with others. Even blind people use their hands when they talk to other people, including those they know to be blind too, although they may never have seen others gesture (Iverson & Goldin-Meadow, 1998).

Gesturing is therefore a robust phenomenon that is stable across cultures and contexts (Goldin-Meadow, 1999). The gestures that are used when they are the sole means of communication are formed in a more language-like way, however, than when they accompany speech; they have the equivalent of phonological, morphological and syntactic structure. According to McNeill, "One of the basic facts of gesture life: the gesticulations with which speech is obligatorily present are the least language-like; the signs from which speech is obligatorily absent have linguistic properties of their own" (McNeill, 2000, p. 4). This is what happens in sign-supported English (SSE). Gestures have been differentiated from signs (Kendon, 1988) on what is now termed the 'Kendon continuum' (McNeill, 1992):

spontaneous gesticulation — speech-linked gestures — emblems — pantomime — sign language

where each type of gesture varies according to a number of factors, the most salient being its relationship to speech. Spontaneous gesticulation accompanies speech. Speech-linked gestures are illustrative and can replace words, for example, 'He went [gesture]', or they can be deictic, for example, 'There [points]'. Emblems (or signs) are gestures representing consistent meanings or functions within a particular culture, such as the thumbs-up meaning 'OK', rendering speech unnecessary. Pantomime and true sign language are used, by contrast, in place of speech. Thus communication can involve the vocal and manual modalities separately and combined to different extents.

Although differing in their scope, the taxonomies of gesture produced by Ekman and Friesen (1969), Efron (1972) and McNeill (1992) contain common elements. In addition to emblems (defined above), illustrators can encompass both spontaneous gesticulation and those speech-linked gestures that are made during speech. Ekman and Friesen's original taxonomy encompassed all kinds of non-verbal cues, however, not just those conveyed by gesture: emblems can include uniforms, since they signal authority, and regulators can include eye contact, used in conversation to mediate turn-taking. Gestures are categorised not only by type but also by meaning, as deictic, iconic or metaphoric. As we have seen from the example of pointing, given above, those described as deictic assign meaning to different locations in space (Liddell, 2000) such as places, people and points in time. When a gesture imitates an action it can be described as iconic. For example, a speaker might cup his hand, the palm facing towards him, and bring it towards him, as though bending the branch of a tree, while saying 'He bends it way back' (McNeill, 2000). Metaphoric gestures present an abstract concept, known as the 'referent' of the gesture, via concrete imagery, known as the 'base' of the gesture, which provides the vehicle for the metaphor (McNeill, 1992, p. 80).

GESTURE IN MUSICAL CONTEXTS

Musicians use gestures in many different ways while practising independently, rehearsing together and performing in public. Some gestures are used in the context of speech or are linked to speech but others reflect the performer's ideas about musical shape and motion. The gestures of instrumental musicians, however, are rarely entirely spontaneous, as the physical demands of the musical score contribute to their repeatability over successive performances (Wanderley & Vines, 2006). Musicians develop visual mental representations of the notated score and auditory mental representations when learning, and particularly when memorising, music (Chaffin, Imreh, & Crawford, 2002). These representations are also kinaesthetic, involving proprioception (awareness of the body in space and around the instrument) and other, learned, physical behaviours. For example, singers have been shown to gesticulate in rehearsal, most commonly maintaining a pulse or beating time (King & Ginsborg, 2011). Such movements may have to be suppressed deliberately in performance (Ginsborg, 2009), or they may become expressive performance gestures such as those described by Davidson in her studies of Annie Lennox and Robbie Williams (Davidson, 2001, 2006). Musicians' gestures therefore often reflect rhythms, dynamics, climaxes or pauses within the music itself and, while it may come naturally to singers to gesture while singing as though they were gesturing while talking, these movements may also serve important social, communicative and expressive functions in performance.

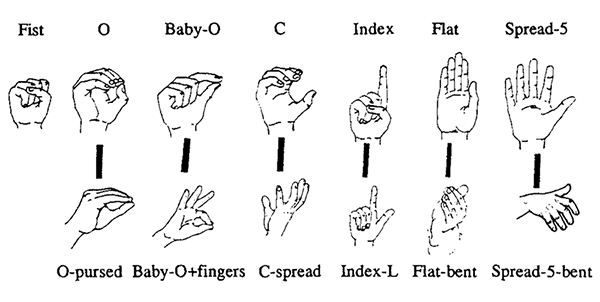

Another form of choreographed gesture in music is the 'ballet mime' performed by the principal characters in classical ballets such as Giselle, Coppélia, Swan Lake and The Sleeping Beauty. Ballet mime uses a vocabulary of well-defined signs to convey love, anger, beauty, listening/hearing, a kiss, an order or command and even "death by crossbow and arrow" (Morina, 2000). Similarly, conductors use a repertoire of emblem-like beating patterns to indicate different temporal organisations, principally two, three and four beats, and it has been shown that the most salient cue for beat abstraction is the absolute acceleration along given trajectories in such beating gestures (Luck & Sloboda, 2009). The conductor's role, however, extends much further than keeping time; he or she must convey expressive intentions to the ensemble so they may be communicated to the audience. Boyes Braem and Bräm (2000) examined the movements of conductors' non-dominant (i.e. non-beating) hands and identified six categories of gestures, performed using the customary handshapes illustrated in Figure 1: i) manipulating objects, including pulling out, taking out of view (such as the emblematic 'rounding-off' gesture), gathering, supporting, hitting, pushing, touching a surface and feeling a substance; ii) showing the path or form of an object, including traditional deictic movements such as pointing; iii) vertical direction such that high equates to more or louder, while low equates to less or softer; iv) portraying sound quality via the upward, 'radiating' gesture, for example; v) indicating a body part such as the chest, ears, lips and nose; and vi) holophrastic interjections including the emblematic 'keep moving', 'pay attention' and 'offering' gestures. Conductors' gestures can thus be simultaneously iconic, metaphoric and deictic, conveying through their location in space and direction of movement both explicit meaning (e.g., 'start/stop playing now') and referential meaning (e.g., 'it should sound like this'). They may be more or less spontaneous at different times; according to Boyes Braem and Bräm, they are influenced by musical settings and styles, audiences and the personality and culture of the conductor. They may be explicitly intentional or wholly responsive to auditory feedback: they both shape, and are shaped by, the sounds of the orchestra. Conductors physically embody (and respond to) music in the psychological present but, at the same time, consciously shape the production of music not yet sounded.

Figure 1. Handshapes used by conductors in non-dominant hand gestures, from Boyes Braem and Bräm (2000, p. 150)

GESTURE IN REHEARSAL TALK

The first author explored the effects of hearing impairment on communication during rehearsal and in the performance of music as part of a wider project investigating the potential use of vibrotactile technology to help musicians with and without hearing impairments to perform together.[1] In one study, three flautists and three pianists were matched by hearing level to form three flute-piano duos, one pair with 'normal' hearing, one pair with mild or moderate hearing loss (25-69 dB) and one pair with profound deafness (95 dB+).[2] The two profoundly deaf musicians, Ruth and Paul, were users of British Sign Language (BSL), having pre-lingual sensorineural deafness. Of the two moderately deaf musicians, William (flute) had an acquired sensorineural deafness caused, in part, by 40 years of professional piccolo playing in a non-acoustically treated orchestra pit. The other, Angie (piano), was born with a moderate sensorineural deafness. Kai-Li (flute) and Manny (piano), the two hearing players, were students at the RNCM when the study was conducted in August 2011. In each session, the players rehearsed and performed two works: the slow movement of Bach's Sonata in E Major ("Adagio"), which was familiar to most players, and a work composed especially for the study to ensure both unfamiliarity and a stylistic contrast, Petite Sonate by Jacob Thompson-Bell.[3] Players were instructed to practise the pieces until they were comfortably 'under the fingers'. Each profoundly and moderately deaf player worked with their matched partner and a hearing partner (two sessions), while hearing players played with all partners (three sessions). Although two players were socially acquainted, no players had performed together prior to the study. The following research questions were posed:

1. What kinds of gestures are used when talking about music?

2. What is the effect, if any, of a hearing impairment on the production of gestures?

3. How does the production of gestures relate to the content of rehearsal talk?

In order to answer Question 3, rehearsal talk was analysed using Seddon and Biasutti's (2009) categories of Modes of Communication, shown in Table 1.

Table 1. Modes of Communication, adapted from Seddon and Biasutti (2009)

| Mode | Description | Example |
| --- | --- | --- |
| Instruction | Instructions to start, verification of the score, instructions about how to play certain sections | 'Yeah, I think you have to stay longer on the E♭ than you are' |
| Cooperation | Discussion and planning to achieve a cohesive performance, addressing technical issues | 'Erm bar 19 – keep the tempo right through and then back to the original tempo?' |
| Collaboration | Evaluation of performance, discussion of remedial action, development of style and interpretation | 'It should be very atmospheric [circular gesture] shall we try to achieve that?' |

Noldus Observer XT9 was used to code gestures as Illustrators or Emblems. The latter were gestures that conveyed explicit, culturally-embedded, referential meaning and included signs borrowed from BSL. Illustrators included all other gestures and were, if possible, coded into one of three further sub-categories: gestures indicating temporal aspects of music were categorised as 'Beating'; those accompanying functional descriptions such as requests for physical cues (e.g., "You could help by moving a little bit more") were categorised as 'Demonstrators'; and gestures drawing on familiar cross-modal mappings (e.g., height to pitch, size to loudness) were categorised as 'Shaping'. All gestures were subsequently mapped to specific verbal utterances transcribed using QSR NVivo v9. Given their prevalence in musical contexts and BSL, a primary focus of the coding scheme was to identify the proportion of emblematic gestures produced and to replicate the coding of Beating gestures in musical contexts in line with King & Ginsborg (2011). As prior research has suggested that gestures in music are often simultaneously iconic, metaphoric and deictic, formal classification under these categories was not attempted. Instead, the characteristics of some example tokens are discussed.

1. What kinds of gestures are used when talking about music?

One hundred and sixty-two gestures were observed in a total of two hours and 23 minutes of talk during rehearsals, representing a frequency of 1.13 gestures per minute.[3] Table 2 displays the frequencies of gestures in each category. Illustrators were produced most frequently and 27% of these were coded into sub-categories using functional or formative descriptors (as Shaping, Beating and Demonstrators). Only 12% of all gestures were identified as Emblems. More gestures were produced during rehearsals of the Petite Sonate (93) than the Adagio (69), most likely due to a proportional increase in time spent talking, but the difference was not statistically significant. Data presented here are for both pieces combined.
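The rate quoted above follows directly from the reported totals. As a minimal sketch, using only figures stated in the text (the variable names are illustrative and not part of the study), the arithmetic can be reproduced as follows:

```python
# Figures quoted in the text; variable names are illustrative only.
total_gestures = 162
talk_minutes = 2 * 60 + 23  # two hours and 23 minutes of rehearsal talk

gestures_per_minute = total_gestures / talk_minutes

# Gestures observed in rehearsals of each piece, as reported in the text.
by_piece = {"Petite Sonate": 93, "Adagio": 69}
share_by_piece = {piece: n / total_gestures for piece, n in by_piece.items()}

print(f"{gestures_per_minute:.2f} gestures per minute")
for piece, share in share_by_piece.items():
    print(f"{piece}: {share:.0%} of all gestures")
```

The two per-piece counts sum to the overall total, which is why the data for both pieces can be combined without loss.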

Table 2. Frequency of gestures by mode of communication

[Table 2 body not recoverable: only the row labels Instruction, Cooperation, Collaboration and Total survive.]

2. What is the effect, if any, of a hearing impairment on the production of gestures?

As shown in Table 3, profoundly deaf players made more gestures than both moderately deaf and hearing players, and produced more Illustrators than hearing players. These differences were shown to be statistically significant using Kruskal-Wallis tests (as the data were not normally distributed) and post-hoc comparisons using a maximum of two Mann-Whitney tests with Bonferroni corrections. Too few gestures of other types were observed for further differences attributable to the players' hearing level to be identified. While no effect of partner's hearing level was found overall, a trend was observed such that hearing players made more gestures the greater the level of their partners' hearing loss (hearing, 5; moderate, 10; profound, 16).
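The post-hoc procedure described above can be illustrated with a short sketch. It is not the authors' analysis: the per-session counts below are hypothetical (the raw data are not given in the text), the family-wise alpha of .05 is an assumption, and the function name is illustrative. The sketch computes the Mann-Whitney U statistic by direct pair counting and the Bonferroni-adjusted threshold for two comparisons; it stops short of a p-value, which would normally be obtained from a statistics package.

```python
def mann_whitney_u(x, y):
    """U statistic for sample x against sample y.

    Counts the (x_i, y_j) pairs in which x_i exceeds y_j; ties count as 0.5.
    """
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


# HYPOTHETICAL per-session gesture counts, for illustration only.
counts = {
    "profound": [10, 12, 7, 15],
    "moderate": [4, 5, 3, 6],
    "hearing": [2, 3, 1, 4],
}

family_alpha = 0.05  # ASSUMED family-wise error rate; not stated in the text
n_comparisons = 2    # "a maximum of two Mann-Whitney tests"
bonferroni_alpha = family_alpha / n_comparisons

for group_a, group_b in [("profound", "moderate"), ("profound", "hearing")]:
    u = mann_whitney_u(counts[group_a], counts[group_b])
    max_u = len(counts[group_a]) * len(counts[group_b])
    print(f"{group_a} vs {group_b}: U = {u} (maximum possible {max_u})")

print(f"Each comparison is judged against alpha = {bonferroni_alpha}")
```

Dividing alpha by the number of planned comparisons keeps the overall chance of a false positive at or below the family-wise level, at the cost of some statistical power.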

Table 3. Frequency of gestures by players' hearing level

| Gesture type | Profound: Sum | Profound: Median (Range)* | Moderate: Sum | Moderate: Median (Range)* | Hearing: Sum | Hearing: Median (Range)* | Kruskal-Wallis H | p | Significant differences (Mann-Whitney) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Emblem | 15 | 1 (7) | 0 | 0 (0) | 5 | 0 (1) | - | - | - |
| Illustrator | 55 | 6.5 (6) | 30 | 4 (7) | 18 | 1 (5) | 14.1 | .001 | P>H |
| - Beating | 9 | 2 (5) | 2 | 0 (1) | 2 | 0 (1) | - | - | - |
| - Demonstrator | 4 | 0 (3) | 1 | 0 (1) | 4 | 0 (2) | - | - | - |
| - Shaping | 11 | 1 (3) | 4 | 0 (2) | 2 | 0 (1) | - | - | - |
| All gestures | 94 | 10 (13) | 37 | 4.5 (7) | 31 | 2.5 (5) | 17.2 | .001 | P>M, P>H |

* Medians and ranges are per participant, per session, per piece.
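The percentages quoted in the text can be recovered from the sums in Table 3. The sketch below is a consistency check rather than part of the original analysis. It assumes that the 'Illustrator' row counts only general Illustrators, that is, those not assigned to a sub-category; this reading is inferred from the fact that the five rows add up to the 'All gestures' row.

```python
# Sums per hearing level, in the order (profound, moderate, hearing), from Table 3.
# ASSUMPTION: the "Illustrator" row excludes the three sub-categories.
table3_sums = {
    "Emblem": (15, 0, 5),
    "Illustrator": (55, 30, 18),
    "Beating": (9, 2, 2),
    "Demonstrator": (4, 1, 4),
    "Shaping": (11, 4, 2),
}
reported_all_gestures = (94, 37, 31)

category_totals = {name: sum(row) for name, row in table3_sums.items()}
group_totals = tuple(sum(column) for column in zip(*table3_sums.values()))
all_gestures = sum(category_totals.values())

sub_categorised = sum(
    category_totals[name] for name in ("Beating", "Demonstrator", "Shaping")
)
all_illustrators = category_totals["Illustrator"] + sub_categorised

emblem_share = category_totals["Emblem"] / all_gestures
sub_share = sub_categorised / all_illustrators
general_share_of_illustrators = category_totals["Illustrator"] / all_illustrators
general_share_of_corpus = category_totals["Illustrator"] / all_gestures

print(f"Column totals match 'All gestures' row: {group_totals == reported_all_gestures}")
print(f"Emblems as a share of all gestures: {emblem_share:.0%}")
print(f"Illustrators coded into sub-categories: {sub_share:.0%}")
print(f"General Illustrators as a share of Illustrators: {general_share_of_illustrators:.0%}")
print(f"General Illustrators as a share of the corpus: {general_share_of_corpus:.0%}")
```

Under this reading, the shares agree with the figures of 12%, 27%, 73% and 64% given in the text.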

3. How does the production of gestures relate to rehearsal talk content?

Table 2 above shows that the players gestured most often during Cooperative speech, that is, discussion about how to achieve a cohesive performance in terms of ensemble and expressive manner, addressing all technical issues. They gestured only half as often when in Collaborative mode, discussing style, developing their interpretations, evaluating their performances and planning possible remedial action. A focused comparison between Emblems and Illustrators (all sub-categories combined) revealed a significant association between mode of communication and the type of gesture produced, χ²(2) = 15.31, p < .001, such that the odds of an Emblem occurring during Instructive speech were 9.03 times higher than during Cooperative speech and 22.67 times higher than during Collaborative speech. Shaping gestures were unlike other Illustrators, occurring more frequently during Collaborative than during Cooperative speech.
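For readers unfamiliar with these statistics, the following sketch shows how a chi-square statistic and an odds ratio are obtained from a table of mode of communication by gesture type. The counts are hypothetical and chosen only for illustration, so the output will not reproduce the values reported above; the function names are likewise illustrative. A table of three modes by two gesture types has (3 - 1) × (2 - 1) = 2 degrees of freedom, matching the χ²(2) reported in the text.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


def odds_ratio(a_events, a_non_events, b_events, b_non_events):
    """Odds of the event in condition A divided by the odds in condition B."""
    return (a_events / a_non_events) / (b_events / b_non_events)


# HYPOTHETICAL counts, rows = mode, columns = (Emblems, Illustrators).
hypothetical = {
    "Instruction": (8, 12),
    "Cooperation": (6, 80),
    "Collaboration": (2, 50),
}

statistic, dof = chi_square([list(row) for row in hypothetical.values()])
print(f"chi-square({dof}) = {statistic:.2f}")

instr = hypothetical["Instruction"]
for mode in ("Cooperation", "Collaboration"):
    other = hypothetical[mode]
    ratio = odds_ratio(instr[0], instr[1], other[0], other[1])
    print(f"Odds of an Emblem, Instruction vs {mode}: {ratio:.2f}")
```

An odds ratio above 1 indicates that Emblems were relatively more likely, compared with Illustrators, in the first mode than in the second.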

Further analysis of the content as well as the mode of rehearsal talk revealed, first, that 54 of the 162 gestures were produced while the players were discussing tempo and ensemble synchrony, specifically: i) the use of rubato, ii) maintaining a regular beat, iii) not being together in time, and iv) moving forward. Second, 30 of the 162 gestures occurred during pauses in utterances, replacing words (e.g., "Was that better, that feeling of [gesture]?"), and can be described as 'speech-linked gestures'. Of these, 14 occurred at the end of a phrase or sentence.

EXAMPLES AND DISCUSSION

The prevalence of Illustrators over Emblems observed in the rehearsals suggests that, unlike conductors, the players did not draw upon a common repertoire of gestures (Boyes Braem & Bräm, 2000). Although fewer in number, Emblems tended to be either universal, such as thumbs-up or OK gestures, or BSL signs representing bar numbers, which is consistent with their association with Instructive speech. It is likely that the profoundly deaf musicians produced more gestures than the moderately deaf or hearing musicians because they were accustomed to communicating through BSL. The results also show that the spontaneous gesticulations of deaf musicians prompted the same behaviour in the hearing musicians, an effect that may have been caused by requests for clarification (Holler & Wilkin, 2011) or by behavioural mirroring processes (Skipper, Goldin-Meadow, Nusbaum, & Small, 2007). While previous studies of speakers' gestures have shown that roughly a quarter occur during silent pauses (Beattie & Aboudan, 1994; Nobe, 2000), a slightly smaller proportion (19%) was found in the present study. This may be attributable to the fact that rehearsal talk differs from other kinds of talk, in that its purpose is music-making, with which it is interspersed.

The majority of Illustrative gestures (73%) could not easily be classified as Shaping, Beating or Demonstrator gestures. These general, Illustrative gestures, representing 64% of the total corpus, were polysemous in that their meaning and form depended on musical and verbal contexts. Their prevalence during Cooperative speech suggests they were best suited to supporting the communication of a variety of musical ideas including phrasing, dynamics and tempo. In contrast, Shaping gestures were produced most often during Collaborative speech, suggesting that cross-modal associations may influence the systematic formation of these gestures and, in turn, facilitate the comprehension of high-level, abstract concepts of style and interpretation. In the following sections we explore this premise by presenting examples of specific gestures relating to pulse, rubato, tempo, ensemble synchrony and 'shaping', and discuss how they represent musical meaning.

i) Pulse

The players often referred to the importance of keeping a regular beat when discussing tempo, accompanying utterances such as "It's just very, very precise" (Paul) and "But I'm just keeping my quavers going" (Ruth) with Illustrators, some of which could be classified as Beating. For example, Paul consistently used the same gesture when talking about the need to maintain a consistent, steady beat (see Figure 2 below): palms flat, facing in at chest height and 'chopping' in one or two short downward motions. In addition to the gestures analysed in the present study, Paul conducted with his left hand while playing the piano: a way of maintaining the pulse demonstrated by pianists in other research (King & Ginsborg, 2011).

Figure 2. Paul's 'chopping' gesture

ii) Rubato

The use of rubato was an issue for the two profoundly deaf players, Ruth and Paul, in their rehearsals. Paul reported that he could not hear the high register of the flute and Ruth was only able to discern the general rhythms of the piano accompaniment when she herself was playing. They therefore had to discuss the use of rubato in advance. While Paul preferred to maintain a regular pulse in the Bach Adagio, for the purposes of ensemble synchrony, Ruth wanted more flexibility, for the purposes of expression. Their different views on rubato were reflected in the ways they gesticulated when they talked about it. For example, the final word of Paul's statement "When I play Bach, I rarely shift that" was accompanied by his chopping gesture, which he then expanded and contracted as if the block of time it indicated had begun to breathe (Figure 3), demonstrating that he conceptualised rubato as the departure from a steady beat.

Figure 3. Paul's 'breathing-block' gesture for rubato

By contrast, when Ruth asked for "A little bit more [gesture]", she gestured at the end of the phrase instead of saying explicitly what she wanted, pulling her hand gently towards her, holding an imaginary rope, then releasing it (see Figure 4). The handshape she used for this gesture is not in the standard repertoire of conductors' gestures but is used to form many different signs in BSL, in which Ruth is fluent, and can mean many different things, depending on the form and context of use.

Figure 4. Ruth's 'pull-release' gesture

Similarly, when Ruth said "You just have to listen out for a natural stretch" she made the metaphoric gesture illustrated in the left-hand panel of Figure 5 (note that it could have been described as iconic had she been referring to stretching fabric). Paul replied "But, if you do it too much, then, by the time you get to the 6th bar it actually feels like [gesture]", making the 'pulling apart' gesture shown in the right-hand panel of Figure 5. It is revealing that the gesture began with both hands together, representing the players' synchrony; Paul clearly did not feel that pulling apart was desirable. The referents of both musicians' gestures were therefore very similar but the bases of the metaphor were conceived differently, revealing differences in both the players' conception of rubato and their opinions about its use.

Figure 5. Ruth and Paul's gestural representations of rubato as stretching and pulling apart

iii) Keeping going and slowing down

William (flute) and Angie (piano), both moderately deaf, spent much of their rehearsal discussing ways of moving forward without slowing down. Angie quickly identified that William wanted to take the Adagio slower than she did: "Am I pulling you along?" She repeatedly used C- and C-spread handshapes from the standard repertoire of conductors' gestures (see Figure 1) in a 'rolling forward' action as she argued for a slightly faster tempo, aiming towards cadential points. She did not use this rolling forward gesture consistently, however; it also accompanied the comment "I wonder whether it's more of a change of colour than dynamics?" Ruth used gesture to indicate slowing down. As she was saying "I was wondering towards the end, was I slowing down too much?" she slowly moved her arm down and away from her body, as shown in Figure 6. At the same time she made small circles with her hand, as if tracing the outline of a ball rolling down a hill.

Figure 6. Ruth's 'slowing down' gesture

iv) Ensemble (a)synchrony

As we have seen in the section on rubato, it was difficult for the two profoundly deaf musicians to establish and maintain ensemble synchrony, so much of their talk in the first rehearsal was on this topic. Paul indicated lack of 'togetherness' using a variant of his 'breathing-block' gesture (see Figure 3), with both palms facing towards him but angled towards Ruth. Spontaneous gesticulation was not limited to the players with hearing impairments, of course. The flautist Kai-Li illustrated ensemble asynchrony by holding her two hands flat, palms down, and waving them up and down alternately to show the two musicians playing out of time with each other.

v) Other forms and shapes

It should be clear from the foregoing that, while it might be assumed that all musicians conceptualise pulse, rubato, tempo and (a)synchrony in similar ways, this is not in fact the case. When the players were in Collaborative mode, the majority of the gestures they produced were Shaping gestures, drawing on cross-modal associations to indicate duration, volume and texture. For example, in response to Ruth's request for "A little bit more [pull-release gesture, see Figure 4]" Paul asked "Volume [Gesture 1] rather than [Gesture 2]?" Gesture 1 consisted of moving his hands up and out, palms down with fingers spread, indicating an increase in size. Gesture 2 was a variation of the breathing-block movement (see Figures 3 and 7) to indicate flexibility. In an exchange with Ruth, Manny, the hearing pianist, repeatedly used the same gesture drawing on the association between height as well as size and volume:

Manny: I think we could come down [gesture] a bit more…

Ruth: What do you mean, "down"? Tempo?

Manny: No, er – vol- dynamic. I think we can probably just start a bit quiet [gesture] in areas and also peak higher [gesture] in other areas, so it's more of a sense of shape perhaps?

In each case he moved his hand up and down between the levels of his head and his chest, using a flat handshape with his palm facing down and his fingers together.

Figure 7. Paul: "Volume rather than ..?"

Some cross-modal associations may not be so intuitive. Angie clarified Kai-Li's suggestion for a "darker, more mellow kind of sound" by asking "So you would like a darker [gesture] sound from me?" On the word 'darker', she moved both her hands quickly up and down towards the piano keyboard several times, her fingers spread, bent and tense, suggesting an association between verticality, or possibly weight, and tone colour: down or heavy with dark; up or light with light. In fact, Angie's solution involved playing into the keys, pushing them slowly down into the key bed to reduce the percussive brightness of the piano sound, linking this visual association with a physical, technical, performance strategy.

Lastly, in addition to the metaphorical associations above, several gestures illustrated spatial aspects of music more literally. For example, Angie produced the following gestures while arguing for a better sense of direction: "And it all goes to the fact that if we're thinking like that [Gesture 1] horizontally and going towards the cadences [Gesture 2] and building up the whole thing architecturally, it can't be boring." Gesture 1 represented 'sweeping' (see Figure 8). Gesture 2 involved the rolling forward movement described above. While these could be described as gestures indicating form, Paul provided an example of a gesture indicating texture, with reference to the specially-composed piece: "Piano-wise, it's got imitation – it's more soloistic – it's almost as if we've got this idea [Gesture 1] and you fiddle around [Gesture 2] over the top!" Paul made Gesture 1 by holding both hands together at chest height using a pointing handshape to indicate the piano part and then, keeping his left hand in place, making a fast, circling movement with the right hand at head height to represent the flute part (see Figure 9). This gesture can be defined as simultaneously deictic, in that it reflects in space the relative locations of the two parts on the notated score, and iconic in that the fast-moving right hand conveys the speed at which the flute passage must be played.

Figure 8. Angie's 'sweeping' gesture

Figure 9. Paul representing the piano and flute parts

CLASSIFYING MUSICAL SHAPING GESTURES (MSGs)

The review that follows addresses, first, issues relating to the classification of Illustrative gestures in musical contexts, or accompanying talk about music. These gestures are referred to as 'musical shaping gestures' or MSGs. Second, the extent to which MSGs may be used to form a sign language of music will be discussed. Lastly, some potential uses of a standardised gestural system for music are explored.

Although there is some evidence here that MSGs may be influenced by repertoires of emblematic gestures used in ballet mime and by conductors, the results reported above suggest that MSGs do not always convey consistent meanings. Rather, they reveal performers' understandings of fundamental musical concepts, which may differ, as we have seen from the example of Paul's and Ruth's gestures for rubato. For example, Angie's rolling forward gesture, with its referent of the abstract concept of keeping going conveyed by the analogy or base of rolling forward, resembles the keep moving gesture used by conductors involving repeated forward circling, classified as a holophrastic interjection by Boyes Braem and Bräm (2000).

MSGs are difficult to classify using existing taxonomies of gesture. In terms of the 'Kendon continuum' they are best described as spontaneous gesticulations in that they reveal the mental imagery of the speaker (McNeill, 1992). As we have shown, however, talk about music lends itself to metaphoric and deictic gestural representation and, as such, MSGs may function as Emblems even if they are not always 'speech-linked'. For example, Paul created a visual description of musical texture, formed of melody and accompaniment, by using concepts of 'placement' within 'sign-space', techniques used in BSL to assign meaning to different spatial locations (Figure 9). If gestures are classified along continuous spectra rather than within discrete categories (McNeill, 2005), MSGs would appear from the present data to represent an optimal use of gesticulation, drawing on iconicity, metaphor, deictics and emblems simultaneously. Explanations for this lie in the very nature of music itself; Goldin-Meadow (1999) suggests that abstract ideas, including those concerning spatial location, and concepts that are as yet undeveloped, may be represented better using gestures than by attempts at verbalisation. Music provides good examples of such abstract ideas. Furthermore, the ability of both music and gesture to represent motion enables depictions of change over time, such as Ruth's 'slowing down' gesture (Figure 6) and Angie's 'rolling forward' gesture. Further research involving parallel coding methods should explore how MSGs might be defined using existing taxonomies of gesture.

The production and comprehension of MSGs appear to depend on stable cross-modal associations: height and pitch; size and loudness; time (rhythm, duration) and length on the horizontal plane. These associations may render certain gestures emblematic and therefore more easily understood by musicians. According to Conceptual Metaphor Theory (Langacker, 1987) their stability across cultures derives from universal perceptual-motor experience. For example, we learn in childhood to expect large objects to be heavier than small objects and to make a louder sound when they fall. These associations inevitably influence our perception of auditory information; Walker (1987) has shown that people with musical training are more likely than those without to associate frequency (pitch) and vertical placement; waveform (form or texture) and pattern; amplitude (loudness or dynamic) and size; and duration (rhythm) and horizontal length. The MSGs observed in the present study included all four types of association. This is not surprising given the evidence that musical parameters such as pitch contour, articulation and dynamic changes can elicit metaphoric associations in a variety of ways and often simultaneously (Eitan & Granot, 2006).

TOWARDS A SIGN LANGUAGE FOR MUSIC

Acknowledging the universal basis of cross-modal associations, their relation to MSGs, and that talk and music are both forms of temporally organised sound, one might reasonably ask whether it is possible to sign music. Sign language interpreters are indeed capable of communicating aspects of music, in real time, by depicting which instruments are playing and providing information about the shape and pitch of melodies and of the rhythmic and structural form of the overall music percept. Empirical evidence also suggests that musical contexts may be favourable to the use of gesture and sign. The parsing of streams of information, whether visual, as in sign language, or aural, as in music or speech, involves Brodmann area 47 of the brain (Levitin & Menon, 2003), and a recent study with deaf signers, deaf non-signers and hearing non-signers has shown that regions of the superior temporal cortex that normally respond to auditory linguistic stimuli not only adapt to perceive visual input (sign language) but also retain their original cognitive functionality (Cardin, Orfanidou, Ronnberg, Capek, Rudner, & Woll, 2013). People with musical training are better at copying gestures (Spilka, Steele, & Penhune, 2010) and autistic children's memory for hand signs accompanied by music is better than for those accompanied by rhythms or speech (Buday, 1995). Conductors can also accurately recognise the emotional content of other conductors' gestures (Wöllner, 2012). These arguments suggest that musical contexts could support the development of a formal sign language of music in the absence of speech, since "while it is possible to produce signs and speak simultaneously, doing so has a disruptive effect on both speech and gesture" (McNeill, 2000, p. 2).

So why does a sign language for music not exist already? The answer is that it has never been necessary, unlike the sign languages that have emerged naturally in communities of deaf people and the hearing people with whom they interact. Attempts to translate music into signs might reveal language-like aspects of music but would not create a true sign language of music: concatenating MSGs like words, end-to-end, would merely form a running visual description of it, a form of visual action notation. Such a translation, like any musical notation, is not capable of eliciting the emotional responses of audible music. Furthermore, the inconsistent use of MSGs suggests that mental representations for music, like emotional responses, are idiosyncratic, making the codification of MSGs in the form of a sign language for music difficult if not impossible. The idea that music is like language is attractive, but music is not the same as language; there are no literal equivalents of phonemes, morphemes and syntax in music, even though composers may use chords, phrases, leitmotifs or other abstractions that can be seen as analogous.

Nonetheless, vocabularies of signs for the production of musical sound have indeed been contrived (Minors, 2012). The stability of cross-modal associations, however, and their apparent role in MSGs suggest that a sign language for music that draws upon them, rather than using arbitrary signs, would be both more natural to produce and easier for musicians and non-musicians alike to understand. While there is no firm evidence from the present study that the MSGs used by one member of each duo were understood intuitively by the other, this could be explored empirically. Goldin-Meadow (2003) presented gesture-speech mismatches in relation to mathematical problem-solving so as to find out the extent to which perceivers understood the intended meaning. This paradigm could well be adapted to the context of speech in music to test the hypothesis that the perceived spatial aspects of music can be reliably translated into three-dimensional, spatial representations using the hands, involving both form and motion, and providing a new way to explore the ubiquity of cross-modal associations and even subjective, emotional responses to music.

POTENTIAL APPLICATIONS

A system for describing music using gestures, beyond those used in the context of conducting, could have its uses. Gesture recognition technology can be used to generate musical sounds, facilitating musical improvisation, creativity and expression. Arfib and Kessous (2002) have attempted to classify gestures and map sounds to gestures and vice versa; Willier and Marque (2002) aimed to "control music by recycling mastered gestures generated by another art [juggling]" (p. 298). Gesture recognition technology for the creation of musical sounds is one of many driving forces behind the computational modelling of the spatial properties of gestures; this relies on the notion of shape: "Shape-related or shape-describing hand movements are a subset of iconic gestures […] An icon obtains meaning by iconicity, i.e. similarity between itself and the referent" (Sowa & Wachsmuth, 2002, p. 22). Building on the quantitative analysis of musicians' gestures (Wanderley, 2002), gesture recognition technology has also been used to analyse movement and gesture in musical contexts (Camurri, Mazzarino, Ricchetti, Timmers, & Volpe, 2003; Castellano, Mortillaro, Camurri, Volpe, & Scherer, 2008).

Technologies for generating sound and music may rely on the abstraction of specific movement cues (Mailman & Paraskeva, 2012) or directly manipulate the sounds of our muscles moving (Donnarumma, 2012). As with artificially created sign languages, they tend, therefore, to produce idiosyncratic gestural vocabularies. Future technologies may be able to utilise the commonalities between musicians' mental representations for music as inferred from their MSGs, for example the relationships between height of gesture and pitch, size of gesture and volume and so on. These could form the basis of a new, more ecologically valid vocabulary of gestures drawing on the metaphors that are used and understood by musicians themselves to refer to structure, rhythm, rubato and texture, some so consistently that they become emblematic. While these are largely implicit — we are not typically aware of our gestures when we are talking (Goldin-Meadow, 1999) — it could be advantageous to make more explicit use of gestures when rehearsing. Gesture recognition technology may enable individuals to make music independently but collaborative music-making will continue to involve social and interactive processes, including talking.

Gesture also has an important role in the teaching and learning of music. The 17 Curwen/Kodály hand signs represent the degrees of the scale and enable users to develop kinaesthetic representations for music: the intervals between the pitches of a melody, consonances, dissonances and even the resolution of suspensions. There is also evidence that singers use gestures including pulsing and beating as a way of forming a kinaesthetic representation of material to be learned and memorized (Ginsborg, 2009; Nafisi, 2010). The North West Deaf Youth Orchestra run by the UK charity Music and the Deaf, consisting of 16 children between the ages of 7 and 16 with varying degrees of hearing impairment, recently performed a work on the theme of fireworks, derived from free improvisation and co-ordinated and conducted by the first author, its co-leader. Four BSL signs were used for different kinds of fireworks, together with a vocabulary of conducting gestures adapted from Erdemir, Bingham, Beck, and Rieser (2012), including 'flicks', 'punches', 'floats' and 'glides' and the gestures drawing on cross-modal associations identified in the present study, to elicit specific rhythmic patterns and changes of pitch and dynamics from the orchestra. Very little rehearsal was needed to establish the meaning and, therefore, the auditory correlates of the MSGs, suggesting that the signs and gestures were understood intuitively by the children.

CONCLUSION

Gesturing while talking about music helps us not only to think about music but also to communicate our ideas about music to others. MSGs can vary from one user to another, revealing differences in the way they conceptualise music. While those observed in the present study included a relatively low proportion of emblematic gestures, or true signs, MSGs often drew on consistent cross-modal associations that seemed to be understood intuitively. An attempt to codify MSGs by drawing on these commonalities would represent a bridging of the gap between the language-like aspects of music and gesture and the true language of sign. There could be advantages in translating music into sign for those who cannot hear music fully or for the purposes of kinaesthetic learning. There could also be advantages in drawing on the intuitive language of gesture to develop a vocabulary that could be learned, shared and used for the creation of music. The opportunity to undertake research with musicians, profoundly deaf since birth and fluent in BSL, provided the rare chance to observe more systematic types of spontaneous gesticulation than those produced by musicians with normal hearing and provided clear visual correlates of their mental representations for musical shape.

NOTES

- The present study was financially supported by the AHRC. The authors would like to thank all respondents who enthusiastically gave up their time to take part.

- Of the participants described in this paper, those with profound deafness were Dr Paul Whittaker OBE, Artistic Director of the UK charity 'Music and the Deaf' and Ruth Montgomery, flautist, teacher and writer. An example of Paul signing with vocal group The Sixteen can be found at http://icls.fimc.net/hearhere/article.asp?id=637345 and he can be contacted at paul@matd.org.uk. Examples of Ruth's performances and signing can be seen at http://www.youtube.com/user/FluteGuitarDuo/videos and her email address is magicflute7@hotmail.com. Those with moderate deafness included freelance music education researcher and piano tutor Angela Taylor ('Angie') who has published her work about older amateur keyboard players in the journal Psychology of Music. She can be contacted on artaylor8@gmail.com. More information about pianist Emmanuel Vass ('Manny') can be found at http://www.emmanuelvass.co.uk.

- A video clip of selected gestures observed in this study and accompanying extracts can be found online: http://youtu.be/WLSKjx0y-DA


REFERENCES

- Arfib, D., & Kessous, L. (2002). Gestural control of sound synthesis and processing algorithms. In: I. Wachsmuth & T. Sowa (Eds.), Gesture and Sign Language in Human-Computer Interaction. Berlin Heidelberg: Springer, pp. 285-295.

- Beattie, G., & Aboudan, R. (1994). Gestures, pauses and speech: an experimental investigation of the effects of changing social context on their precise temporal relationships. Semiotica, Vol. 99, pp. 239-272.

- Boyes Braem, P., & Bram, T. (2000). A pilot study of the expressive gestures used by classical orchestral conductors. In: K. Emmorey & H. Lane (Eds.), The Signs of Language Revisited. An Anthology to Honor Ursula Bellugi and Edward Klima. New Jersey: Lawrence Erlbaum Associates, pp. 143-167.

- Buday, E.M. (1995). The effects of signed and spoken words taught with music on sign and speech imitation by children with autism. Journal of Music Therapy, Vol. 32, No. 3, pp. 189-202.

- Camurri, A., Mazzarino, B., Ricchetti, M., Timmers, R., & Volpe, G. (2003). Multimodal analysis of expressive gesture in music and dance performances. In: A. Camurri & G. Volpe (Eds.), Gesture-Based Communication in Human-Computer Interaction, Vol. 2915, pp. 20-39.

- Cardin, V., Orfanidou, E., Ronnberg, J., Capek, C. M., Rudner, M., & Woll, B. (2013). Dissociating cognitive and sensory neural plasticity in human superior temporal cortex. Nature Communications, Vol. 4, No. 1473.

- Castellano, G., Mortillaro, M., Camurri, A., Volpe, G., & Scherer, K. (2008). Automated analysis of body movement in emotionally expressive piano performances. Music Perception, Vol. 26, No. 2, pp. 103-119.

- Chaffin, R., Imreh, G., & Crawford, M. (2002). Practicing Perfection: Memory and Piano Performance. Mahwah, NJ: Lawrence Erlbaum Associates.

- Davidson, J. (2001). The role of the body in the production and perception of solo vocal performance: A case study of Annie Lennox. Musicae Scientiae, Vol. 5, No. 2, pp. 235-256.

- Davidson, J. (2006). "She's the One": Multiple functions of body movement in a stage performance by Robbie Williams. In: A. Gritten & E. King (Eds.), Music and Gesture. Aldershot: Ashgate, pp. 143-167.

- Donnarumma, M. (2012). Xth Sense, a biophysical musical instrument. Demonstration given at Live Interfaces Performance Art Music, School of Music, Leeds.

- Efron, D. (1972). Gesture, Race and Culture. The Hague: Mouton Publishers.

- Eitan, Z., & Granot, R.Y. (2006). How music moves: Musical parameters and listeners' images of motion. Music Perception, Vol. 23, No. 3, pp. 221-247.

- Ekman, P., & Friesen, W. (1969). The repertoire of non-verbal behaviour: Categories, origins, usage and coding. Semiotica, Vol. 1, pp. 49-98.

- Erdemir, A., Bingham, E., Beck, S., & Rieser, J. (2012). The coupling of gesture and sound: Vocalizing to match flicks, punches, floats and glides of conducting gestures. In: E. Cambouropoulos, C. Tsougras, P. Mavromatis, & K. Pastiadis (Eds.), Proceedings of the 12th International Conference on Music Perception and Cognition and the 8th Triennial Conference of the European Society for the Cognitive Sciences of Music, p. 286.

- Ginsborg, J. (2009). Beating time: the role of kinaesthetic learning in the development of mental representations for music. In: A. Mornell (Ed.), Art in Motion. Frankfurt: Peter Lang, pp. 121-142.

- Goldin-Meadow, S. (1999). The role of gesture in communicating and thinking. Trends in Cognitive Sciences, Vol. 3, No. 11, pp. 419-429.

- Goldin-Meadow, S. (2003). Everyone reads gesture. In: S. Goldin-Meadow, Hearing Gesture: How Our Hands Help Us Think. Cambridge, MA: Harvard University Press, pp. 73-95.

- Holler, J., & Wilkin, K. (2011). An experimental investigation of how addressee feedback affects co-speech gestures accompanying speakers' responses. Journal of Pragmatics, Vol. 43, No. 14, pp. 3522-3536.

- Iverson, J.M., & Goldin-Meadow, S. (1998). Why people gesture when they speak. Nature, Vol. 396, No. 6708, p. 228.

- Kendon, A. (1988). How gestures can become like words. In: F. Poyatos (Ed.), Crosscultural Perspectives in Nonverbal Communication. Toronto: C. J. Hogrefe Publishers, pp. 131-141.

- King, E., & Ginsborg, J. (2011). Gestures and glances: Interactions in ensemble rehearsal. In: A. Gritten & E. King (Eds.), New Perspectives of Music and Gesture. Surrey: Ashgate, pp. 177-202.

- Langacker, R. (1987). An introduction to cognitive grammar. Cognitive Science, Vol. 10, pp. 1-40.

- Levitin, D.J., & Menon, V. (2003). Musical structure is processed in "language" areas of the brain: a possible role for Brodmann Area 47 in temporal coherence. NeuroImage, Vol. 20, No. 4, pp. 2142-2152.

- Liddell, S. (2000). Blended spaces and deixis in sign language discourse. In: D. McNeill (Ed.), Language and Gesture. Cambridge: Cambridge University Press, pp. 331-357.

- Luck, G., & Sloboda, J.A. (2009). Spatio-temporal cues for visually mediated synchronization. Music Perception, Vol. 26, No. 5, pp. 465-473.

- Mailman, J., & Paraskeva, S. (2012). Composition, emergence and the fluxations human body interface. Demonstration at the Sound, Music and the Moving - Thinking Body, Senate House, London.

- Marschark, M., & Spencer, P.E. (Eds.) (2011). The Oxford Handbook of Deaf Studies, Language and Education (Vol. 1). Oxford: Oxford University Press.

- McNeill, D. (1992). Hand and Mind. Chicago: The University of Chicago Press Ltd.

- McNeill, D. (Ed.) (2000). Language and Gesture. Cambridge: Cambridge University Press.

- McNeill, D. (2005). Gesture and Thought. Chicago: The University of Chicago Press Ltd.

- Minors, H. (2012). Music and movement in dialogue: exploring gesture in soundpainting. Les Cahiers de la Societe quebecoise de recherche en musique, Vol. 13, Nos. 1-2, pp. 87-96.

- Morina, B. (2000). Mime in Ballet. Eastleigh: Green Tree Press.

- Nafisi, J. (2010). Gesture as a tool of communication in the teaching of singing. Australian Journal of Music Education, Vol. 2, pp. 103-116.

- Nobe, S. (2000). Where do most spontaneous representational gestures actually occur with respect to speech? In: D. McNeill (Ed.), Language and Gesture. Cambridge: Cambridge University Press, pp. 186-198.

- Seddon, F., & Biasutti, M. (2009). A comparison of modes of communication between members of a string quartet and a jazz sextet. Psychology of Music, Vol. 37, No. 4, pp. 395-415.

- Skipper, J.I., Goldin-Meadow, S., Nusbaum, H.C., & Small, S.L. (2007). Speech-associated gestures, Broca's area, and the human mirror system. Brain and Language, Vol. 101, No. 3, pp. 260-277.

- Sowa, T., & Wachsmuth, I. (2002). Interpretation of shape-related iconic gestures in virtual environments. In: I. Wachsmuth & T. Sowa (Eds.), Gesture and Sign Language in Human-Computer Interaction. Berlin Heidelberg: Springer, pp. 21-33.

- Spilka, M.J., Steele, C.J., & Penhune, V.B. (2010). Gesture imitation in musicians and non-musicians. Experimental Brain Research, Vol. 204, No. 4, pp. 549-558.

- Sterelny, K. (2012). Language, gesture, skill: the co-evolutionary foundations of language. Philosophical Transactions of the Royal Society B: Biological Sciences, Vol. 367, No. 1599, pp. 2141-2151.

- Stokoe, W. (2000). Gesture to sign (language). In: D. McNeill (Ed.), Language and Gesture. Cambridge: Cambridge University Press, pp. 388-399.

- Tattersal, I. (1999). Becoming Human: Evolution and Human Uniqueness. New York: Harcourt, Brace and Co.

- Walker, R. (1987). The effects of culture, environment, age and musical training on choices of visual metaphors for sound. Perception & Psychophysics, Vol. 42, No. 5, pp. 491-502.

- Wanderley, M. (2002). Quantitative analysis of non-obvious performer gestures. In: I. Wachsmuth & T. Sowa (Eds.), Gesture and Sign Language in Human-Computer Interaction. Berlin Heidelberg: Springer, pp. 241-253.

- Wanderley, M., & Vines, B. (2006). Origins and functions of clarinettists' ancillary gestures. In: A. Gritten & E. King (Eds.), Music and Gesture. Farnham, UK: Ashgate, pp. 165-191.

- Willier, A., & Marque, C. (2002). Juggling gestures analysis for music control. In: I. Wachsmuth & T. Sowa (Eds.), Gesture and Sign Language in Human-Computer Interaction. Berlin Heidelberg: Springer, pp. 296-306.

- Wöllner, C. (2012). Self-recognition of highly skilled actions: A study of orchestral conductors. Consciousness and Cognition, Vol. 21, pp. 1311-1321.