Introduction

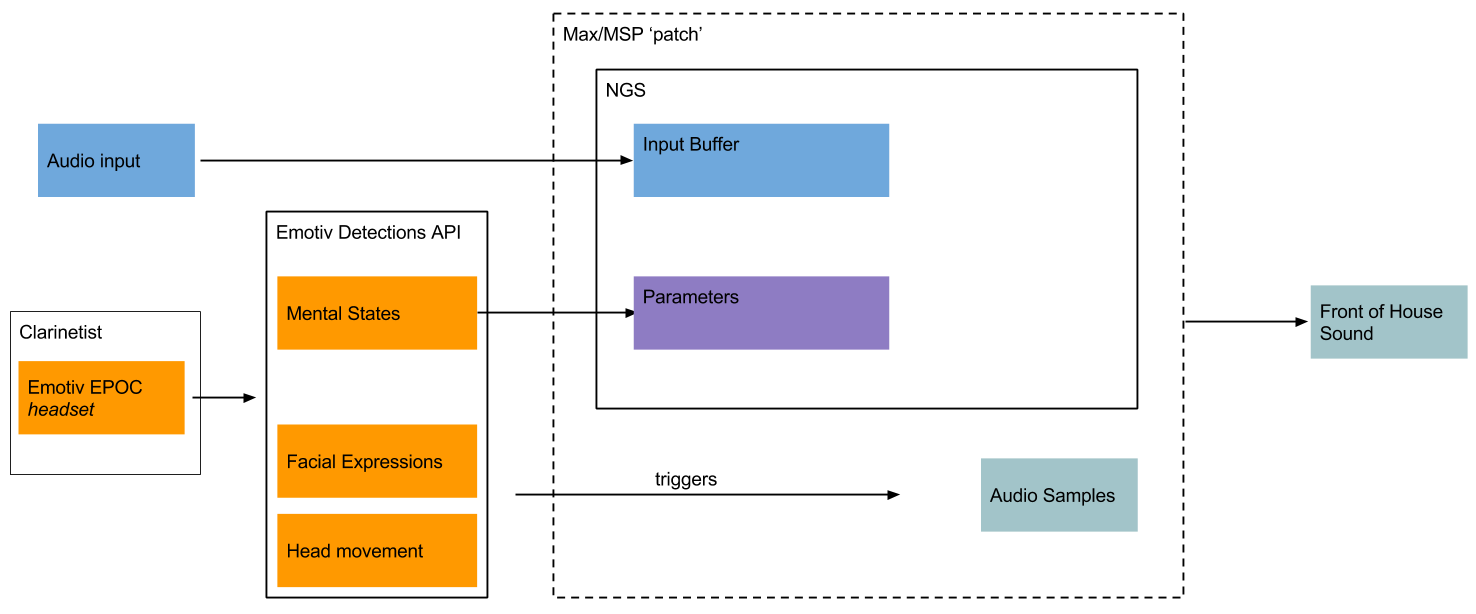

Clasp Together (beta) (Whalley, 2012a, 2012b) derives its title from the etymology of the word "synapse", from the Greek sunapsis (σύναψις), a compound of συν "together" and ἁψίς "joining" (Merriam-Webster.com, 2014). The underlying concept of the piece revolves around the interaction and relationship between a human performer in an ensemble setting and a computer-based artificial neural network, in this case the Neurogranular Sampler (NGS), developed by Eduardo Miranda and John Matthias (Miranda & Matthias, 2009). The artificial neural network sonifies its underlying processes by playing short audio fragments from a constantly updated buffer whenever a neuron in the model fires. The composition is scored for an ensemble comprising solo bass clarinet, violin, trombone, double bass and live electronics. The extremely weak electrical currents produced by synaptic firing in the bass clarinetist's brain are picked up by an EEG headset worn by the player and are subsequently transformed into sound through the sampler, effectively creating a fifth, virtual performer.

The integration of the NGS into the composition was used to explore the relationship between predetermined compositional material and its nondeterministic, granulated playback during performance. The piece demanded musical elements that sound subjectively pleasing when manipulated and reproduced through the aleatoric processes of the NGS, such as short melodic phrases and easily recognizable rhythmic patterns and textures. An extra layer of brain-mediated interaction provides control over the NGS's behavior and its sound output range, complexity and texture, allowing it to participate as an additional musical presence. It also extends the agency of the EEG wearer, who doubles as controller of both their acoustic instrument and the NGS layer.

To convey the performer's direct control of the NGS to an audience, dramaturgical techniques such as gesture and facial expression were employed. These create a further visual and spatial association between the performers and the computer transformations produced. At other moments in the piece, the musicians' clearly defined physical detachment from their instruments while not playing was used to show the audience where the sounds were coming from. Because the sound of the musicians and that of the NGS were combined in the front-of-house sound system, this dramaturgical technique was needed to make the origin of each sound apparent.

The first performance of Clasp Together (beta), in May 2012, formed part of the inaugural concert of the Inventor-Composer Coaction (ICC) 3, a research group based at the University of Edinburgh. The event aimed to foster a spirit of experimentation and included other HCI and biofeedback frameworks, such as the Xth Sense bio-sensor (Donnarumma, 2011) and the Alpha-Sphere haptic interface (Desine, 2011).

This study first considers the rationale and context behind the composition, then examines the specific use of technology in the piece, the relationships between composer, performer and audience, and how these relationships are challenged by the introduction of a new technological agent. Finally, it describes how these ideas were realized in performance.

Compositional Context

Clasp Together (beta) (Whalley, 2012) forms part of a larger body of work inspired by Douglas Hofstadter's Gödel, Escher, Bach (Hofstadter, 1979). It is one of a number of pieces by Whalley that reflect some of the book's core ideas: fractals, recursion, translation, mapping (isomorphisms), paradox, and their relation to consciousness through analogy to art, music and mathematics. Clasp Together (beta) represents a dialogue between two important areas in the investigation of cognition: computational cognitive neuroscience and the observation of the human biological system. It reflects these two streams through both the division between human and artificial neural networks (the EEG and the NGS, respectively) and the division between human and computer in a game of Go, from which the musical source material is derived.

The ancient board game Go combines deceptively simple rules with considerable strategic depth; chess master Emanuel Lasker is said to have described it as "so elegant, organic, and rigorously logical that if intelligent life forms exist elsewhere in the universe, they almost certainly play Go" (American Go Association, 2014). Like chess, Go is a combinatorial game without chance, which makes it relevant to the wider discussion of Hofstadter's approach to consciousness. Although Go programs are now able to challenge professional players, it is a matter of debate how soon they will become superior in play. Despite the simplicity of the rules, the number of possible games is vast, so a more nuanced approach than brute-force search is needed to improve computer play (owing to the exponential growth of move combinations and the NP-complete nature of the endgame 4). Hofstadter suggests that when a computer is truly more intelligent than a chess master, it will say "I'm bored with chess. Let's talk about poetry" (Hofstadter, 1979, p. 678). The same could be said of Go.

During the composition of Clasp Together (beta), a particular game of Go 5 that ended in a half-point win (the narrowest possible margin, since a half-point komi rules out a draw) served as the starting point for exploring the exchange between human and machine. The game produced two 'streams' of source material for the composition: first, an algorithmic stream and, second, an 'intuitive' one. Clasp Together (beta) is derived from the latter, which was generated as a piano improvisation while watching a video of the game. The algorithmic and intuitive streams finally meet in the composition Entangled Music, the confluence of Whalley's compositional research on Gödel, Escher, Bach (Whalley, 2014).

Emergent Strange Loops

The fractal nature of mind, operating at different levels, is a central concern in Hofstadter's approach to the problem of consciousness. Moreover, it is the structure of the "tangled hierarchy", or "strange loop", that he considers to underpin the emergence of the perceived self. As Hofstadter (2006, p. 515) notes:

… the 'I' is an illusion, but it is such a strong and beguiling and indispensable illusion in our lives that it will not ever go away. And so we must learn to live in peace with this illusion, gently reminding ourselves occasionally, 'I am a strange loop.'

Operation at different levels is also apparent in the workings of neural networks, both biological and simulated, from the firing of individual neurons to the networks of connections at both micro and macro scales. At the highest level lies abstract thought, which seems so far removed from the underlying "wetware" that the gap between them is known as the "mind-body problem". Nicholas Humphrey discusses its intricacies in A History of the Mind (Humphrey, 1992, p. 29):

In one sense brains are unquestionably physical objects, which can be described reductively in terms of their material parts. But that is surely not the only way of representing them, nor is it necessarily the most revealing way.

This echoes Ray Jackendoff's concerns with reductionism in Consciousness and the Computational Mind (1987, p. 18), in which he states:

I find it every bit as incoherent to speak of conscious experience as a flow of information as to speak of it as a collection of neural firings.

The difference between what we subjectively feel to be the nature of consciousness (having an "I") and the physical nature of the hardware on which it runs is one of many ontological dichotomies. Others include "determinism" vs. "indeterminism" and "abstract" vs. "concrete" objects, and these too are highlighted in Clasp Together (beta) through the juxtaposition of human performer and computer.

Musical structures are frequently deeply hierarchical, functioning on multiple simultaneous levels: note against note, phrase against phrase, section against section and movement against movement. The listener constantly compares these levels against one another, both consciously and unconsciously. Human brains are well adapted to pick up on such structures, even when they are very dense and complicated. Our ability to distinguish subtle sonic differences and to spot recursive structures in sound has been explored in various ways; for example, at MIT, Markus Buehler (Buehler, 2014) reports on the sonification of protein strands to help scientists understand how such structures are coherently interlinked.

The Neurogranular Sampler

Developed by John Matthias, the Neurogranular Sampler (NGS) uses a spiking neural network model originally developed by Eugene Izhikevich (2003), in which each neuron firing causes a sample from a delayed audio buffer to be played (Miranda & Matthias, 2009). The instrument produces a cloud-like cluster of 'sound grains' whose intensity varies with the activity in the network. The authors describe these processes as follows (ICC, 2012):

The level of synchronization in distributed systems is often controlled by the strength of interaction between the individual elements. If the elements are neurons in small brain circuits, the characteristic event is the 'firing time' of a particular neuron. The synchrony or decoupling of these characteristic events is controlled by modifications in the strength of the connections between neuron[s] under the influence of spike timing dependent plasticity, which adapts the strengths of neuronal connections according to the relative firing times of connected neurons.
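As a rough illustration of the firing-to-grain mechanism described above, the following Python sketch simulates a small network of Izhikevich (2003) "regular spiking" neurons and turns every firing event into a grain onset. It is not the NGS's code: the network here is purely excitatory with fixed random weights (Izhikevich's reference model also includes inhibitory neurons), and the function names, the buffer length and the rule that spreads each neuron's read position across the buffer are hypothetical.

```python
import random

# Izhikevich (2003) "regular spiking" parameters.
A, B, C, D = 0.02, 0.2, -65.0, 8.0


def simulate(n_neurons=50, duration_ms=500, thalamic_sd=5.0, max_weight=0.5, seed=1):
    """Simulate a small excitatory Izhikevich network; return (time_ms, neuron) firing events."""
    rng = random.Random(seed)
    v = [C] * n_neurons          # membrane potential (mV)
    u = [B * C] * n_neurons      # membrane recovery variable
    # Fixed random excitatory weights (no plasticity in this sketch).
    w = [[max_weight * rng.random() for _ in range(n_neurons)] for _ in range(n_neurons)]
    events = []
    for t in range(duration_ms):
        fired = [i for i in range(n_neurons) if v[i] >= 30.0]
        for i in fired:
            events.append((t, i))
            v[i], u[i] = C, u[i] + D   # after-spike reset
        # Noisy "thalamic" drive plus input from the neurons that just fired.
        current = [thalamic_sd * rng.gauss(0.0, 1.0) for _ in range(n_neurons)]
        for j in fired:
            for i in range(n_neurons):
                current[i] += w[i][j]
        for i in range(n_neurons):
            for _ in range(2):  # two 0.5 ms steps for v, as in Izhikevich's reference code
                v[i] += 0.5 * (0.04 * v[i] ** 2 + 5.0 * v[i] + 140.0 - u[i] + current[i])
            u[i] += A * (B * v[i] - u[i])
    return events


def grains_from_events(events, n_neurons, buffer_ms=2000, grain_ms=50):
    """Hypothetical mapping: each spike plays one grain from the delayed buffer.

    Returns (onset_ms, read_offset_ms, length_ms) tuples. Spreading read offsets
    across the buffer by neuron index is an illustrative assumption only.
    """
    return [(t, (i * buffer_ms) // n_neurons, grain_ms) for t, i in events]
```

With `thalamic_sd=0.0` the neurons settle at rest and no grains are produced; raising it tends to thicken the grain cloud, which is one way to picture the "thalamic input" parameter discussed below.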

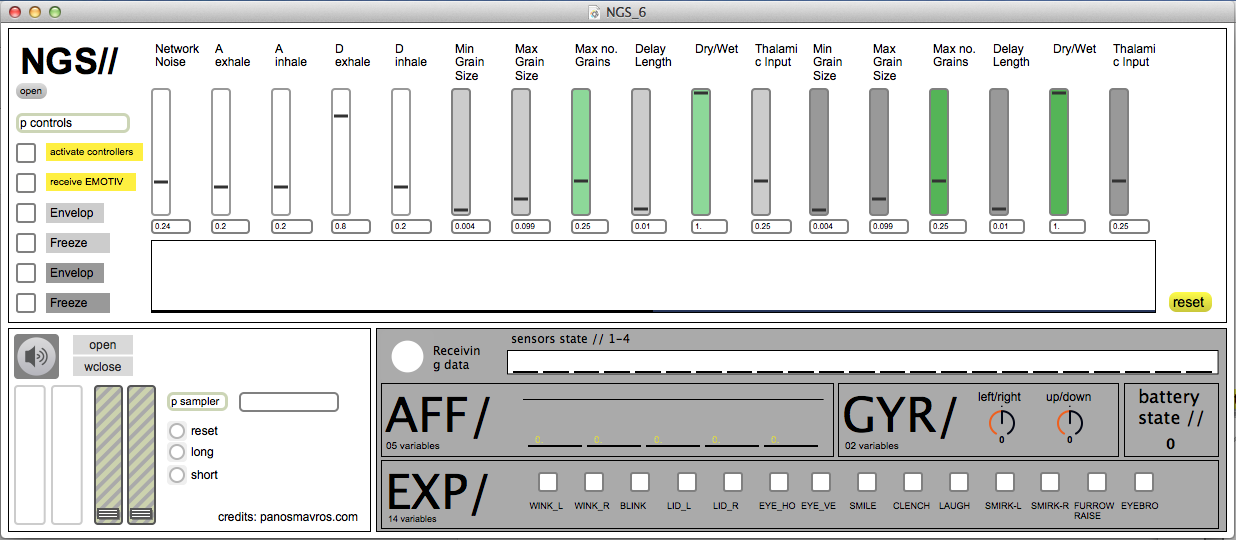
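The spike-timing-dependent plasticity named in the quotation is commonly modeled with a pair-based exponential rule: a connection is strengthened when the presynaptic neuron fires shortly before the postsynaptic one, and weakened when the order is reversed. The sketch below assumes this standard form with illustrative constants (amplitudes 0.10 and 0.12, time constants of 20 ms, weights clipped to the range 0 to 10); the NGS's actual rule and values may differ.

```python
import math

A_PLUS, A_MINUS = 0.10, 0.12      # illustrative potentiation/depression amplitudes (assumption)
TAU_PLUS, TAU_MINUS = 20.0, 20.0  # illustrative time constants in ms (assumption)
W_MAX = 10.0                      # illustrative upper bound on a weight (assumption)


def stdp_delta(t_pre, t_post):
    """Weight change for one pre/post spike pair, with dt = t_post - t_pre in ms."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: potentiation, decaying with the gap
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:   # post fired before pre: depression
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0   # simultaneous spikes: no change in this sketch


def update_weight(w, t_pre, t_post):
    """Apply one STDP step and keep the weight within [0, W_MAX]."""
    return min(W_MAX, max(0.0, w + stdp_delta(t_pre, t_post)))
```

Because depression is set slightly stronger than potentiation, uncorrelated firing tends to weaken connections while consistently ordered firing strengthens them, which is how relative timing can push a network towards or away from synchrony.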

Various parameters of the NGS can be set, including network activity and size (1-1000 neurons), synapses per neuron, thalamic input and maximal axonal delay, as well as grain length. Previous performances and installations using the NGS, such as Cortical Songs (Nonclassical.greedbag.com, n.d.) and Plasticity (Plasticity installation, 2014), show how wide and varied the results can be even when these settings are kept static. Indeed, the NGS was designed to be used with static parameters:

These parameters are not intended for frequent modulation when the piece is running; rather, they are intended as a way to fine-tune the initial parameters of a generative system, ensuring that the density of output is not too low or too great. The subsequent operation should primarily be governed by the internal neural plasticity. (Jones et al., 2009, p. 300).
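A minimal sketch, assuming hypothetical field names, of how the parameters listed above might be held as a fixed configuration and used to wire a random network with axonal delays. Freezing the dataclass mirrors the design intention quoted above: the values are set before the piece runs, and any later change in behavior comes from plasticity rather than from editing the settings.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class NGSSettings:
    """Hypothetical container for the NGS parameters named in the text."""
    n_neurons: int = 100            # network size (1-1000 neurons)
    synapses_per_neuron: int = 10   # outgoing connections per neuron
    thalamic_input: float = 5.0     # scale of the random input current
    max_axonal_delay_ms: int = 20   # conduction delays drawn from 1..max
    grain_length_ms: int = 50       # length of each played grain

    def __post_init__(self):
        if not 1 <= self.n_neurons <= 1000:
            raise ValueError("network size must be between 1 and 1000 neurons")
        if not 0 <= self.synapses_per_neuron < self.n_neurons:
            raise ValueError("synapses_per_neuron must be smaller than the network size")
        if self.max_axonal_delay_ms < 1:
            raise ValueError("max_axonal_delay_ms must be at least 1")


def build_connectivity(settings, seed=0):
    """Return {pre: [(post, delay_ms), ...]} with random targets and random axonal delays."""
    rng = random.Random(seed)
    connections = {}
    for pre in range(settings.n_neurons):
        candidates = [n for n in range(settings.n_neurons) if n != pre]  # no self-connections
        targets = rng.sample(candidates, settings.synapses_per_neuron)
        connections[pre] = [
            (post, rng.randint(1, settings.max_axonal_delay_ms)) for post in targets
        ]
    return connections
```

For example, `build_connectivity(NGSSettings(n_neurons=50, synapses_per_neuron=5))` yields 50 neurons with five delayed outgoing connections each; trying to assign to a field afterwards raises an error, which enforces the "set once, then leave alone" usage.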

Conceptually, the compositional context of Clasp Together (beta) differs from these previous examples in one important respect: the NGS should be directly subordinate to the agency of the performer, not only through the sound input but also through a control device. There is a strong conceptual link between the chosen control device (EEG) and the design intentions behind the NGS, namely making the usually hidden workings of neural networks explicit for artistic repurposing.

A Framework for Interaction

A biofeedback framework was developed to control the parameters of the NGS. Biofeedback can be used to introduce additional input to, or control of, a system; in a sense, it augments the number of ways in which a user can interact with the environment, as various bodily phenomena are quantified and used as input. These inputs include cardiac and respiratory rate, galvanic skin response, and muscle and certain brain activity, among other physiological responses. Of these options, neurophysiological data gathered through EEG was chosen primarily because of the conceptual links to the NGS discussed above, but also because it leaves the performer's hands free while adding a dramaturgical element to the performance (especially the donning of the device). Using the Emotiv EPOC™ headset also made it possible to integrate the detection of various facial and whole-head movements into this framework.

EEG

EEG is a method of detecting brain activity that dates back to 1929 and the work of Hans Berger (Swartz, 1998). The electrical activity of millions of firing synapses produces a faint current that can be detected by electrodes positioned on the scalp 6. EEG has been used as a tool in both clinical and academic fields to understand the localization of brain activity relating to different tasks or to abnormalities. While it is primarily used to study brain function and anomalies, over the last few decades EEG has been at the center of scientific research on brain-computer interfaces (BCI), which aim to establish "a communication system in which messages or commands that an individual sends to the external world do not pass through the brain's normal output pathways of peripheral nerves and muscles" (Wolpaw, Birbaumer, McFarland, Pfurtscheller & Vaughan, 2002). A surge in the computing power of personal devices, together with advanced statistical techniques (such as machine learning), led to the commercialization of such equipment in the mid-2000s. The Emotiv EPOC™, released in 2009, is one of a series of low-cost headsets aimed at users outside the clinical sector. Emotiv's neuroheadset has been used in several innovative biofeedback art projects and musical performances, such as Neuro-Knitting (Guljajeva, Canet & Mealla, 2013) and Brain Music (Paile, 2011).

The advantages of the Emotiv EPOC™ are that it is affordable, portable, wireless and relatively easy to set up compared with the intricate wiring of a traditional EEG. Its ease of connection and placement made it possible to explore the performative aspects of wearing such a device, as explained further below. Although less accurate than its expensive high-end EEG cousins, the EPOC™ has been used successfully in research, which lends some support to the validity of its output 7. A comprehensive review of current uses of brain-computer interfaces in performance art (Zioga, Chapman, Ma, & Pollick, 2014) further situates Clasp Together (beta) within a wider canon of performance work in this area.

Fig. 1. The Emotiv EPOC™ 'neuroheadset' during rehearsal. (photograph, Panos Mavros)

The EPOC™ includes a set of software tools that analyze and decompose the EEG signal in real time, detecting facial expressions, mental states and (after "training") several cognitive patterns. The emotion-detection algorithm, named "Affectiv Suite" [sic], classifies brain activity into five mental-emotional states: "engagement", "instantaneous excitement", "long term excitement", "frustration" and "meditation". Emotiv™ chose these generalized categories to describe amalgamations of brain activity patterns; the appropriateness of these labels is debatable, but lies outside the scope of this paper. To compensate for variability in this activity between individuals, a necessary step for commercial, generic software, the system creates a profile for each user and normalizes the output accordingly.
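Emotiv does not document how its per-user profiles work, so the following is only a hypothetical sketch of the general idea: record a user's raw readings during calibration, then express live readings relative to that user's own mean and spread, so that the same raw value can mean different things for different people. The class name and the mapping of plus or minus two standard deviations onto the range 0 to 1 are assumptions.

```python
from statistics import mean, pstdev


class UserProfile:
    """Hypothetical per-user normalizer (not Emotiv's unpublished method)."""

    def __init__(self, calibration):
        # calibration: {state_name: [raw readings recorded from this user]}
        # A zero spread (constant readings) falls back to 1.0 to avoid division by zero.
        self.stats = {name: (mean(vals), pstdev(vals) or 1.0)
                      for name, vals in calibration.items()}

    def normalize(self, state, raw):
        """Map a raw reading to 0..1 relative to this user's calibration data."""
        mu, sigma = self.stats[state]
        z = (raw - mu) / sigma
        # Roughly +/-2 standard deviations span the output range; clamp the rest.
        return min(1.0, max(0.0, 0.5 + z / 4.0))
```

A user whose calibration readings sit low will see a given raw value reported as comparatively high, and vice versa, which is the kind of compensation the text describes.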

Fig. 2. The interface of the Max/MSP patch, showing the parameters of the NGS interface with both the Expressiv Suite and the NGS.

The Expressiv Suite is able to recognize a range of facial expressions, such as raising and furrowing the eyebrows and smiling. The electrical signal recorded at the scalp contains multiple components: activity from the cortex (EEG, measured in microvolts), activity from muscles (electromyography, or EMG, measured in millivolts), and electrical noise produced by movement artifacts and other sources. Because muscle signals are approximately a thousand times stronger than EEG signals, the components can be classified according to their source, and the muscle component forms the basis of a facial-expression detection algorithm. The headset also integrates a two-axis gyroscope, which allows the detection of head movements (e.g. turning, tilting and nodding).
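The amplitude difference described above is large enough that even a crude rule can separate the two sources. The sketch below, which is a simplification and not Emotiv's algorithm, labels fixed windows of a scalp signal (in microvolts) as muscle activity when their root-mean-square amplitude exceeds an assumed threshold of 200 µV, and as neural activity otherwise. Practical systems also draw on electrode location and frequency content.

```python
import math

EMG_THRESHOLD_UV = 200.0  # assumed boundary between EEG-scale and EMG-scale amplitudes


def rms(window):
    """Root-mean-square amplitude of a window of samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def classify_windows(samples_uv, window_size=32):
    """Label each complete, non-overlapping window as 'muscle' or 'neural'.

    Samples left over after the last complete window are ignored.
    """
    labels = []
    for start in range(0, len(samples_uv) - window_size + 1, window_size):
        window = samples_uv[start:start + window_size]
        labels.append("muscle" if rms(window) > EMG_THRESHOLD_UV else "neural")
    return labels
```

A stretch of 20 µV oscillation followed by a 1000 µV burst, for instance, is labeled neural for the quiet windows and muscle for the burst, roughly analogous to detecting a furrowed brow against background EEG.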

The capacity of this system to provide these four additional streams of data (mental states, cognitive patterns, facial muscle activity and head movement) makes it possible to incorporate the EEG device into the performance in multiple ways and to augment the musician's performative instruments. In a traditional musical performance, musicians perform through their instruments; in this framework, the musician controls both the physical instrument and a digital instrument through a seamless interface.

| Input | Mediated by | Controls |
|---|---|---|
| Mental State | Excitement | Thalamic Input |
| | Frustration | Delay Length |
| | Long Term Excitement | Network Noise |
| | Engagement | N/A |
| | Meditation | Minimum Grain Size |
| | Meditation (inverse) | Maximum Grain Size |
| Facial Expression | Eyebrows | Trombone* |
| | Furrow | Violin* |
| Head gestures | Head nod (vertical) | Double Bass* |
| | Head Left/Right | Bass Clarinet* |

\* Pre-recorded "Motif Samples"
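The mappings in the table can be expressed as a small lookup. In the sketch below, each affective score (assumed to be normalized to the range 0 to 1) is scaled linearly into a parameter range, with the "inverse" meditation mapping reversing the scale, and each detected gesture selects a pre-recorded motif sample. The parameter ranges, key names and file names are hypothetical, since the text does not give them; engagement is left unmapped, as in the table.

```python
# (affective state, NGS parameter, low, high, invert); ranges are hypothetical.
STATE_MAPPINGS = [
    ("excitement", "thalamic_input", 0.0, 10.0, False),
    ("frustration", "delay_length_ms", 1.0, 20.0, False),
    ("long_term_excitement", "network_noise", 0.0, 1.0, False),
    ("meditation", "min_grain_ms", 10.0, 100.0, False),
    ("meditation", "max_grain_ms", 100.0, 500.0, True),  # "Meditation (inverse)"
]

# Gesture -> pre-recorded motif sample (file names are hypothetical).
GESTURE_SAMPLES = {
    "eyebrows": "trombone_motif.wav",
    "furrow": "violin_motif.wav",
    "head_nod": "double_bass_motif.wav",
    "head_left_right": "bass_clarinet_motif.wav",
}


def map_states(states):
    """Scale 0..1 affective scores into NGS parameter values."""
    params = {}
    for state, param, low, high, invert in STATE_MAPPINGS:
        x = min(1.0, max(0.0, states.get(state, 0.0)))  # missing states count as 0
        if invert:
            x = 1.0 - x
        params[param] = low + x * (high - low)
    return params


def samples_for(gestures):
    """Return the motif samples triggered by the detected gestures, ignoring unmapped ones."""
    return [GESTURE_SAMPLES[g] for g in gestures if g in GESTURE_SAMPLES]
```

Under these assumed ranges, a fully "meditative" reading narrows the grain-size range to a single value (100 ms), while low meditation widens it to 10-500 ms.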

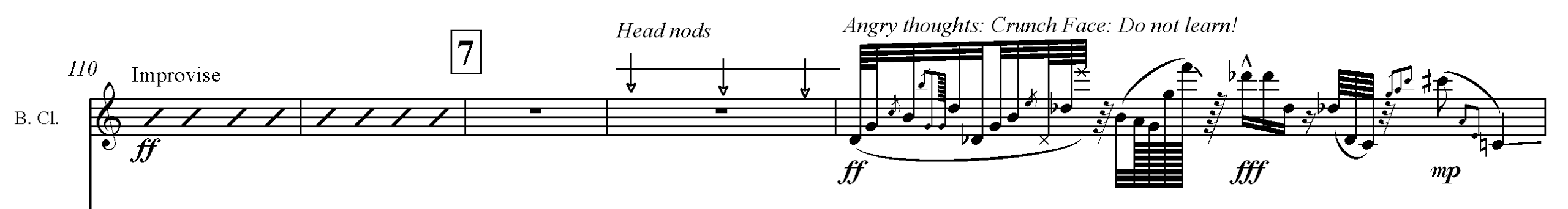

Task Switching

One unusual aspect of the composition is that its complexity, and the instructions to the musician, were manipulated to increase or reduce the performer's cognitive load and to alter their mental state, in order to produce the desired outcomes from the NGS interface. Owing to the practice-centered nature of this project, no empirical data were gathered at this stage; however, early observations made while experimenting with the technical rig, the bass clarinet soloist and the EEG device informed the evolution of the setup, the digital interface and the composition. In this process it became apparent that, because of the musician's formal training, some modes of playing called for in the piece were considerably less demanding at a cognitive level than the composer had anticipated. In addition to standard notation, for example, the score contains sections of free improvisation, playing from memory, interpretation of graphic notation, non-instrumental gestures, and instructions regarding the player's thoughts. We observed that while the performer remained within any one of these modes, the various signals from the EPOC™ headset stabilized close to a baseline, suggesting that the mental states remained fairly consistent during a given part of the composition. This was in stark contrast to the increase in overall brain activity when the player was required to change rapidly from one task to another, an observation consistent with previous research on rapidly alternating between tasks, known as "task switching" (Wylie & Allport, 2000; Monsell, 2003). Before these observations, the composer had assumed that the demands of each individual task would be sufficient to "drive" the NGS through the EPOC™ headset.
As a result, the composition was adapted to increase the rate of change between these modes of playing, using task switching to produce more consistent and pronounced spikes in brain activity, and consequently in the sonic output of the NGS algorithm.
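No empirical data were gathered, but the contrast described above (signals resting near a baseline within a mode, and spiking at task changes) suggests how such a check might be made in future. This hypothetical sketch flags samples of a single affective score that depart from the mean of the preceding window by more than k standard deviations; the window length, k and the small floor on the standard deviation are assumptions.

```python
from statistics import mean, pstdev


def detect_spikes(signal, window=20, k=3.0, min_sd=1e-6):
    """Return indices where a sample departs from the preceding window's baseline.

    The baseline is the mean of the previous `window` samples; a sample counts as
    a spike when it lies more than k standard deviations from that mean. `min_sd`
    keeps a perfectly flat baseline from making every tiny change a spike.
    """
    spikes = []
    for i in range(window, len(signal)):
        baseline = signal[i - window:i]
        mu, sd = mean(baseline), pstdev(baseline)
        if abs(signal[i] - mu) > k * max(sd, min_sd):
            spikes.append(i)
    return spikes
```

Applied to a score sampled through a performance, spikes clustering at the bar numbers where the score changes mode would be consistent with the task-switching account; spikes spread evenly across stable sections would not.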

Composition

The piece is composed in the Western art music tradition, with clear references to jazz, extended techniques and free improvisation. The dramaturgical progression of the piece falls into six distinct sections, each of which also reflects some of the intentions behind the use of the technology employed:

- The donning of the headset by the bass clarinetist on stage before the performance: an introduction to this aspect of the technology.

- NGS signal-processing playback only: the audience understands that the live electronics are working, that the NGS is the source of the sound cluster, and that these sounds are causally linked to the live ensemble material.

- The ensemble play each of the "motifs": the audience hears which sounds will be triggered through gesture alone.

- The wearer of the headset goes through each gesture in turn. This triggers the "motif samples" and the audience learns which movement triggers which sound.

- The composition gradually increases in density, the ensemble continuing to produce more sounds for the NGS to sample: the strain on the bass clarinet soloist is seen to increase.

- The soloist is tasked to reduce the input into the NGS by entering a state of calm: the audience witnesses the EEG control as a kind of battle with the (now rather invasive) NGS; one in which the human performer is ultimately victorious as the piece fades to silence.

To aid the coherence of a piece of music over time, composers often employ formal structures such as rondo, binary or sonata form. During the pre-compositional phase, a composer may gather materials including sketches of motives, textural ideas and broader structural ideas. In the case of Clasp Together (beta), these materials were drawn from several sources. First, the motivic fragments (b.1, bb.58-59, bb.81-85) were direct quotations from the composer's piano improvisation to a stop-motion animation of the game of Go, as detailed earlier. These motivic ideas were then developed iteratively following the experimental sessions with the bass clarinetist. The development of the composition was therefore non-linear, with details added and removed in order to find a balance between the acoustic rendition of the score, its subsequent granulation by the NGS and the mental activity of the lead performer. Within this context, the increased frequency of task switching for the clarinetist throughout the score became a constraint through which the content was shaped into a cohesive form. Although the form of Clasp Together (beta) is difficult to determine precisely, the fast task switching suggests a type of moment form shaped to build over time. This textural crescendo plays a significant dramatic role as it reaches a climax (b.141), from which the NGS is able to solo in a quasi-cadenza.

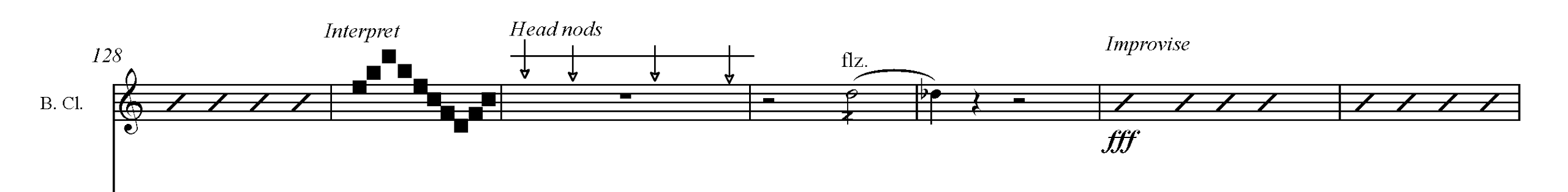

Systems of notation have evolved and adapted to the specifics and modalities of their application. Musical scores contain enough information about a composition to enable its repetition and recreation, indicating notes, rhythm, tempo and some expression, while leaving enough 'vital' space for the performer to exercise interpretation. Stylistic conventions often dictate how much or how little information is made explicit in the score (for example, the use of dynamics in Baroque music, or improvisatory elements in jazz ensembles). Composing for new media and technologies creates additional challenges, such as how novel modes of interaction with experimental interfaces may be encoded. The score of Clasp Together (beta) uses a combination of standard and graphical notation, with arrows and text instructing the bass clarinetist to make various gestures, both while playing and when at rest. Some improvised sections are guided by graphical means, while others are left to the discretion of the musician, indicated by the use of slash marks. For the most part, this performer-generated music is "fierce" (b.7) and "free" (b.118), set in relief against the relatively contained writing within the ensemble.

The question of mental preparation in musical performance has been considered and documented less widely than more pragmatic matters of technique and the physical stamina required in certain situations. For example, a composer will commonly consider how long it might take a performer to change instruments, when they might run out of breath or how large their hand span is, but not the mental strain of completing a task outside the normal mode of performance on their instrument. Clasp Together (beta) forms part of a growing canon of compositions that require performers to interact with their instruments, and to interface with new technology, in fundamentally different ways. Issues of performance anxiety and learning strategies for sub-skills such as sight-reading and improvisation have been addressed, but there may still be some way to go in communicating this work to composers. Additionally, new musical interfaces create fresh challenges for composers and performers, such as recombining some of these previously learnt sub-skills and acquiring new ones (McNutt, 2003). Even performers currently graduating from conservatories and universities who have specialized in contemporary music cannot be expected to be well versed in using live electronics (Berweck, 2012).

In the case of Clasp Together (beta), the usual pre-performance routine of the performer is interrupted in two important ways. Firstly, working with live electronics generates technical complexities which do not exist in purely acoustic chamber music. These extra factors, such as microphone setup, computer operation and control devices, may disrupt a performer's well-established routine (ibid.). Unless the musician is able to integrate the electronics into their preparation by owning the equipment, this represents an added strain. Secondly, although the electronics in Clasp Together (beta) are controlled through a hands-free setup, the use of EEG introduces a new and unfamiliar method of cognitive control, which must be practiced for an effective interpretation of the score.

Performance

The opportunity to perform Clasp Together (beta) arose from an invitation to participate in the Inventor-Composer Coaction (ICC), a collaboration between composers, inventors and musicians in the spirit of experimentation. Preparing for the performance required considerable input from the ensemble's bass clarinetist, in this case Pete Furniss of Red Note Ensemble. In addition to adequate personal practice time and rehearsal, one or two sessions were set aside to calibrate the EEG software to the wearer, maximizing the stability of the triggering system. As a consumer device, the EPOC™ is designed to work adequately for its intended use as a computer game controller with little or no calibration; however, the included software improves over time as it calibrates to the individual user. These extra sessions also allowed the performer to become familiar with the fit and feel of the apparatus and to try out various gestural and mental signals in an experimental environment with the composer and technical personnel. During these preparatory meetings, the performer gained an understanding of the parameters of the Expressiv Suite. Defining the gestures and mental states was aided by visualization on the computer screen and by verbal feedback, neither of which is available to the performer in full rehearsal with the ensemble or in performance. Between such sessions, the performer needs to prepare for the mental process of performing the piece, which requires agility of concentration, rapid task switching, and exploration of the improvising styles demanded by different sections of the piece.

The bass clarinet part of Clasp Together (beta) is unusual in that it contains a number of unconventional text instructions to the soloist relating to the mental states used to drive the NGS through the EEG. These variously require the musician to "think about something unrelated and play from memory" (bb.20-32), to "become still" (b.80), and to think "angry" (b.114) or "calm" (b.80) thoughts while executing the piece. The work contains one particularly unusual musical instruction: in bb.114-116, the composer has written a deliberately dense and over-complex passage marked "Do not learn" (see Figure 4). This is an attempt to induce a sense of urgent and stressful sight-reading (or at least unfamiliarity), with the intention of producing a corresponding neural response detectable by the EEG. In fact, in the first performance, Furniss had been so taxed by this passage in rehearsal that what he played (shown in the video at 4:40) barely resembles what is written on the page, and the improvisation continues with little regard to the score. It is interesting to consider that, over repeated performances, the performer may become so adept at this task switching and "mental gymnastics" that the EEG signal level required to drive the NGS is no longer produced. If this were the case, the composer would have to rewrite the composition between performances.

Ethical considerations aside, asking highly trained professional musicians to under-prepare and potentially struggle on the concert platform (in this case without the audience being aware of the point of the exercise) may prove somewhat ill-advised; their sense of self-protection may, as here, lead to a reluctance to follow the letter of the score 8. There may be a case for revising the complexity of this passage (perhaps even rewriting it on each occasion) for future renderings, allowing for a more concerted realization to be attempted in the flow of performance.

Fig. 4. An example of the rapidly changing requests made of the soloist, including free improvisation (b.229), head nods (b.232), 'unplayable' notation (b.233), interpretive graphic notation (b.128), and extended technique (b.132).

Combining the reading of a part learnt and rehearsed from a notated score with sections of free improvisation requires a rapid adaptation in mental approach on the part of the performer. In addition to the notational elements mentioned above, the soloist's improvisation in Clasp Together (beta), with its contrast to the written score, its wild gestures and its extremes of dynamic and pitch range, obliges the musician to switch at short notice between an openly creative, divergent thinking state and a more considered ensemble focus.

The composer describes his intention (in a personal email from Whalley to Furniss) of keeping the brain "super active to feed the NGS", which can be taxing at first; from a performer's perspective, alternating between such tasks intrudes on the flow of concentration. Combined with the responsibility of gesturally controlling the electronic triggers, this creates a relatively stressful environment for a musician, who will on the whole be more comfortable remaining within one area of focus, for example ensemble synchronization, improvisation, movement-based cueing or sight-reading. Rapid, repeated alternation between relatively short cognitive, mental and creative states produces a degree of discomfort and confusion, which may result in erratic performance and a disconnection from the other members of the ensemble and the conductor (if used) 9. This experience is consistent with the reaction-time costs described by Wylie and Allport (2000) as "switch costs". These difficulties may lessen with further performances, but it has not yet been possible to assess this.
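In the task-switching literature, the switch cost is conventionally computed as the mean reaction time on trials where the task changes minus the mean reaction time on trials where it repeats. The sketch below computes it for a sequence of (task, reaction time) pairs; the function name, task labels and numbers are invented for illustration and do not come from the performance.

```python
from statistics import mean


def switch_cost(trials):
    """Mean RT on switch trials minus mean RT on repeat trials.

    trials: sequence of (task_label, reaction_time_ms). The first trial has no
    predecessor, so it is classified as neither a switch nor a repeat.
    """
    switch_rts, repeat_rts = [], []
    for (prev_task, _), (task, rt) in zip(trials, trials[1:]):
        (switch_rts if task != prev_task else repeat_rts).append(rt)
    if not switch_rts or not repeat_rts:
        raise ValueError("need at least one switch trial and one repeat trial")
    return mean(switch_rts) - mean(repeat_rts)
```

For the made-up sequence `[("read", 500), ("read", 480), ("improvise", 620), ("improvise", 500), ("read", 640)]`, the switch trials average 630 ms and the repeat trials 490 ms, giving a switch cost of 140 ms.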

A certain amount of theatricality was employed during performance of the piece, in order to communicate the various facial expressions and thought processes clearly to both fellow performers and audience. Lateral and vertical head movements, along with the furrowing and raising of the brow, were exaggerated beyond the threshold of triggering requirements. In addition to ensuring that gestures were reliably picked up by the software (akin perhaps to hitting a pedal or other device with more force than is strictly necessary), this exaggeration aimed to provide some visual affirmation of any perceived human-induced causal effect in the electronics. Early in the piece, where the bass clarinetist is instructed to "Demonstrate all movements" (b.44), the video recording (Whalley, 2012a) clearly shows a very deliberate demonstration of these gestural effects: nodding and shaking of the head, raising of the eyebrows and frowning (2:18-2:39).

Where neural signals were employed as triggers, it was also productive to externalize them to some extent, again with the intention of maximizing communication in performance. For example, in the final section (b.142), the bass clarinetist is required to move from a scattered, hectic state of mind to one of stillness over a period of approximately 30 seconds, with the processed electronic samples following a similar pattern. This can be observed in the video: at 5:49, the performer employs a rather frenzied, chaotic eye movement to embody this state, gradually moving towards a more overtly 'meditative' physicality, reinforced by a closing of the eyes and slightly exaggerated slow breathing (6:04).

The examples above offer some revealing insight into the perception of a causal link between the performer-controller and the resultant electronic transformations. In the first instance, where the physical movements may be surprising, laughter is heard in the audience. This may relate to the unexpected novelty of a gestural absurdity inserted into the contemporary concert performance paradigm, or perhaps to a sense of eccentricity in the musician's movements, which echo those of a spontaneous nervous tic or spasm, as in Tourette syndrome. However, there is also a sense that this laughter may indicate an appreciation of causality: a recognition of an invisible connection between the performer's mind and the sounds subsequently produced from the loudspeakers.

In the case of the ending, with each of the ensemble's instruments placed very deliberately at a distance from their playing positions, the audience is left in little doubt as to the mental efforts of the EEG wearer. In the first performance there was a moment after the final silence when Furniss was in some doubt as to whether the result had been achieved by the EEG or simulated with a fader (the pre-agreed 'plan B'), whereupon the electronic sounds abruptly reappeared. This provided an example of the kind of 'error' that can enhance a performance: in this case, the act of doubting produced a surge of neural activity which, picked up by the EEG, reinitiated the electronics, affirming the musician's ongoing control of the system. This was clearly perceived and duly noted in a review of the event in The Scotsman: "…watching Furniss close his eyes and relax his mind to take the piece to its quiet conclusion was unforgettable" (Kettle, 2012).

Discussion

We had decided from the beginning to use dramaturgy in the performance to emphasize and distinguish the use of the technology, for instance by fitting Furniss with the EEG headset during the performance. However, an unintended (though, given the nature of experimental performance, not wholly unanticipated) incident revealed some of the intricacies of the process. A few minutes into the performance, after the EEG headset had been fitted, the computer crashed. As the computer formed such an essential part of the composition, the performance was interrupted and, once the issues had been addressed, restarted. It is possible that the crash led to greater audience understanding of the process: having witnessed the system fail, the audience may have found its function more transparent when it succeeded. The aim of this experiment was to use technology not as novelty, gimmickry or a behind-the-scenes process, but as a means of enquiry. Indeed, this event helped to remove the idea (or perhaps the veil) of technology in music as a finished and conclusive process. Rather, it served to emphasize the exploratory dimension of such experimentation and its status as a work in progress, not only with regard to the underlying technology but to the process as a whole.

Using gestures to convey information about the compositional and performative process to the audience was a necessary dramaturgical technique in the context of the piece. It is possible that this technique can be expanded further, especially in relation to the concept of agency more generally. The extreme nature of the composer's demands on the performer here highlights complexities within their working relationship. Future performances may, as previously suggested, require additional layers of complexity within the score in order to maintain the high levels of concentration and agitation the soloist needs to drive the NGS. This creates an interesting form of correspondence-like feedback between the live electronics and the composer over time. Indeed, the triangular relationship between composer, technology and performer is a strong feature of this type of experimental research.

Conclusion

Music may often be used as a tool to facilitate further research in the fields of neuroscience and psychology, but this knowledge can be exchanged in both directions. For composers and performers alike, a basic appreciation of music cognition can lead to a further sharpening of the tools in the compositional and musical toolbox.

This practice-led research was, on one level, an investigation into specific pieces of technology, protocols and software and their use in performance. On another level, it was an artistic expression of the question 'what is agency?' and of how our separation (as humans) from the technology that we create is diminishing over time, towards integration. The interaction between technology and performer in the combination of NGS and EEG dictated that rapid task switching would be a key element of the score; indeed, it determined to some degree the shape and material of the score itself. The finished score demanded so much of the performer that it led the composer to reconsider the relationship between composer and performer with respect to the ethics of extremely difficult performance material. This composer/performer relationship is in turn framed by the relationships that performers have with each other and with an audience.

The implications of this research reach into multiple areas: the contribution that a better understanding of neuroscience can make to musicians and composers, the development of good practice in experimental and mixed electronic music performance, the embodied versus disembodied nature of working with computers, and the future of HCI in musical contexts. In many cases within the paradigm of experimental music, the composer and the interaction designer/creator are one and the same person, and the user of a new, custom-made interface may therefore remain its only user. In contrast, Clasp Together (beta) represents a triangular, iterative process, incorporating composition, technology and contemporary performance practice.

ACKNOWLEDGEMENTS

The authors would like to thank Dr. Lauren Hayes for commissioning and organizing the Inventor-Composer Coaction event, Dr. John Matthias and Tim Hodgson for authorizing the use of the NGS and for their help in understanding its workings, and Alex Fiennes for his expert sound engineering at the concert. Thanks also to John Harris, the members of the Red Note Ensemble, and Prof. Peter Nelson, Prof. Nigel Osborne and Prof. Richard Coyne at the University of Edinburgh.

NOTES

- Correspondence can be addressed to: Dr. J Harry Whalley, Reid School of Music, Alison House, University of Edinburgh, 12 Nicolson Sq, Edinburgh, EH8 9DF, Scotland. E-mail: harry.whalley@ed.ac.uk

- The augmented performance Clasp Together (beta) was a collaboration between composer Harry Whalley, digital media researcher Panos Mavros and clarinetist Peter Furniss.

- The Red Note Ensemble performed Clasp Together (beta) on the 9th of May 2012 at The Jam House, Edinburgh. The Inventor-Composer Coaction (ICC) is a research group established at ECA within the University of Edinburgh, http://www.inventorcomposer.net

- NP-complete problems have no known efficient (polynomial-time) solutions, in contrast to problems in class P. Whether P equals NP is one of the most important open questions in computer science. Computational complexity has been studied in relation to many games with simple rules, such as Go and John Conway's Game of Life (Demaine & Hearn, 2005). Partly as a result, computer Go is still in its infancy, with 2013 being the first year in which Go programs were able to challenge high-level Go masters.

- The game took place between Agotaoxin (a human player) and Zen19N (2014), a Go program by Yoji Ojima running on a 12-core Mac Pro (game used with permission). The stop animation used by the composer as a basis for the piano improvisation can be found at http://tinyurl.com/GoGameWhalley

- Invasive EEG, in which the electrodes are inserted into the brain, is very rarely used in humans; it can localise electrical activity to just a few cells.

- The Emotiv EPOC™ has been used in researching auditory processing disorders (Stytsenko, Jablonskis & Prahm, 2011), in studying cognitive reactions to urban environments (Aspinall, Mavros, Coyne & Roe, 2013), and in a growing number of other fields of study.

- For the first performance it was felt advisable that John Harris conduct the Red Note Ensemble, given the relatively short rehearsal time within the context of a large number of new pieces on a single programme.

- It is worth noting that composer and performer had worked together before, and therefore the extra-musical aspects of the composition were not entirely unexpected.

REFERENCES

- Agotaoxin and Zen19N. (2014). Game of go [stop animation]. Retrieved from http://tinyurl.com/GoGameWhalley.

- American Go Association. (2014). Notable quotes about Go. Retrieved from http://www.usgo.org/notable-quotes-about-go

- Aspinall, P., Mavros, P., Coyne, R. & Roe, J. (2013). The urban brain: Analysing outdoor physical activity with mobile EEG. British Journal of Sports Medicine, 49(4), 272-276.

- Berweck, S. (2012). It worked yesterday. Unpublished doctoral dissertation, University of Huddersfield, UK.

- Buehler, M. (2014). More materials, maestro. New Scientist, 221(2954), 30-31.

- Demaine, E. D. & Hearn, R. A. (2005). Playing games with algorithms: Algorithmic combinatorial game theory. Games of No Chance, 3, 3-56.

- Desine, N. (2011). Alphasphere - a musical instrument for now. Retrieved from http://www.alphasphere.com

- Donnarumma, M. (2011). Xth Sense. Retrieved from http://marcodonnarumma.com/works/xth-sense/

- Guljajeva, V., Canet, M. & Mealla, S. (2012). "Neuroknitting", Varvara & Mar. Retrieved from http://www.varvarag.info/neuroknitting/

- Hofstadter, D. R. (1979). Gödel, Escher, Bach. New York: Basic Books.

- Hofstadter, D. R. (2006). What is it like to be a strange loop? In U. Kriegel & K. Williford (Eds.), Self-representational approaches to consciousness (pp. 465-516). Cambridge, Mass.: MIT Press.

- Humphrey, N. (1992). A history of the mind. New York: Simon & Schuster.

- ICC. (2012). Inventor-Composer Coaction. Retrieved from http://people.ace.ed.ac.uk/students/s9809024/icc/neurogranular.htm

- Izhikevich, E. (2003). Simple model of spiking neurons. IEEE Transactions on Neural Networks, 14(6), 1569-1572.

- Jackendoff, R. (1987). Consciousness and the computational mind. Cambridge, Mass.: MIT Press.

- Jones, D., Hodgson, T., Grant, J., Matthias, J., Outram, N. & Ryan, N. (2009). The fragmented orchestra. Proceedings of New Interfaces for Musical Expression (NIME 2009) Conference. Retrieved from http://users.notam02.no/arkiv/proceedings/NIME2009/nime2009/pdf/author/nm090140.pdf

- Kettle, D. (2012, May 10). Gig review: Red Note Ensemble, Edinburgh Jam House. The Scotsman. Retrieved from http://www.scotsman.com/what-s-on/music/gig-review-red-note-ensemble-edinburgh-jam-house-1-2285166

- McNutt, E. (2003). Performing electroacoustic music: A wider view of interactivity. Organised Sound, 8(3), 297-304.

- Merriam-webster.com. (2014). Synapse - definition and more from the free merriam-webster dictionary. Retrieved from http://www.merriam-webster.com/dictionary/synapse

- Miranda, E. R. & Matthias, J. (2009). Music neurotechnology for sound synthesis: Sound synthesis with spiking neuronal networks. Leonardo, 42(5), 439-442.

- Monsell, S. (2003). Task switching. Trends in Cognitive Sciences, 7(3), 134-140.

- Nonclassical.greedbag.com. (n.d.). John Matthias, Nick Ryan - Cortical songs :: Nonclassical. Retrieved from http://nonclassical.greedbag.com/buy/cortical-songs-1/

- Paile, D. [dpaile] (2011, June 8). Brain music [video file]. Retrieved from http://www.youtube.com/watch?v=xYfw7QTZC4g

- Plasticity installation. (2014). Retrieved from https://www.youtube.com/watch?v=rB5SPPyW-as

- Stytsenko, K., Jablonskis, E. & Prahm, C. (2011, June). Evaluation of consumer EEG device Emotiv Epoc. Poster presented at MEi: CogSci student conference 2011, Ljubljana.

- Swartz, B. E. (1998). The advantages of digital over analog recording techniques. Electroencephalography and Clinical Neurophysiology, 106(2), 113-117.

- Whalley, H. (2012a, Oct. 6). Clasp Together (beta) [video file]. Retrieved from http://vimeo.com/50880180

- Whalley, J. H. (2012b). Clasp Together (beta). Retrieved from https://static.squarespace.com/static/5310ad63e4b0d9c24dab9f4e/t/54746aa1e4b0e110a61744b4/1416915617359/4_+ClaspTogether+.pdf

- Whalley, H. (2014). Portfolio of compositions. Unpublished doctoral dissertation, University of Edinburgh, UK. Retrieved from https://www.era.lib.ed.ac.uk/handle/1842/9579

- Wolpaw, J., Birbaumer, N., McFarland, D., Pfurtscheller, G. & Vaughan, T. (2002). Brain-computer interfaces for communication and control. Clinical Neurophysiology, 113(6), 767-791.

- Wylie, G. & Allport, A. (2000). Task switching and the measurement of "switch costs". Psychological Research, 63(3-4), 212-233.

- Zioga, P., Chapman, P., Ma, M. & Pollick, F. (2014). A wireless future: Performance art, interaction and the brain-computer interfaces. In International Conference on Live Interfaces 2014, 20th - 23rd November 2014, Lisbon, Portugal. Retrieved from http://eprints.hud.ac.uk/22799/

APPENDIX

The technical requirements to perform Clasp Together (beta) are:

- Laptop running Max/MSP with the NGS Audio Unit (AU) installed

- Audio interface with two analogue I/O and fast stable drivers (e.g. RME)

- Small Mixer (2 XLR inputs, 1 auxiliary send/return)

- Laptop running Emotiv EPOC™ Affectiv Suite and Mind Your OSCs

- Emotiv EPOC™ Bluetooth headset

- Front of House (FOH) mixer (8 inputs, stereo, 2 aux sends/returns: one pre-fade (to NGS), one post-fade (to FX))

- Appropriate microphone(s) for each instrument

Three technicians/engineers:

- Technician 1: fits the EEG headset and connects it to the Affectiv Suite

- Technician 2: activates/deactivates the NGS and activates the gesture samples

- Technician 3: front-of-house sound engineer